Introduction to Multimodal AI

Multimodal AI is the next frontier in artificial intelligence, enabling machines to process and understand information from multiple sources simultaneously, such as text, images, audio, and video. Unlike traditional AI models that focus on a single type of input, multimodal AI models integrate diverse data streams, offering richer insights and more accurate results.


For instance, a multimodal AI system can analyze a photo, interpret a spoken question, and provide a detailed response by combining visual and textual information. This makes interactions with AI more natural and human-like, bridging the gap between digital understanding and real-world complexity.

👉 According to IBM Research, multimodal AI is an approach in which machines integrate and interpret different forms of data (such as language, images, audio, and video) within a unified system to generate more accurate and context-aware results.

Applications of multimodal AI are expanding rapidly. In healthcare, it can evaluate medical images alongside patient records for better diagnoses. In creative industries, it powers tools that generate captions, videos, or music by connecting different forms of input. Customer service is another area where multimodal AI enhances experiences by interpreting speech, text, and facial cues simultaneously.

The benefits of multimodal AI are clear: improved accuracy, context-aware decision-making, and more intuitive human-computer interactions. As AI continues to evolve, multimodal AI models are set to transform industries, making technology smarter, more adaptive, and capable of understanding the world in ways that were once impossible.

📌 Overview of Multimodal Learning in AI

Multimodal learning in AI refers to the ability of intelligent systems to process and combine information from different types of data, such as text, images, audio, and video. Instead of analyzing these inputs separately, multimodal AI integrates them to create a richer and more complete understanding of context. For example, while traditional AI might analyze a medical report as plain text, multimodal AI can connect that report with X-ray images, voice notes from doctors, and even patient history, producing deeper insights. This approach mirrors how humans naturally interpret the world—by using multiple senses together—making it a significant step toward building more human-like, adaptable, and intelligent machines.

What is Multimodal AI and How It Works

Artificial Intelligence (AI) has evolved far beyond simple text recognition or image classification. One of the most exciting advancements in recent years is Multimodal AI, a type of AI system designed to understand and process information from multiple sources simultaneously—such as text, images, audio, video, and sensor data. Unlike traditional AI models, which are limited to one form of input, multimodal AI can merge insights from various types of data to produce richer, more accurate, and context-aware outputs.

At its core, multimodal AI mimics the way humans perceive the world. When we look at a picture and read its caption, or listen to a video while observing the visuals, our brains integrate multiple forms of information to create a holistic understanding. Similarly, multimodal AI models combine different data “modalities” to interpret information in a more human-like way. This capability allows the AI to handle more complex tasks that single-modality models cannot, such as generating detailed descriptions of images, answering questions based on video content, or providing real-time analysis of audio and visual data together.

How Multimodal AI Works

Multimodal AI works by using specialized architectures that can process and combine data from various sources. Typically, each type of data—text, images, audio, or video—is first processed by a dedicated model trained for that modality. For instance, images might be analyzed using convolutional neural networks (CNNs), text using transformers or large language models (LLMs), and audio using recurrent neural networks (RNNs) or audio transformers. After individual processing, the outputs from these models are merged in a unified representation space, allowing the AI to understand the relationships between different modalities.
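The pipeline described above (one encoder per modality, then a shared representation space) can be sketched in a few lines. This is a toy illustration only: the hand-written feature extractors stand in for real CNN, transformer, or audio models, the random projections stand in for trained projection layers, and every name here (`encode_text`, `to_shared_space`, `SHARED_DIM`, and so on) is hypothetical.

```python
import math
import random

SHARED_DIM = 4  # assumed size of the shared representation space

def encode_text(text):
    """Toy text encoder: word count, mean word length, question flag."""
    words = text.split()
    mean_len = sum(len(w) for w in words) / len(words) if words else 0.0
    is_question = 1.0 if text.strip().endswith("?") else 0.0
    return [float(len(words)), mean_len, is_question]

def encode_image(pixels):
    """Toy image encoder: mean, min, max brightness and row count of a 2D grid."""
    flat = [p for row in pixels for p in row]
    return [sum(flat) / len(flat), min(flat), max(flat), float(len(pixels))]

def encode_audio(samples):
    """Toy audio encoder: mean absolute amplitude and zero-crossing count."""
    energy = sum(abs(s) for s in samples) / len(samples)
    crossings = sum(1 for a, b in zip(samples, samples[1:]) if a * b < 0)
    return [energy, float(crossings)]

def make_projection(in_dim, seed):
    """Random matrix standing in for a trained projection layer."""
    rng = random.Random(seed)
    return [[rng.uniform(-1.0, 1.0) for _ in range(in_dim)] for _ in range(SHARED_DIM)]

def to_shared_space(features, projection):
    """Project modality-specific features into the shared space and L2-normalize."""
    out = [sum(w * x for w, x in zip(row, features)) for row in projection]
    norm = math.sqrt(sum(v * v for v in out)) or 1.0
    return [v / norm for v in out]

text_vec = to_shared_space(encode_text("What breed is this dog?"), make_projection(3, seed=0))
image_vec = to_shared_space(encode_image([[0.1, 0.8], [0.4, 0.9]]), make_projection(4, seed=1))
audio_vec = to_shared_space(encode_audio([0.2, -0.1, 0.3, -0.4]), make_projection(2, seed=2))

# All three vectors now have the same length, so they can be compared or merged.
joint = [sum(vals) / 3 for vals in zip(text_vec, image_vec, audio_vec)]
```

The point of the projection step is that the raw features have different sizes (3, 4, and 2 here), while everything downstream only ever sees vectors of length `SHARED_DIM`.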


👉 The foundation of multimodal AI lies in deep learning models that process text, images, audio, and video together, producing more accurate and context-aware results.

This process often involves feature extraction, where key patterns are identified from each input type. Once extracted, the AI uses fusion techniques to combine these features. Some systems use early fusion, combining raw inputs or low-level features into a single representation before a shared model processes them. Others use late fusion, in which each modality is processed by its own model and only the resulting predictions or scores are merged for the final decision. Intermediate (hybrid) approaches merge features partway through the network. The choice of fusion method depends on the application and the type of data being used.
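To make the difference between the two fusion styles concrete, here is a minimal sketch using tiny logistic models. The feature values, weights, and function names (`early_fusion`, `late_fusion`, `linear_score`) are made up for illustration; a real system would learn the weights from data.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def linear_score(weights, bias, features):
    """A one-layer logistic model: probability from a weighted sum."""
    return sigmoid(sum(w * x for w, x in zip(weights, features)) + bias)

def early_fusion(image_feats, text_feats, weights, bias):
    """Concatenate the features first, then run ONE model on the joint vector."""
    joint = image_feats + text_feats
    return linear_score(weights, bias, joint)

def late_fusion(image_feats, text_feats, image_model, text_model, mix=0.5):
    """Run one model PER modality, then blend their output probabilities."""
    p_image = linear_score(*image_model, image_feats)
    p_text = linear_score(*text_model, text_feats)
    return mix * p_image + (1.0 - mix) * p_text

# Hypothetical features and weights, chosen only to make the example run.
image_feats = [0.9, 0.2]
text_feats = [0.4]

p_early = early_fusion(image_feats, text_feats, weights=[1.0, -0.5, 2.0], bias=-0.3)
p_late = late_fusion(
    image_feats,
    text_feats,
    image_model=([1.2, -0.4], 0.0),  # (weights, bias) for the image-only model
    text_model=([1.5], -0.2),        # (weights, bias) for the text-only model
)
```

In the early-fusion path the single model can learn interactions between image and text features. In the late-fusion path each model stays independent, which makes it easier to swap or retrain one modality without touching the other.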

Real-World Applications

Multimodal AI is no longer just a research concept—it is increasingly applied in practical scenarios across industries. In healthcare, multimodal AI can analyze medical images alongside patient history and lab results to improve diagnostic accuracy. In customer service, AI agents can interpret spoken questions, text queries, and visual information to provide faster and more accurate assistance. In creative industries, multimodal AI powers tools that generate music, video captions, or multimedia presentations by connecting visual, textual, and audio inputs.

Autonomous vehicles are another prominent example. Self-driving cars rely on data from cameras, LiDAR sensors, radar, and GPS to navigate safely. Multimodal AI systems integrate all these inputs to make real-time decisions, detect obstacles, and predict traffic patterns—tasks that single-modality models would struggle to handle.

👉 Fusion techniques are the core methods that bring together information from text, images, audio, and video, giving a multimodal system a unified understanding of its inputs.

Benefits of Multimodal AI

The advantages of multimodal AI are substantial. By combining multiple data sources, it improves accuracy and context understanding, reduces the chances of misinterpretation, and enables AI systems to handle more complex, real-world problems. It also enhances human-computer interaction, as users can provide input in multiple forms, such as speaking a query while pointing to an object, and the AI can understand both simultaneously.

Furthermore, multimodal AI encourages innovation across sectors, enabling applications that were previously impossible. From advanced medical diagnostics to immersive virtual assistants and interactive learning platforms, this technology is redefining what AI can do.

Challenges and Future Outlook

Despite its potential, multimodal AI faces challenges. Integrating diverse data types requires sophisticated models and significant computational resources. Ensuring data alignment, managing missing or noisy inputs, and maintaining real-time performance are ongoing technical hurdles. Privacy and ethical concerns are also critical, especially when dealing with sensitive personal data across multiple modalities.

However, the future of multimodal AI is promising. As models become more efficient, accessible, and capable of handling increasingly complex data, we can expect a new generation of AI applications that understand the world more like humans do—holistically, intuitively, and intelligently.

Applications of Multimodal AI

Artificial Intelligence is rapidly advancing, and one of the most powerful developments is Multimodal AI—systems capable of processing and combining different types of data such as text, images, audio, and video. Unlike single-modality models, which focus on only one kind of input, multimodal AI understands the world in a more human-like way by merging multiple information streams. This capability is unlocking a wide range of real-world applications across industries.

1. Healthcare and Medical Diagnosis

One of the most impactful applications of multimodal AI is in healthcare. Traditional AI systems could only process one type of data, such as medical scans or patient notes. Multimodal AI, however, can analyze X-rays, MRI scans, patient history, and lab results together. By combining these sources, it can help doctors reach more accurate diagnoses and treatment recommendations.

For example, an AI model can detect patterns in an MRI scan, cross-check them with a patient’s symptoms, and correlate the findings with medical literature. This not only improves diagnostic accuracy but also saves time for healthcare professionals, leading to faster treatment decisions.

2. Customer Service and Virtual Assistants

In customer support, multimodal AI is transforming how businesses interact with clients. Traditional chatbots rely only on text input, which limits their effectiveness. But multimodal systems can handle voice, text, and even images.

For instance, a customer could upload a photo of a damaged product, describe the issue verbally, and the AI assistant would combine both inputs to provide an immediate solution. This makes customer interactions smoother, reduces waiting times, and creates a more human-like support experience.

3. Education and E-Learning

Multimodal AI is enhancing the education sector by creating more personalized and engaging learning environments. Educational platforms can integrate video lectures, written materials, speech recognition, and interactive quizzes into one seamless system.

Imagine a student watching a science video, asking questions verbally, and receiving both text and visual explanations in response. Such interaction helps different types of learners—visual, auditory, and reading-based—grasp concepts more effectively. Teachers can also use multimodal analytics to track student performance across various inputs, making the learning process smarter and more adaptive.

4. Autonomous Vehicles and Smart Transportation

Self-driving cars depend on multiple sources of data, such as cameras, LiDAR sensors, radar, and GPS. Multimodal AI combines all these inputs to make real-time driving decisions. For example, it can detect pedestrians visually, interpret traffic signs, estimate distances using LiDAR, and synchronize data with GPS maps.

📌 Learn more: NVIDIA’s AI for autonomous vehicles

This combination allows autonomous vehicles to understand their environment in a holistic way, improving road safety and efficiency. Beyond cars, multimodal AI is also being applied in smart traffic management systems, where it analyzes live camera feeds, vehicle data, and weather updates to optimize traffic flow.

5. Creative Industries and Content Generation

Multimodal AI is revolutionizing content creation. Tools powered by this technology can generate captions for images, create videos from text prompts, or even compose music by analyzing sound and visual patterns. For instance, platforms can generate a video summary of a news article or design digital artwork based on descriptive text.

📌 Example: OpenAI CLIP for multimodal creativity

This is particularly useful for marketers, educators, and media companies, as it allows them to produce high-quality content quickly. By blending text, visuals, and audio, multimodal AI brings creativity and efficiency together in ways that were once unimaginable.

6. Security and Surveillance

In the security domain, multimodal AI plays a crucial role in fraud detection and surveillance. Banking systems can combine transaction logs (text data), voice authentication (audio), and facial recognition (image/video) to verify a customer’s identity. This layered approach makes it harder for fraudsters to bypass security measures.

In public safety, multimodal AI integrates data from security cameras, sensors, and audio recordings to detect unusual activities. For example, it could recognize suspicious behavior in a crowded area while simultaneously picking up warning sounds like alarms or shouting, enabling faster response times.

7. Healthcare Wearables and Smart Devices

Modern wearable devices like fitness trackers and smartwatches collect multimodal data, such as heart rate, motion, sleep patterns, and voice inputs. Multimodal AI processes this information to provide users with health insights, early warnings about potential issues, and personalized fitness recommendations. This application bridges healthcare and lifestyle, empowering individuals to manage their well-being proactively.

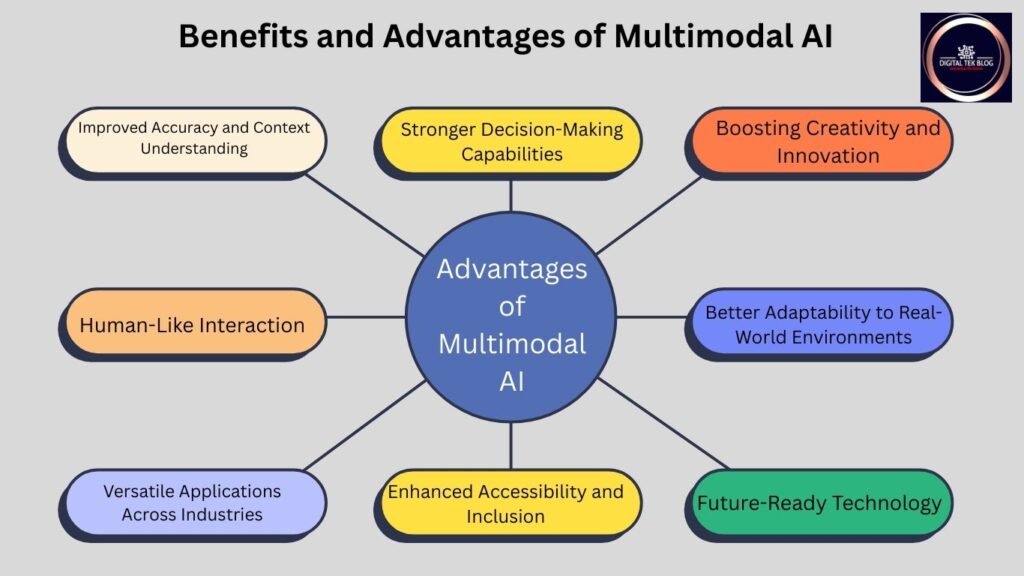

Benefits and Advantages of Multimodal AI

Artificial Intelligence is moving beyond traditional single-task models into more advanced systems that can understand the world in a deeper way. At the forefront of this progress is Multimodal AI, a technology that can interpret and connect information from multiple sources—such as text, images, audio, and video—all at once. This ability makes multimodal AI more powerful, flexible, and closer to human intelligence. Let’s explore the key benefits and advantages that make it so transformative.

1. Improved Accuracy and Context Understanding

One of the greatest strengths of multimodal AI is its ability to combine data sources, leading to more accurate results. A text-only AI may misunderstand a query if the language is vague, but when paired with visual or audio input, the AI gains additional context. For example, in healthcare, analyzing both a medical scan and the patient’s history provides a more precise diagnosis than relying on one data type alone. By merging multiple perspectives, multimodal AI reduces errors and delivers more reliable outputs.

2. Human-Like Interaction

Humans naturally take in information through several channels, such as listening, seeing, reading, and even observing gestures. Multimodal AI mirrors this by integrating different input forms, making human-computer interaction smoother and more natural. For instance, you could ask a virtual assistant a spoken question while pointing your camera at an object. The AI would process your speech, recognize the object visually, and provide a complete, accurate answer. This multi-sensory approach makes digital communication more intuitive and user-friendly.

3. Versatile Applications Across Industries

Multimodal AI unlocks possibilities across a wide range of industries.

- Healthcare: Combines lab reports, medical images, and patient notes for better diagnostics.

- Education: Enhances learning by integrating text, visuals, and interactive audio tools.

- Customer Support: Understands both written queries and spoken language, improving response quality.

- Autonomous Vehicles: Uses inputs from cameras, sensors, and maps for safer navigation.

- Creative Fields: Generates music, video captions, or art by blending different input types.

This versatility makes multimodal AI a strong fit for problems that require richer context and deeper insights.

4. Stronger Decision-Making Capabilities

Single-modality systems can struggle when data is incomplete or unclear. Multimodal AI addresses this by drawing on multiple sources, which makes decision-making more robust and informed. For example, in finance, analyzing text-based news reports along with real-time market graphs provides a clearer picture for investment strategies. In self-driving cars, integrating data from radar, LiDAR, and cameras allows safer and quicker decisions in unpredictable conditions.
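One simple way to get this robustness is to fuse only the modalities that actually reported a value. The sketch below assumes each sensor outputs an obstacle probability (or `None` when it has no reading) and that per-sensor trust weights are given; the function name, sensor names, and weights are illustrative, not taken from any real driving stack.

```python
def fuse_available(scores, weights):
    """Weighted average over the modalities that reported a score.

    A score of None marks a missing modality; its weight is dropped and the
    remaining weights are renormalized.
    """
    present = {name: s for name, s in scores.items() if s is not None}
    if not present:
        raise ValueError("no modality produced a score")
    total_weight = sum(weights[name] for name in present)
    return sum(weights[name] * s for name, s in present.items()) / total_weight

# Hypothetical trust weights per sensor.
weights = {"camera": 0.5, "lidar": 0.3, "radar": 0.2}

# LiDAR has dropped out; the decision falls back to camera and radar.
obstacle_prob = fuse_available(
    {"camera": 0.9, "lidar": None, "radar": 0.7},
    weights,
)
```

A single-sensor system has nothing to fall back on when its one input is missing, whereas here the estimate degrades gradually as modalities drop out.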

5. Enhanced Accessibility and Inclusion

Multimodal AI is also a step forward in accessibility. By combining text, speech, and visuals, it can better support people with disabilities. For instance, visually impaired individuals can benefit from AI that describes images aloud, while those with hearing difficulties can rely on real-time captions and contextual text analysis. This makes digital technology more inclusive, ensuring that more people can interact effectively with AI-driven systems.

6. Boosting Creativity and Innovation

Another exciting advantage is how multimodal AI encourages creativity. By combining text prompts, images, and audio, it enables the creation of new forms of digital content. Artists, writers, and designers are already using multimodal tools to generate ideas, produce artwork, or even compose music. The fusion of different data types allows for experiences that were once limited to human imagination—such as interactive learning platforms, AI-generated films, or personalized multimedia content.

7. Better Adaptability to Real-World Environments

The real world is complex, filled with overlapping signals and diverse information streams. Multimodal AI is naturally better suited for this environment because it can handle multiple modalities at once. Whether it’s interpreting a traffic situation, analyzing multimedia social media posts, or monitoring factory equipment through images and sensor data, multimodal AI adapts seamlessly to real-world challenges.

8. Future-Ready Technology

Finally, the biggest advantage of multimodal AI is its future potential. As AI becomes more integrated into daily life, systems will need to understand the world in the same way humans do—through combined senses and contextual reasoning. Multimodal AI lays the foundation for this future by creating smarter, more flexible, and more intuitive systems that can grow with advancing technology.

Challenges and Solutions in Multimodal AI

Multimodal AI has become one of the most powerful innovations in artificial intelligence, enabling systems to understand and process different types of data such as text, images, audio, and video together. While its potential is remarkable, developing and deploying multimodal systems is not without challenges. Let’s explore the key issues and the solutions that researchers and businesses are adopting to overcome them.

1. Data Integration and Alignment

One of the biggest hurdles in multimodal AI is aligning information from different sources. For example, connecting spoken words to the correct object in an image or matching video frames with corresponding text can be complex. Data from different modalities often have different structures, formats, and timelines.

Solution: Advanced alignment techniques, such as attention mechanisms and transformer-based architectures, help synchronize multiple inputs. Pretrained foundation models are also improving the ability to link data types accurately, making integration smoother.
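The attention mechanism mentioned here can be illustrated with scaled dot-product cross-attention, where each word (query) scores every image region (key) and takes a weighted mix of the region features (values). The two-dimensional vectors below are invented for the example; real models use learned embeddings with hundreds of dimensions.

```python
import math

def softmax(xs):
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def cross_attention(queries, keys, values):
    """Scaled dot-product attention from one modality (queries) onto another."""
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys]
        attn = softmax(scores)
        all_weights.append(attn)
        outputs.append(
            [sum(w * v[j] for w, v in zip(attn, values)) for j in range(len(values[0]))]
        )
    return outputs, all_weights

# Hypothetical embeddings: two words attending over three image regions.
word_queries = [[4.0, 0.0], [0.0, 4.0]]              # e.g. "dog", "ball"
region_keys = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]   # region 0, region 1, background
region_values = region_keys                          # reuse keys as values for simplicity

attended, attn_weights = cross_attention(word_queries, region_keys, region_values)

# Index of the region each word attends to most strongly.
best_region = [row.index(max(row)) for row in attn_weights]
```

The attention weights act as a soft alignment table: each row says how strongly one word is linked to each image region.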

2. Data Scarcity and Quality Issues

High-quality multimodal datasets are limited. Unlike text datasets, which are widely available, multimodal data (image + text, video + audio, etc.) is harder to collect, label, and maintain. Poor-quality or biased data can reduce model accuracy and fairness.

Solution: Researchers are building larger multimodal benchmarks and relying on self-supervised learning, where models learn from raw, unlabeled data. Synthetic data generation also helps fill the gaps by creating realistic multimodal training samples.
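One widely used self-supervised objective for paired data is a contrastive loss, the general idea behind models such as CLIP: matching image and caption embeddings are pulled together, and mismatched pairs are pushed apart, so no human labels are required. The sketch below is a simplified version under stated assumptions: embeddings are already L2-normalized, and the `temperature` value is an arbitrary choice.

```python
import math

def log_softmax(xs):
    """Numerically stable log-softmax."""
    m = max(xs)
    log_total = m + math.log(sum(math.exp(x - m) for x in xs))
    return [x - log_total for x in xs]

def contrastive_loss(image_embs, text_embs, temperature=0.1):
    """Symmetric contrastive loss; pair i of each list is assumed to match."""
    n = len(image_embs)
    logits = [
        [sum(a * b for a, b in zip(img, txt)) / temperature for txt in text_embs]
        for img in image_embs
    ]
    # Image -> text: each row should put its mass on the diagonal entry.
    loss_i2t = -sum(log_softmax(logits[k])[k] for k in range(n)) / n
    # Text -> image: same idea, applied to the columns.
    loss_t2i = -sum(
        log_softmax([logits[r][k] for r in range(n)])[k] for k in range(n)
    ) / n
    return (loss_i2t + loss_t2i) / 2.0

# Hypothetical normalized embeddings for two image/caption pairs.
images = [[1.0, 0.0], [0.0, 1.0]]
captions_aligned = [[1.0, 0.0], [0.0, 1.0]]
captions_swapped = [[0.0, 1.0], [1.0, 0.0]]

loss_good = contrastive_loss(images, captions_aligned)
loss_bad = contrastive_loss(images, captions_swapped)
```

Training would adjust the encoders to reduce this loss; here, the aligned captions already score far lower than the swapped ones.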

3. Computational Complexity

Processing multiple data types at the same time requires heavy computational power, large storage, and high energy consumption. This makes training multimodal AI models costly and less accessible to smaller organizations.

Solution: Model optimization techniques such as knowledge distillation, parameter sharing, and efficient fusion methods are reducing resource requirements. Cloud-based AI platforms also make high-performance computing more affordable and scalable.
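As an illustration of knowledge distillation, the sketch below computes the usual soft-target loss: the KL divergence between the temperature-softened output distributions of a large "teacher" model and a small "student" model. The logits and the temperature are invented for the example.

```python
import math

def softmax(xs):
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p_teacher = softmax([z / temperature for z in teacher_logits])
    p_student = softmax([z / temperature for z in student_logits])
    kl = sum(p * math.log(p / q) for p, q in zip(p_teacher, p_student) if p > 0)
    return kl * temperature ** 2

# Hypothetical logits over three classes.
teacher = [4.0, 1.0, 0.5]
student_close = [3.5, 1.2, 0.4]
student_far = [0.2, 3.0, 1.0]

loss_close = distillation_loss(student_close, teacher)
loss_far = distillation_loss(student_far, teacher)
```

Minimizing this loss nudges the small model toward the teacher's full output distribution rather than only its top prediction, which is how a compact model can recover much of a larger model's behavior at lower cost.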

4. Real-Time Processing Challenges

Applications like autonomous driving or virtual assistants require real-time responses. Delays in processing multimodal inputs can cause critical errors or poor user experiences.

Solution: Researchers are working on lightweight multimodal models designed for faster inference. Edge AI is also gaining traction, where computation happens locally on devices instead of relying only on cloud servers, improving speed and reducing latency.

5. Ethical and Privacy Concerns

Multimodal AI often handles sensitive information, such as facial data, voice recordings, or medical records. Without proper safeguards, it raises privacy risks and potential misuse.

Solution: Stronger data governance frameworks, differential privacy techniques, and ethical AI guidelines are being implemented to ensure responsible use. Transparency in how data is collected and used also builds trust with users.
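To give a flavor of differential privacy, here is a minimal sketch of the Laplace mechanism applied to a count query. The records, the `epsilon` value, and the function names are illustrative; production systems should rely on a vetted privacy library rather than hand-rolled noise.

```python
import random

def laplace_noise(scale, rng):
    """Laplace(0, scale) sample, built as the difference of two exponentials."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def private_count(records, predicate, epsilon, rng):
    """Count matching records and add noise calibrated to the privacy budget.

    Adding or removing one person changes a count by at most 1 (sensitivity 1),
    so the noise scale is 1 / epsilon.
    """
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)

# Hypothetical patient records combining an imaging flag with a voice-note flag.
records = [
    {"scan_abnormal": True, "has_voice_note": True},
    {"scan_abnormal": False, "has_voice_note": True},
    {"scan_abnormal": True, "has_voice_note": False},
    {"scan_abnormal": True, "has_voice_note": True},
]

rng = random.Random(42)
noisy = private_count(records, lambda r: r["scan_abnormal"], epsilon=1.0, rng=rng)
```

A smaller `epsilon` means more noise and stronger privacy. The released number is still useful in aggregate, but it no longer reveals whether any single individual is in the data.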

Conclusion – Why Multimodal AI Matters

In the fast-paced world of technology, Multimodal AI is more than just an innovation—it is a transformational leap in how machines interact with data and humans. Traditional AI models are powerful but limited; they typically process only one type of input, such as text or images. Multimodal AI breaks through that limitation by bringing together multiple forms of information—text, visuals, sound, video, and even sensor data—into a unified system. This ability makes AI not just smarter, but also more practical, adaptable, and human-like in its understanding.

The importance of multimodal AI lies in its context awareness. For example, if you ask a question about a photo while speaking, a traditional AI might only respond to the text or voice. A multimodal system, however, analyzes your voice tone, the actual words you used, and the image itself, merging all three to deliver a far richer response. This context-driven intelligence is what makes multimodal AI stand out from older models.

From a real-world perspective, multimodal AI matters because it is already reshaping industries. In healthcare, it helps doctors by combining medical imaging with patient records to create more accurate diagnoses. In customer service, it allows virtual assistants to process speech, text, and facial expressions simultaneously, improving the quality of support. Creative industries, education platforms, and even autonomous vehicles benefit from its ability to integrate and interpret different data streams at once.

Another reason multimodal AI is significant is its impact on human-computer interaction. For decades, people have had to adapt to computers, often using rigid commands or text-based queries. Multimodal AI flips that relationship—it adapts to us. Whether we type, talk, gesture, or show an image, the AI can understand. This shift makes technology more inclusive, natural, and accessible for everyone, regardless of their technical background.

Looking toward the future, multimodal AI matters because it represents the next phase of artificial intelligence evolution. By combining multiple modalities, these systems reduce errors, make smarter decisions, and open the door to new applications that were once science fiction. Imagine AI tutors that can read a student’s expressions, listen to their questions, and adjust explanations in real time. Or personal assistants that can interpret both your words and your environment to offer meaningful help.

In short, multimodal AI is not just an improvement in artificial intelligence—it is a foundation for the future of intelligent systems. It bridges the gap between human perception and machine processing, making AI more powerful, relatable, and useful in everyday life. This is why multimodal AI truly matters.

FAQs on Multimodal AI

1. What is Multimodal AI?

Multimodal AI is a type of artificial intelligence that can process and understand information from multiple sources—such as text, images, audio, video, and sensor data. Unlike traditional AI, which usually works with one input type, multimodal AI combines different modalities to deliver more accurate and human-like responses.

2. How does Multimodal AI work?

Multimodal AI works by first processing each type of data separately using specialized models: for example, images through computer vision, text through natural language processing, and audio through speech recognition. Then, it fuses the extracted features from all these sources into a unified representation. This fusion allows the AI to understand context more effectively and provide intelligent outputs.

3. What are the key applications of Multimodal AI?

Multimodal AI is used in many fields:

- Healthcare – combining medical images and patient history for better diagnosis.

- Customer service – virtual assistants that understand speech, text, and facial cues.

- Autonomous vehicles – integrating camera, radar, and LiDAR data for safe navigation.

- Education – AI tutors that respond to both spoken and written queries.

- Creative industries – generating captions, videos, and music using multiple inputs.

4. Why is Multimodal AI important?

It is important because it brings AI closer to human-like intelligence. Humans rarely rely on one type of information—we combine what we see, hear, and read. Multimodal AI mirrors this ability, which makes it more accurate, reliable, and useful in solving real-world problems.

5. What are the benefits of using Multimodal AI?

The main benefits include:

- Better accuracy by combining diverse data inputs.

- Context-aware responses that reduce errors.

- Natural interaction through voice, text, and visual understanding.

- Cross-industry innovation, enabling smarter healthcare, education, customer service, and more.

6. What challenges does Multimodal AI face?

Despite its advantages, multimodal AI faces challenges such as:

- High computational costs.

- Difficulty in aligning and synchronizing different data types.

- Handling missing or noisy data.

- Privacy and ethical issues when sensitive multimodal data is used.

7. What is the future of Multimodal AI?

The future of multimodal AI looks promising. As models become faster and more efficient, they will be widely adopted across industries. In the coming years, we may see AI systems that act as true assistants—understanding speech, gestures, and visuals together, making human-computer interaction seamless and more natural.