Artificial Neural Network

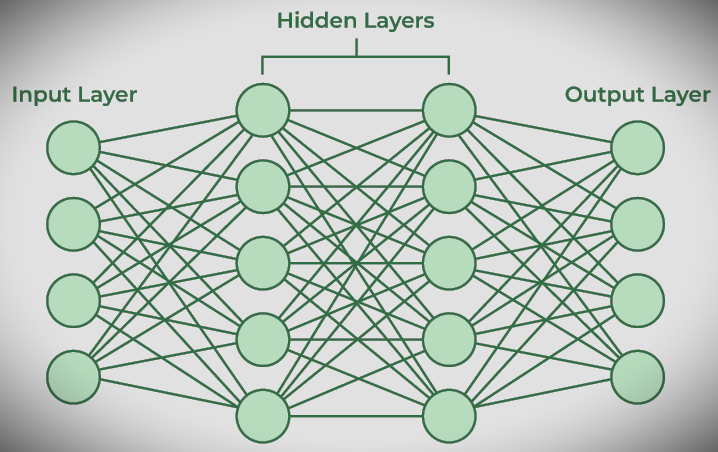

Artificial neural networks consist of artificial neurons called units. These units are arranged in a series of layers that together form the network. A layer may contain anywhere from a few dozen to millions of units, depending on how complex the patterns hidden in the dataset are. Artificial neural networks generally consist of an input layer, one or more hidden layers, and an output layer. The input layer receives the external data that the network needs to analyze or learn from. This data is then passed through the hidden layers, which transform the input into a representation the output layer can use. Finally, the output layer produces the network’s response to the input data.

In most neural networks, units are interconnected from one layer to the next. Each of these connections has a weight that determines how strongly one unit influences another. As data passes from unit to unit, each layer transforms it further, which ultimately produces the result at the output layer.

The structure and behavior of biological neurons serve as the basis for artificial neural networks, which are often simply called neural networks. The input layer is the first layer. It receives input from an external source and passes it to the first hidden layer. In a hidden layer, each neuron receives input from the neurons of the previous layer, calculates a weighted sum, and sends the result to the neurons in the next layer. Because the connections are weighted, each input can influence the result to a greater or lesser extent. These weights are adjusted during the training process, which is how the performance of the model improves.
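The weighted sum that each neuron calculates can be sketched in a few lines of Python. This is only an illustration: the function name `weighted_sum`, the example numbers, and the `bias` term are assumptions added here, not something the article specifies.

```python
def weighted_sum(inputs, weights, bias=0.0):
    """Return the weighted sum a single unit computes from its inputs.

    `bias` is an assumed extra term that many networks add; the text
    above only describes the weighted inputs.
    """
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    return sum(x * w for x, w in zip(inputs, weights)) + bias


# Three values arriving from the previous layer, each with its own weight.
inputs = [1.0, 2.0, 3.0]
weights = [0.5, 1.0, -0.5]
total = weighted_sum(inputs, weights, bias=0.5)
print(total)
```

Changing a single weight changes how much the matching input contributes to the total, which is the quantity training adjusts.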

Neural Networks

A neural network is a method in artificial intelligence that teaches computers to process data in a way inspired by the human brain.

Put another way, a neural network is a computer program that makes decisions in a manner loosely modeled on the human brain.

Every neural network is built from layers of nodes: an input layer, one or more hidden layers, and an output layer. Each node connects to other nodes and has its own weights and threshold. If the output of an individual node exceeds the specified threshold, that node is activated and sends data to the next layer. Otherwise, no data is passed to the next layer of the network.
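A minimal sketch of this activation rule, assuming the node fires when the weighted sum of its inputs exceeds a threshold value. The function name `threshold_unit` and the numbers are hypothetical and chosen only for illustration.

```python
def threshold_unit(inputs, weights, threshold):
    """Return 1 (activated) if the weighted sum exceeds the threshold, else 0."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total > threshold else 0


# Both inputs active: the weighted sum clears the threshold, so the node fires.
both_on = threshold_unit([1, 1], [0.6, 0.6], threshold=1.0)

# Only one input active: the weighted sum stays below the threshold.
one_on = threshold_unit([1, 0], [0.6, 0.6], threshold=1.0)

print(both_on, one_on)
```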

Neural networks rely on training data to learn and to improve their accuracy over time. Once a network has been tuned for accuracy, it becomes a powerful tool in artificial intelligence and computer science.

Neural networks are sometimes also called artificial neural networks (ANNs) or simulated neural networks (SNNs). They are a subset of machine learning and the heart of deep learning models.

Biological Neural Networks

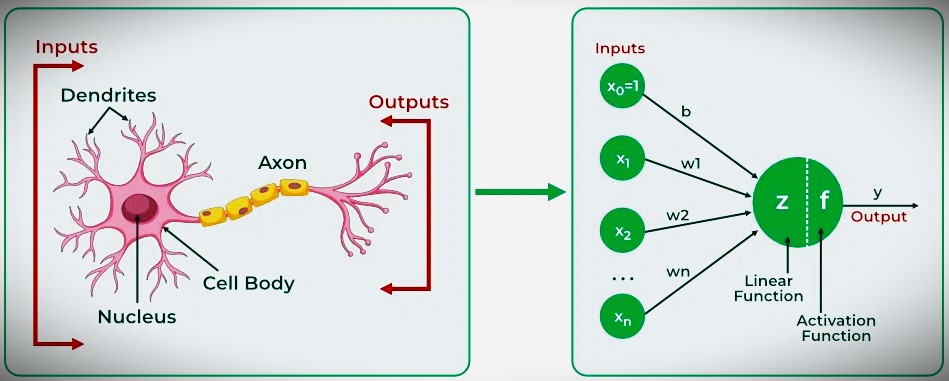

The concept of artificial neural networks comes from the biological neurons found in animal brains, so the two share many similarities in structure and function.

- Structure: The structure of artificial neural networks is inspired by biological neurons. A biological neuron consists of a cell body (soma) that processes impulses, dendrites that receive impulses, and an axon that transmits impulses to other neurons. The input nodes of an artificial neural network receive input signals, the hidden layer nodes process these signals, and the output layer nodes compute the final output by applying activation functions to the results of the hidden layers.

| Biological Neuron | Artificial Neuron |
|---|---|
| Dendrite | Inputs |
| Cell body (soma) | Nodes |
| Synapses | Weights |
| Axon | Output |

- Synapses: Synapses are the biological links between neurons that allow impulses to pass from the axon of one neuron to the dendrites of the next. In an artificial neural network, the weights that connect nodes in one layer to nodes in the next layer play the role of synapses. The strength of a link is determined by its weight value.

- Learning: In biological neurons, incoming impulses are processed in the cell body, which contains the nucleus. If the impulse is strong enough to reach a threshold, an action potential is generated and travels down the axon. Learning is made possible by synaptic plasticity, which describes the ability of synapses to become stronger or weaker over time in response to changes in activity. In artificial neural networks, backpropagation is the technique used for learning: it adjusts the weights between nodes based on the error, that is, the difference between the predicted and actual results.

| Biological Neuron | Artificial Neuron |
|---|---|
| Synaptic plasticity | Backpropagation |

- Activation: In biological neurons, activation is the firing of the neuron that occurs when an impulse is strong enough to reach a threshold. In an artificial neural network, a mathematical function known as an activation function maps a node’s weighted input to its output.
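To make the idea of an activation function concrete, here is a small sketch of three commonly used ones. The article does not say which activation functions it has in mind, so the choice of step, sigmoid, and ReLU is an assumption made for illustration.

```python
import math


def step(z, threshold=0.0):
    """Fire fully once the input reaches the threshold, like a firing neuron."""
    return 1.0 if z >= threshold else 0.0


def sigmoid(z):
    """Squash any real input into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def relu(z):
    """Pass positive inputs through unchanged and clip negative ones to zero."""
    return max(0.0, z)


# Compare how each function maps the same weighted inputs to outputs.
for z in (-2.0, 0.0, 2.0):
    print(z, step(z), round(sigmoid(z), 3), relu(z))
```

The step function is the closest analogue to the biological threshold described above, while the sigmoid is a smooth alternative that gradient-based training can work with.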

How do artificial neural networks work?

Artificial neural networks typically involve multiple processors working in parallel and organized into hierarchies or layers. The first layer is similar to the optic nerve in human visual processing and receives raw input information. Each successive layer receives the output from the previous layer instead of the raw input. This is similar to how neurons distant from the optic nerve receive signals from neurons closer to the optic nerve. The last layer produces the output of the system.

Each processing node has its own small sphere of knowledge, including what it has seen and any rules it was originally programmed with or developed for itself. The layers are highly interconnected: each node in layer N receives input from many nodes in layer N-1 and provides input to many nodes in layer N+1. The output layer can contain one or more nodes from which the generated response can be read.
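The layer-by-layer flow described here can be sketched as a small forward pass. This is a hedged illustration, not a reference implementation: the 2-3-1 layout, the hand-picked weights, the bias values, and the use of a sigmoid activation are all assumptions.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def layer_forward(inputs, weights, biases):
    """Compute one layer. Each row of `weights` belongs to one node of the layer."""
    return [
        sigmoid(sum(x * w for x, w in zip(inputs, row)) + b)
        for row, b in zip(weights, biases)
    ]


def network_forward(inputs, layers):
    """Feed the output of each layer into the next, as layer N feeds layer N+1."""
    activations = inputs
    for weights, biases in layers:
        activations = layer_forward(activations, weights, biases)
    return activations


# Hypothetical network: 2 inputs -> 3 hidden nodes -> 1 output node.
layers = [
    ([[0.5, -0.4], [0.3, 0.8], [-0.6, 0.1]], [0.0, 0.1, -0.1]),  # hidden layer
    ([[0.7, -0.2, 0.5]], [0.05]),                                # output layer
]
output = network_forward([1.0, 0.5], layers)
print(output)
```

Only the first layer sees the raw input. Every later layer works on the activations produced by the layer before it.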

Artificial neural networks are known for their adaptability. That is, the network modifies itself as it learns from initial training and as later runs provide more information about the world. The most basic learning models focus on weighting the input streams, which is how each node measures the importance of the input data from each of its predecessor nodes. Inputs that contribute to obtaining the correct answer are given more weight.

Applications of artificial neural networks

- Social Media: Artificial neural networks are heavily used in social media. For example, consider Facebook’s People You May Know feature, which suggests people you may know in real life so that you can send them friend requests. The suggestions come from artificial neural networks that analyze your profile, your interests, your current friends, their friends, and many other factors to estimate whom you are likely to know. Another common use of machine learning in social media is facial recognition. This is done by finding about 100 reference points on a person’s face and matching them with reference points already available in the database using a convolutional neural network.

- Marketing and Sales: When you log in to an e-commerce site like Amazon or Flipkart, it recommends products to buy based on your previous browsing history. Similarly, if you like pasta, services like Zomato and Swiggy will recommend restaurants based on your preferences and past order history. The same is true across new-age marketing areas such as book sites, movie services, and hospitality sites. It is achieved through personalized marketing, which uses artificial neural networks to identify customers’ likes, dislikes, and past purchase histories and to adjust marketing campaigns accordingly.

- Healthcare: Artificial neural networks are used in oncology to train algorithms that can identify cancerous tissue at the microscopic level with accuracy comparable to that of trained physicians. Various rare diseases that manifest as physical characteristics can be identified at an early stage using facial analysis of patient photographs. The full-scale introduction of artificial neural networks into the health care environment can therefore improve the diagnostic capabilities of health care professionals and, ultimately, the quality of health care worldwide.

- Personal Assistants: You’ve probably heard of Siri, Alexa, Cortana, and others, depending on the phone or device you use. These are personal assistants, and they are examples of speech recognition systems that use natural language processing to interact with the user and generate responses accordingly. Natural language processing uses artificial neural networks to handle many of the assistants’ tasks, such as language syntax, semantics, correct speech, and managing ongoing conversations.

Image recognition was one of the first areas in which neural networks were successfully applied. But the technology’s uses have expanded to many more areas:

- Chatbots.

- NLP, translation, and language generation.

- Stock market predictions.

- Delivery driver route planning and optimization.

- Drug discovery and development.

- Social media.

- Personal assistants.

The best use cases involve processes that operate according to strict rules or patterns and involve large amounts of data. If the data involved is too large for humans to make sense of in a reasonable time, the process is likely a prime candidate for automation with artificial neural networks.

How do neural networks learn?

Typically, an ANN is first trained by being fed large amounts of data. Training involves providing input and telling the network what the output should be. For example, if you want to build a network that identifies the faces of actors, the initial training might consist of a set of photos containing the faces of actors, non-actors, masks, statues, and animals. Each input is accompanied by matching identification, such as the actor’s name or “not actor” or “not human” information. Providing the answers allows the model to adjust its internal weights to do its job better.
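The idea of providing inputs together with the expected outputs, and letting the model adjust its weights, can be sketched with a single neuron. The example below is an assumption-laden toy: it trains one sigmoid unit with gradient descent on the logical OR function, which stands in for the labeled photos. The names `train` and `predict`, the learning rate, and the epoch count are all hypothetical choices.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, inputs):
    return sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)


def train(examples, epochs=2000, learning_rate=0.5):
    """Adjust weights so predictions move toward the provided answers."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in examples:
            # Error between what the network said and what it was told.
            error = predict(weights, bias, inputs) - target
            # Gradient step for a sigmoid unit with cross-entropy loss.
            weights = [w - learning_rate * error * x
                       for w, x in zip(weights, inputs)]
            bias -= learning_rate * error
    return weights, bias


# Each training example pairs an input with the answer the network should give.
examples = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]
weights, bias = train(examples)
predictions = [round(predict(weights, bias, x)) for x, _ in examples]
print(predictions)
```

Real networks repeat the same kind of update across many layers and far more examples, but the principle of nudging weights to reduce the error is the same.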

For example, if nodes David, Diane, and Dakota tell node Ernie that the current input image is a photo of Brad Pitt, but node Durango says it is George Clooney, and the training program confirms it is Pitt, Ernie reduces the weight it gives to Durango’s input and gives more weight to the inputs from David, Diane, and Dakota.
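The Ernie example can be written down as a toy update rule. This is a deliberately simplified sketch, not backpropagation: the function `adjust_weights`, the starting weights, and the fixed step size are assumptions made to mirror the story above.

```python
def adjust_weights(weights, votes, correct_label, learning_rate=0.1):
    """Raise the weight of inputs that voted correctly and lower the others."""
    updated = {}
    for node, weight in weights.items():
        if votes[node] == correct_label:
            updated[node] = weight + learning_rate
        else:
            # Keep weights non-negative in this toy version.
            updated[node] = max(0.0, weight - learning_rate)
    return updated


# Ernie starts out trusting all four upstream nodes equally.
weights = {"David": 0.25, "Diane": 0.25, "Dakota": 0.25, "Durango": 0.25}
votes = {
    "David": "Brad Pitt",
    "Diane": "Brad Pitt",
    "Dakota": "Brad Pitt",
    "Durango": "George Clooney",
}

new_weights = adjust_weights(weights, votes, correct_label="Brad Pitt")
print(new_weights)
```

After the update, Durango’s input counts for less and the three nodes that agreed with the confirmed answer count for more.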

Neural networks use several principles when defining rules and making decisions (each node deciding what to send to the next layer based on input from the previous layer). These include gradient-based training, fuzzy logic, genetic algorithms, and Bayesian techniques. Some basic rules can be given about the relationships of objects in the data being modeled.

For example, a facial recognition system may be told, “Eyebrows are found above eyes,” or “Mustaches are below a nose. Mustaches are above and/or beside a mouth.” Preloading rules can speed up training and make the model more powerful sooner. But it also builds in assumptions about the nature of the problem that may prove irrelevant and unhelpful, or incorrect and counterproductive, so deciding which rules, if any, to build in is important.

Additionally, neural networks can amplify cultural biases because of the assumptions people make when training the algorithms. Biased datasets are an ongoing challenge for training systems that find answers on their own through pattern recognition in the data. When the data given to an algorithm is not neutral, and almost no data is, the machine propagates bias.

Types of neural networks

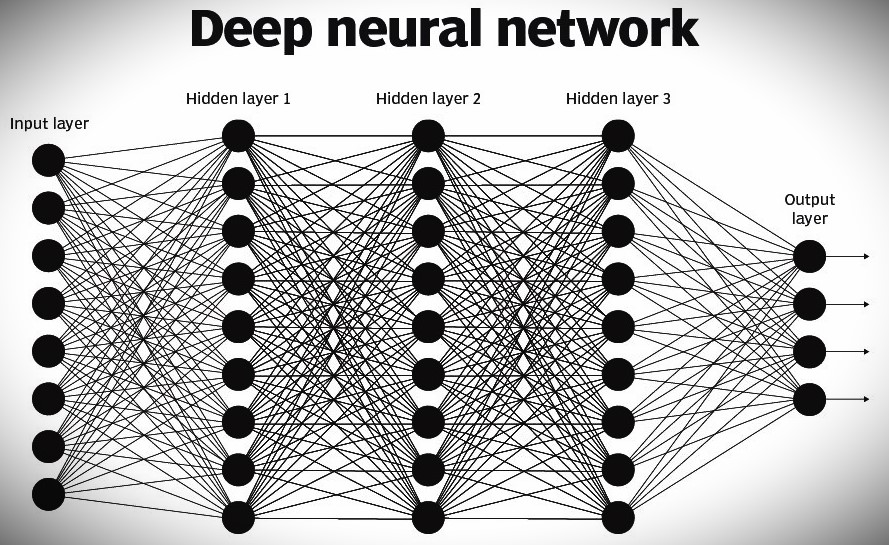

Neural networks are sometimes described in terms of their depth, that is, how many layers they have between the input and output, the so-called hidden layers of the model. This is why the term neural network is used almost interchangeably with deep learning. They can also be described by the number of hidden nodes in the model or by the number of inputs and outputs each node has. Variations on the classic neural network design allow different forms of forward and backward propagation of information between layers.

Specific types of artificial neural networks include the following:

- Feedforward Neural Network

  - One of the simplest variants of a neural network, it passes information in one direction, from the input nodes through to the output nodes. The network may or may not have hidden layers, which makes its behavior easier to interpret. It is able to handle large amounts of noise.

  - This type of ANN computational model is used in technologies such as facial recognition and computer vision.

- Recurrent Neural Network (RNN)

  - More complex than feedforward networks, RNNs store the outputs of processing nodes and feed the results back into the model. This is how the model learns to predict the outcome of a layer. Each node in the RNN model acts as a memory cell and continues to perform calculations and operations.

  - This neural network starts with forward propagation, like a feedforward network, and then remembers the processed information so it can be reused later. If the network’s prediction is incorrect, the system corrects itself and continues working toward the correct prediction during backpropagation.

  - This type of ANN is often used in text-to-speech conversion.

- Convolutional Neural Network (CNN)

  - The CNN is one of the most popular models in use today. This computational model uses a variation of multilayer perceptrons and contains one or more convolutional layers that can be either fully connected or pooled. These convolutional layers create feature maps that record a region of the image, which is ultimately broken into rectangles and sent on for nonlinear processing.

  - CNN models are particularly popular in the field of image recognition. They are used in many of the most advanced applications of AI, including facial recognition, text digitization, and NLP. Other use cases include paraphrase detection, signal processing, and image classification.

- Deconvolutional Neural Network

  - Deconvolutional neural networks run the CNN model process in reverse. They attempt to find lost features or signals that were originally considered unimportant to the CNN system’s task. This network model can be used for image synthesis and analysis.

- Modular Neural Network

  - These contain multiple neural networks that work independently of each other. The networks do not communicate with or interfere with each other during the computation process. As a result, complex or large-scale computational processes can be executed more efficiently.

- Radial Basis Function Neural Network

  - A radial basis function is a function whose value depends on the distance of a point from a center. A radial basis function (RBF) network has two layers. In the first layer, the input is mapped onto all the radial basis functions in the hidden layer, and the output layer then calculates the output in the next step. RBF networks are commonly used to model data that represents an underlying trend or function.
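As a rough illustration of the radial basis function network described in the list above, the sketch below maps an input onto a set of radial basis functions and then combines their responses in an output layer. The Gaussian form of the basis function, the `width` parameter, the centers, and the output weights are all assumptions chosen for the example.

```python
import math


def gaussian_rbf(point, center, width=1.0):
    """Response that depends only on the distance between point and center."""
    squared_distance = sum((p - c) ** 2 for p, c in zip(point, center))
    return math.exp(-squared_distance / (2.0 * width ** 2))


def rbf_network(point, centers, output_weights, width=1.0):
    # First layer: map the input onto every radial basis function.
    hidden = [gaussian_rbf(point, c, width) for c in centers]
    # Second layer: combine the hidden responses into a single output.
    return sum(h * w for h, w in zip(hidden, output_weights))


# Two hypothetical centers with opposite output weights.
centers = [[0.0, 0.0], [1.0, 1.0]]
output_weights = [1.0, -1.0]

near_first = rbf_network([0.0, 0.0], centers, output_weights)
near_second = rbf_network([1.0, 1.0], centers, output_weights)
print(near_first, near_second)
```

An input close to the first center produces a positive output and an input close to the second center produces a negative one, because each basis function responds most strongly near its own center.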

Advantages of artificial neural networks

Artificial neural networks have the following advantages:

- Parallel processing capability. The parallel processing power of ANNs allows the network to perform multiple tasks simultaneously.

- Information storage. ANNs store information across the entire network, not just in the database. This ensures that the entire network remains operational even if a small amount of data is lost from one location.

- Nonlinearity. The ability to learn and model non-linear, complex relationships helps model real-world relationships between inputs and outputs.

- Fault tolerance. ANNs are fault-tolerant, meaning that the corruption or failure of one or more cells in an ANN will not prevent it from producing output.

- Gradual degradation. The network degrades slowly over time rather than failing immediately when a problem occurs.

- Unlimited input variables. There are no restrictions on the input variables, such as how they are distributed.

- Observation-based decision making. Machine learning means that ANNs can learn from events and make decisions based on observations.

- Unorganized data processing. Artificial neural networks are very good at processing, sorting, classifying, and organizing large amounts of data.

- Ability to learn hidden relationships. ANNs can learn hidden relationships in data without being guided by fixed relationships. This means that ANNs can better model unstable data and non-stationary variance.

- Ability to generalize. The ability to generalize and infer relationships in unseen data means that ANNs can predict the output for data they have not seen before.
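The nonlinearity point in the list above can be illustrated with the XOR function, a classic case that a single threshold unit cannot represent but a network with one hidden layer can. The weights below are hand-picked for the example rather than learned, and the function names are hypothetical.

```python
def step(z):
    return 1 if z > 0 else 0


def xor_network(a, b):
    """Two hidden threshold units followed by one output unit."""
    h_or = step(a + b - 0.5)    # fires if at least one input is 1
    h_and = step(a + b - 1.5)   # fires only if both inputs are 1
    # Output fires when OR is on but AND is off, which is exactly XOR.
    return step(h_or - h_and - 0.5)


results = [xor_network(a, b) for a in (0, 1) for b in (0, 1)]
print(results)
```

The hidden layer is what makes the nonlinear relationship expressible: each hidden unit draws one straight boundary, and the output unit combines them.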

Disadvantages of artificial neural networks

While neural networks have many advantages, they also have some disadvantages:

- Lack of rules. The lack of rules for determining the appropriate network structure means that appropriate artificial neural network architectures can only be found through trial and error and experience.

- Hardware dependencies. Neural networks are hardware dependent, as they require processors with parallel processing power.

- Numerical conversion. Networks process numerical information. This means that all problems must be converted to numbers before they are presented to the ANN.

- Lack of trust. The lack of explanation behind the solutions a network produces is one of the biggest shortcomings of ANNs. The inability to explain the why and how behind a solution creates a lack of trust in the network.

- Inaccurate results. If not trained properly, ANNs can produce incomplete or inaccurate results.

- Black box nature. Because AI models behave like black boxes, it can be difficult to understand how neural networks make predictions and classify data.
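The numerical conversion point in the list above is often handled with an encoding step before data reaches the network. One common approach, shown here as an assumption rather than something the article prescribes, is one-hot encoding, which turns each category into a vector of zeros with a single one. The function name `one_hot_encode` and the sample labels are hypothetical.

```python
def one_hot_encode(values):
    """Convert categorical values into numeric vectors a network can consume."""
    categories = sorted(set(values))
    index = {category: i for i, category in enumerate(categories)}
    encoded = []
    for value in values:
        row = [0] * len(categories)
        row[index[value]] = 1
        encoded.append(row)
    return categories, encoded


labels = ["actor", "not actor", "not human", "actor"]
categories, encoded = one_hot_encode(labels)
print(categories)
print(encoded)
```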

History and timeline of neural networks

Neural networks have existed for decades and have made significant progress. Below, we discuss important milestones and developments in the history of neural networks.

- 1940s. In 1943, neurophysiologist Warren McCulloch and logician Walter Pitts created a circuit system to execute a simple algorithm intended to approximate the functioning of the human brain.

- 1950s. In 1958, American psychologist Frank Rosenblatt, sometimes described as the father of deep learning, created the perceptron, a type of artificial neural network that can learn and make decisions by changing its weights. The perceptron consists of one layer of computing units and can handle linearly separable problems.

- 1970s. American scientist Paul Werbos developed the backpropagation method, which facilitates the training of multilayer neural networks. It made deep learning possible by allowing weights to be adjusted across the network based on the error calculated at the output layer.

- 1980s. Cognitive psychologist and computer scientist Geoffrey Hinton began investigating the concept of connectionism with computer scientist Yann LeCun and a group of fellow researchers. Connectionism emphasizes the idea that cognitive processes emerge through interconnected networks of simple processing units. This period paved the way for modern neural networks and deep learning.

- 1990s. Jürgen Schmidhuber and Sepp Hochreiter, both computer scientists from Germany, proposed the long short-term memory recurrent neural network framework in 1997.

- 2000s. Geoffrey Hinton and his colleagues advanced the restricted Boltzmann machine (RBM), a type of generative artificial neural network that enables unsupervised learning. RBMs paved the way for deep belief networks and deep learning algorithms.

Research into neural networks picked up speed around 2010. The big data trend, in which companies collect large amounts of data, and the growth of parallel computing gave data scientists the training data and computing resources needed to run complex artificial neural networks. In 2012, a neural network called AlexNet won the ImageNet Large Scale Visual Recognition Challenge, an image classification competition. Since then, interest in artificial neural networks has grown and the technology continues to advance.

