Reinforcement learning enables a computer agent to learn behaviors based on the feedback received for its past actions.

Reinforcement learning (RL) in artificial intelligence allows AI-based systems to take actions in dynamic environments through trial and error, maximizing cumulative reward based on the feedback generated for each action. It is a subfield of machine learning. This article describes what reinforcement learning is, how it works, its main algorithms, and its practical applications.

What is reinforcement learning in artificial intelligence?

Reinforcement learning optimizes AI-powered systems by mimicking the way natural intelligence learns from experience. Such learning approaches help computer agents make critical decisions and achieve remarkable results in their intended tasks with little human involvement and without explicitly programming every behavior.

Well-known RL techniques include Monte Carlo methods, State-Action-Reward-State-Action (SARSA), and Q-learning. AI models trained with reinforcement learning algorithms have defeated human champions in several video and board games, including chess and Go.

Technically, reinforcement learning implementations can be classified into three types:

- Policy-based: This RL approach learns the policy (the agent's strategy for choosing actions) directly, adjusting it to maximize the return of the system. The learned policy can be deterministic or stochastic.

- Value-based: Value-based RL implementations learn a value function, such as Q(s, a), that estimates future reward, and then act by choosing the actions with the highest estimated value.

- Model-based: A model-based approach builds, or learns, a model of a specific environment that predicts how the environment responds to actions. The agent then plans or learns within this virtual model.

A typical reinforcement learning model can be represented by:

[Figure: a typical reinforcement learning model]

In the diagram above, a computer may be depicted as an agent in a specific state (St). It takes actions in its surroundings, the environment, to accomplish a particular objective. After each action, the agent receives feedback as a reward or penalty (R) and moves to a new state.
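The observe, act, and receive-feedback cycle can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: `CorridorEnv` is a hypothetical five-cell corridor with the goal in the last cell, actions are -1 (left) and +1 (right), and `run_episode` is an illustrative helper, not part of any RL library.

```python
import random


class CorridorEnv:
    """Hypothetical toy environment: cells 0..length-1, goal in the last cell."""

    def __init__(self, length=5):
        self.length = length
        self.state = 0

    def reset(self):
        self.state = 0
        return self.state

    def step(self, action):
        # action is -1 (left) or +1 (right); walls keep the agent inside
        self.state = min(max(self.state + action, 0), self.length - 1)
        done = self.state == self.length - 1
        reward = 1.0 if done else 0.0  # reward only on reaching the goal
        return self.state, reward, done


def run_episode(env, choose_action, max_steps=100):
    """Agent-environment loop: observe the state, act, receive reward and next state."""
    state = env.reset()
    total_reward = 0.0
    for _ in range(max_steps):
        action = choose_action(state)
        state, reward, done = env.step(action)
        total_reward += reward
        if done:
            break
    return total_reward


rng = random.Random(0)
print(run_episode(CorridorEnv(), lambda s: rng.choice([-1, 1])))  # random agent
print(run_episode(CorridorEnv(), lambda s: 1))  # always moves right: 1.0
```

Real RL toolkits wrap the same cycle in their own APIs; the class and function names here are only for illustration.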

Benefits of reinforcement learning

Reinforcement learning solves some complex problems that traditional machine learning algorithms cannot address. RL agents can act autonomously by exploring many possible actions and paths, which is why RL is sometimes discussed in connection with artificial general intelligence (AGI).

The key benefits of reinforcement learning are:

- Focus on long-term goals: Common ML algorithms often divide a problem into sub-problems and address them individually without considering the overall goal. RL, by contrast, focuses on the long-term goal: it maximizes the cumulative reward over a whole sequence of actions, even if that means accepting a smaller immediate reward.

- Easy data collection process: RL does not require a separate data collection process. As the agent operates in the environment, training data is collected dynamically through the agent’s reactions and experiences.

- Work in an evolving and uncertain environment: RL techniques are built on an adaptive framework in which agents learn from experience as they continue to interact with the environment. As environmental constraints change, RL algorithms can adapt to maintain or improve performance.
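The long-term focus comes from maximizing the cumulative, usually discounted, reward rather than the next reward alone. A minimal sketch, assuming a discount factor gamma of 0.9 (a common but arbitrary choice) and two made-up reward sequences:

```python
def discounted_return(rewards, gamma=0.9):
    """G = r0 + gamma*r1 + gamma**2*r2 + ..., computed backwards for one episode."""
    g = 0.0
    for r in reversed(rewards):
        g = r + gamma * g
    return g


quick_small = [1.0, 0.0, 0.0, 0.0]    # small reward right away
patient_large = [0.0, 0.0, 0.0, 5.0]  # larger reward three steps later
print(discounted_return(quick_small))    # 1.0
print(discounted_return(patient_large))  # about 3.645 (5 * 0.9**3)
```

Even after discounting, the delayed reward is worth more here, so an agent maximizing return would prefer the patient sequence.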

How does reinforcement learning work?

The working principle of reinforcement learning is based on the reward function. Let us understand the RL mechanism using an example.

- Suppose you are trying to teach your pet (a dog) a certain trick.

- Since your pet cannot understand human language, you will have to adopt a different strategy.

- Create situations in which your pet performs specific tasks, and reward it (for example, with a treat) when it does.

- Now, every time your pet encounters the same situation, he will be more eager to perform the same behavior for which he previously received a reward.

- This allows your pet to “learn” from the rewarding experience, know “what to do” when a particular situation arises, and repeat that behavior.

- Similarly, your pet will also learn what not to do when faced with certain situations.

Use case

In the above case,

- Your dog acts as the agent, and the home is the environment it moves through. The state here is the dog's current posture: it starts out sitting, and when you say certain words, it changes to walking.

- The transition from sitting to walking occurs when the agent reacts to your words while in the environment. The policy is the agent's rule for choosing an action in a given state, with the aim of achieving better results.

- When your pet moves to the second state (walking), it receives a reward (dog food).
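The dog example can be reduced to a tiny learning problem. The sketch below is an illustration, not a model of real animal training: the two behaviors, the `treat` reward function, and the parameter values are all hypothetical. The dog mostly repeats the behavior with the highest average reward so far and occasionally tries the other one (a simple epsilon-greedy rule).

```python
import random

ACTIONS = ["stay_sitting", "walk"]


def treat(action):
    """Hypothetical trainer: a treat (reward 1.0) only when the dog walks on command."""
    return 1.0 if action == "walk" else 0.0


def train_dog(trials=200, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    # Optimistic start: untried behaviors look good, so each one gets tried
    value = {a: 1.0 for a in ACTIONS}
    count = {a: 0 for a in ACTIONS}
    for _ in range(trials):
        if rng.random() < epsilon:
            action = rng.choice(ACTIONS)  # occasionally try something else
        else:
            action = max(ACTIONS, key=lambda a: value[a])  # repeat what paid off
        reward = treat(action)
        count[action] += 1
        # Running average of the rewards received for this behavior
        value[action] += (reward - value[action]) / count[action]
    return value


values = train_dog()
print(values)                       # {'stay_sitting': 0.0, 'walk': 1.0}
print(max(values, key=values.get))  # walk
```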

RL stepwise workflow

When training the agent using the reinforcement learning workflow, the following important elements are taken into account:

- Environment

- Reward

- Agent

- Training

- Deployment

Let’s understand each one in detail.

Step I: Define or create the environment

The RL process begins by defining the environment in which the agent operates. The environment can be a real physical system or a simulated one. Once you’ve determined your environment, you can start experimenting with your RL process.

Step II: Specify the reward

The next step is to define the reward for the agent. The reward serves as a performance indicator, allowing the agent to evaluate the quality of its actions against its goals. Designing an appropriate reward may take several iterations before it reliably encourages the intended behavior for a particular action.
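As a hedged illustration of why reward design can take a few iterations, the sketch compares two candidate reward definitions for a hypothetical corridor task: a reward only at the goal, and the same reward minus a small cost per step (the 0.01 cost is an arbitrary assumption). Only the second definition distinguishes a direct path from a detour.

```python
GOAL = 4  # hypothetical goal cell in a corridor of cells 0..4


def goal_only_reward(next_state):
    """Reward 1.0 only when the agent reaches the goal."""
    return 1.0 if next_state == GOAL else 0.0


def reward_with_step_cost(next_state, step_cost=0.01):
    """Same goal reward minus a small cost per step, which favors shorter paths."""
    return goal_only_reward(next_state) - step_cost


def episode_reward(visited_states, reward_fn):
    """Undiscounted sum of rewards along a sequence of visited states."""
    return sum(reward_fn(s) for s in visited_states)


short_path = [1, 2, 3, 4]
detour = [1, 0, 1, 2, 3, 4]
# The goal-only reward cannot tell these paths apart; the step cost can.
print(episode_reward(short_path, goal_only_reward), episode_reward(detour, goal_only_reward))  # 1.0 1.0
print(episode_reward(short_path, reward_with_step_cost) > episode_reward(detour, reward_with_step_cost))  # True
```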

Step III: Define the agent

Once the environment and rewards are finalized, you can create the agent by deciding how to represent its policy and which RL training algorithm to use. This process may include the following steps:

- Express the policy using a suitable neural network or lookup table.

- Choose an appropriate RL training algorithm.
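For small problems, the lookup-table option can be as simple as a dictionary keyed by (state, action). The sketch below assumes a hypothetical five-state corridor with two actions and uses epsilon-greedy action selection, which is one common choice rather than the only one; `epsilon_greedy` is an illustrative name.

```python
import random

N_STATES = 5
ACTIONS = (-1, +1)  # hypothetical corridor: move left or right

# Lookup-table policy representation: one value per (state, action) pair
q_table = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}


def epsilon_greedy(q, state, epsilon, rng):
    """With probability epsilon explore at random, otherwise take the highest-valued action."""
    if rng.random() < epsilon:
        return rng.choice(ACTIONS)
    return max(ACTIONS, key=lambda a: q[(state, a)])


rng = random.Random(0)
q_table[(2, +1)] = 0.5  # pretend training found that moving right from cell 2 pays off
print(epsilon_greedy(q_table, 2, epsilon=0.0, rng=rng))  # 1
```

When the number of states is too large for a table, the same interface can be backed by a neural network instead, which is the idea behind deep Q-networks.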

Step IV: Train and validate the agent

Train and validate your agent to improve its policy. As training continues, you may also need to refine the reward structure and the policy architecture. RL training takes time, ranging from minutes to days, depending on the application. For complex applications, training can be made faster by using system architectures in which multiple CPUs, GPUs, and computing systems run in parallel.
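Validation usually means running the current policy without exploration and measuring its return. A minimal sketch, assuming a hypothetical corridor (reward 1.0 for reaching the last cell) and a policy stored as a state-to-action table; `evaluate` is an illustrative helper. In this deterministic toy every episode gives the same result, but averaging over episodes matters when the environment or the policy is stochastic.

```python
def evaluate(policy, n_episodes=10, length=5, max_steps=20):
    """Average undiscounted return of a fixed policy on a hypothetical corridor.

    policy maps each cell 0..length-1 to an action (-1 or +1). Reaching the last
    cell gives 1.0; an episode that runs out of steps gives 0.0.
    """
    total = 0.0
    for _ in range(n_episodes):
        state = 0
        for _ in range(max_steps):
            state = min(max(state + policy[state], 0), length - 1)
            if state == length - 1:
                total += 1.0
                break
    return total / n_episodes


always_right = {s: +1 for s in range(5)}
always_left = {s: -1 for s in range(5)}
print(evaluate(always_right), evaluate(always_left))  # 1.0 0.0
```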

Step V: Deploy the policy

Once trained, the policy in an RL-enabled system serves as the decision-making component and can be deployed, for example, as C, C++, or CUDA code.

When deploying these policies, you may need to revisit the earlier stages of the RL workflow if the agent does not achieve optimal decisions and outcomes.

The agent may need to be retrained after making adjustments to the following factors:

- RL algorithm configuration

- Reward definition

- Action / state signal detection

- Environmental variables

- Training structure

- Policy framework

Reinforcement Learning Algorithms

RL algorithms are broadly divided into two types: model-based algorithms and model-free algorithms. These can be further divided into on-policy and off-policy types.

Model-based algorithms use a model of the environment, either given in advance or learned from the observed states, actions, and state changes caused by those actions, and use that model to plan. Model-free algorithms, on the other hand, learn a value function or policy directly by trial and error, without building a model of how the environment responds to actions.
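To show what a model-based agent can do with its model, the sketch below plans with value iteration on a tiny, hand-written model. The three-state `MODEL`, its rewards, and gamma = 0.9 are assumptions for illustration; a model-based agent could instead estimate such a table from the state changes it observes.

```python
# Hypothetical known model: MODEL[state][action] = (next_state, reward)
MODEL = {
    0: {"left": (0, 0.0), "right": (1, 0.0)},
    1: {"left": (0, 0.0), "right": (2, 1.0)},
    2: {},  # terminal state: no actions available
}


def value_iteration(model, gamma=0.9, tol=1e-8):
    """Plan with the model: apply Bellman optimality backups until values settle."""
    v = {s: 0.0 for s in model}
    while True:
        delta = 0.0
        for s, actions in model.items():
            if not actions:
                continue  # terminal state keeps value 0
            best = max(r + gamma * v[s2] for s2, r in actions.values())
            delta = max(delta, abs(best - v[s]))
            v[s] = best
        if delta < tol:
            return v


def greedy_policy(model, v, gamma=0.9):
    """Read off the action with the best one-step lookahead value in each state."""
    policy = {}
    for s, actions in model.items():
        if actions:
            policy[s] = max(actions, key=lambda a: actions[a][1] + gamma * v[actions[a][0]])
    return policy


v = value_iteration(MODEL)
print(v)                        # {0: 0.9, 1: 1.0, 2: 0.0}
print(greedy_policy(MODEL, v))  # {0: 'right', 1: 'right'}
```

A model-free method would reach a similar policy only by actually trying the actions many times, which is the trade-off described above.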

On-policy and off-policy algorithms can be better understood using the following mathematical notation:

The letter “S” represents the state, the letter “A” represents the action, and the symbol “π” represents the policy, which gives the probability of choosing each action in a given state. The action-value function Q(s, a) estimates the expected future reward of taking action a in state s and then following the policy, which helps the agent predict which actions will pay off.

On-policy methods learn Q(s, a) for the same policy the agent uses to choose its actions, while off-policy methods learn Q(s, a) for one policy (for example, the greedy policy) from actions chosen by a different, often more exploratory, policy.
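The two families differ only in the next-state term of their updates. In the notation of the Q-learning formula given later in this article (α = learning rate, γ = discount factor, R = reward, S_2 = next state), and writing A_2 for the action the agent actually takes in S_2:

$$\text{SARSA (on-policy):}\quad Q(s,a) \leftarrow (1-\alpha)\,Q(s,a) + \alpha\left(R + \gamma\, Q(S_2, A_2)\right)$$

$$\text{Q-learning (off-policy):}\quad Q(s,a) \leftarrow (1-\alpha)\,Q(s,a) + \alpha\left(R + \gamma \max_{a'} Q(S_2, a')\right)$$

SARSA evaluates the action its own policy picks next; Q-learning evaluates the best available action, whatever the exploring policy actually does.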

RL problems are usually formalized as Markov decision processes (MDPs), which emphasize the current state rather than past state information. Under the Markov property, the probability of the next state depends only on the current state and action, not on the sequence of states that led to it. This property plays an important role in reinforcement learning.

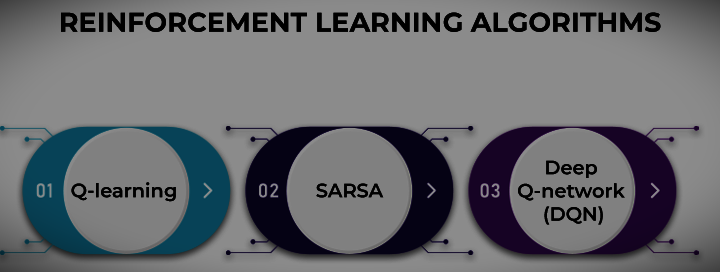

Let’s now dive into the vital RL algorithms:

1. Q-learning

Q-learning is an off-policy, model-free algorithm. It learns the value of the greedy policy while the agent explores with a different behavior policy, such as one that sometimes takes random actions (epsilon-greedy). The “Q” in Q-learning refers to the quality of an action in a given state, that is, how much future reward the action is expected to yield.

Q-learning stores its estimates in a table, often called a Q-table, with one entry per state-action pair. For example, if taking a particular action in a particular state earns a reward of 50, that experience increases the table entry for that state-action pair. The entries are updated incrementally with the rule below, which can be seen as a sample-based form of value iteration.

Policy iteration improves a policy step by step: it evaluates the current policy's value function and then updates the policy to choose the actions that this value function rates highest. Value iteration updates the value function directly until it converges, and the policy is read off at the end. Mathematically, the Q-learning update is expressed as:

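$$Q(s,a) \leftarrow (1-\alpha)\,Q(s,a) + \alpha\left(R + \gamma \max_{a'} Q(S_2, a')\right)$$

Where,

alpha (α) = learning rate,

gamma (γ) = discount factor,

R = reward,

S2 = next state,

max over a' of Q(S2, a') = future value (the highest estimated value available in the next state).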
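The update rule can be run on a small example. This is a minimal tabular Q-learning sketch, assuming a hypothetical five-cell corridor where reaching cell 4 gives a reward of 1, an epsilon-greedy behavior policy, and illustrative values alpha = 0.5, gamma = 0.9, and epsilon = 0.1. Ties between equal Q-values are broken at random so that early episodes explore both directions.

```python
import random

N_STATES, GOAL, ACTIONS = 5, 4, (-1, +1)  # hypothetical corridor, cells 0..4


def step(state, action):
    """Deterministic toy dynamics: reward 1.0 on reaching the goal cell."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    reward = 1.0 if next_state == GOAL else 0.0
    return next_state, reward, next_state == GOAL


def greedy(q, state, rng):
    """Highest-valued action, breaking ties at random."""
    best = max(q[(state, a)] for a in ACTIONS)
    return rng.choice([a for a in ACTIONS if q[(state, a)] == best])


def q_learning(episodes=200, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        s, done = 0, False
        while not done:
            # Behavior policy: epsilon-greedy, so the agent keeps exploring
            a = rng.choice(ACTIONS) if rng.random() < epsilon else greedy(q, s, rng)
            s2, r, done = step(s, a)
            # Target uses the best action in S2 (max), not the one taken next: off-policy
            future = 0.0 if done else max(q[(s2, b)] for b in ACTIONS)
            q[(s, a)] = (1 - alpha) * q[(s, a)] + alpha * (r + gamma * future)
            s = s2
    return q


q = q_learning()
policy = {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(GOAL)}
print(policy)  # {0: 1, 1: 1, 2: 1, 3: 1}
```

Because the target takes the max over the next state's actions, the agent learns the greedy policy even though it sometimes acts randomly, which is what makes Q-learning off-policy.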

2. SARSA

The State-Action-Reward-State-Action (SARSA) algorithm is an on-policy method, so it does not use the greedy max of Q-learning. Instead, SARSA updates Q(s, a) using the action the agent actually takes in the next state under its current policy. Its name lists the quantities used in each update: the state, action, reward, next state, and next action.
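For comparison, here is a minimal SARSA sketch on a hypothetical five-cell corridor (all names and parameter values are illustrative assumptions). Compared with tabular Q-learning, the key change is the target: it uses Q(S2, A2) for the next action A2 that the epsilon-greedy policy actually chooses, instead of the max over all actions.

```python
import random

N_STATES, GOAL, ACTIONS = 5, 4, (-1, +1)  # hypothetical corridor, cells 0..4


def step(state, action):
    """Deterministic toy dynamics: reward 1.0 on reaching the goal cell."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    return next_state, (1.0 if next_state == GOAL else 0.0), next_state == GOAL


def choose(q, state, epsilon, rng):
    """Epsilon-greedy with random tie-breaking; SARSA evaluates this same policy."""
    if rng.random() < epsilon:
        return rng.choice(ACTIONS)
    best = max(q[(state, a)] for a in ACTIONS)
    return rng.choice([a for a in ACTIONS if q[(state, a)] == best])


def sarsa(episodes=200, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        s, done = 0, False
        a = choose(q, s, epsilon, rng)
        while not done:
            s2, r, done = step(s, a)
            a2 = choose(q, s2, epsilon, rng)  # the action the agent will actually take
            future = 0.0 if done else q[(s2, a2)]  # on-policy target: Q(S2, A2)
            q[(s, a)] = (1 - alpha) * q[(s, a)] + alpha * (r + gamma * future)
            s, a = s2, a2
    return q


q = sarsa()
print({s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(GOAL)})
```

Because SARSA evaluates the exploratory policy it follows, its estimates account for occasional random moves; this is the practical meaning of on-policy.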

3. Deep Q-network (DQN)

In their basic form, Q-learning and SARSA store one value per state-action pair in a 2D array. These tabular algorithms cannot predict or update values for states they have never encountered, and the table becomes impractically large when there are many states.

Deep Q-networks (DQN) therefore replace the 2D array with a neural network that approximates Q(s, a) from features of the state. This lets the agent generalize to unseen states and makes RL practical for large inputs such as images.
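The core DQN update can be sketched without a deep-learning library. In the sketch below, a linear function of hypothetical state features stands in for the neural network (an assumption made to keep the example dependency-free), and the two transitions are made-up data. It also shows two ingredients commonly used with DQN: an experience-replay buffer sampled at random, and a separate target network copied from the online network.

```python
import random


class LinearQ:
    """Hypothetical stand-in for a Q-network: Q(s, a) = w_a . phi(s).

    A real DQN uses a multi-layer neural network; a linear model keeps the
    sketch dependency-free while showing the same kind of update.
    """

    def __init__(self, n_features, n_actions):
        self.w = [[0.0] * n_features for _ in range(n_actions)]

    def q(self, phi, a):
        return sum(wi * xi for wi, xi in zip(self.w[a], phi))

    def copy(self):
        clone = LinearQ(len(self.w[0]), len(self.w))
        clone.w = [row[:] for row in self.w]
        return clone


def dqn_update(online, target, batch, gamma=0.9, lr=0.1):
    """One semi-gradient step per (phi, a, r, phi2, done) transition in the minibatch."""
    n_actions = len(online.w)
    for phi, a, r, phi2, done in batch:
        # Bootstrapped target comes from the separate, periodically copied target network
        future = 0.0 if done else max(target.q(phi2, b) for b in range(n_actions))
        td_error = r + gamma * future - online.q(phi, a)
        # The gradient of w_a . phi with respect to w_a is phi
        online.w[a] = [wi + lr * td_error * xi for wi, xi in zip(online.w[a], phi)]


# Experience replay: store transitions, then learn from a random minibatch
replay = [
    ([1.0, 0.0], 1, 1.0, [0.0, 1.0], True),   # made-up transition that ends the episode
    ([0.0, 1.0], 0, 0.0, [1.0, 0.0], False),  # made-up intermediate transition
]
online = LinearQ(n_features=2, n_actions=2)
target = online.copy()
dqn_update(online, target, random.Random(0).sample(replay, k=2))
print(online.q([1.0, 0.0], 1))  # 0.1: moved from 0.0 toward the target of 1.0
```

In a full implementation, the target network would be refreshed from the online network every so many steps, and many minibatches would be drawn from a much larger buffer.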


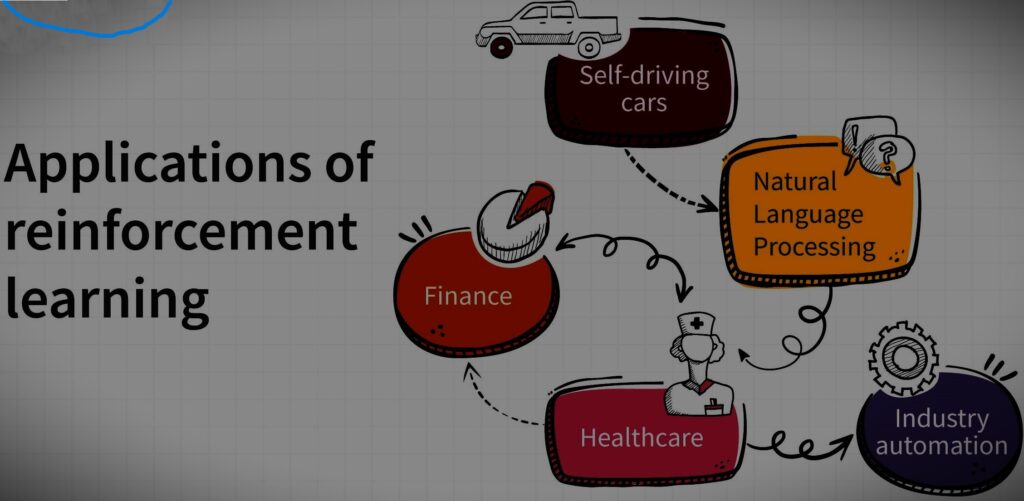

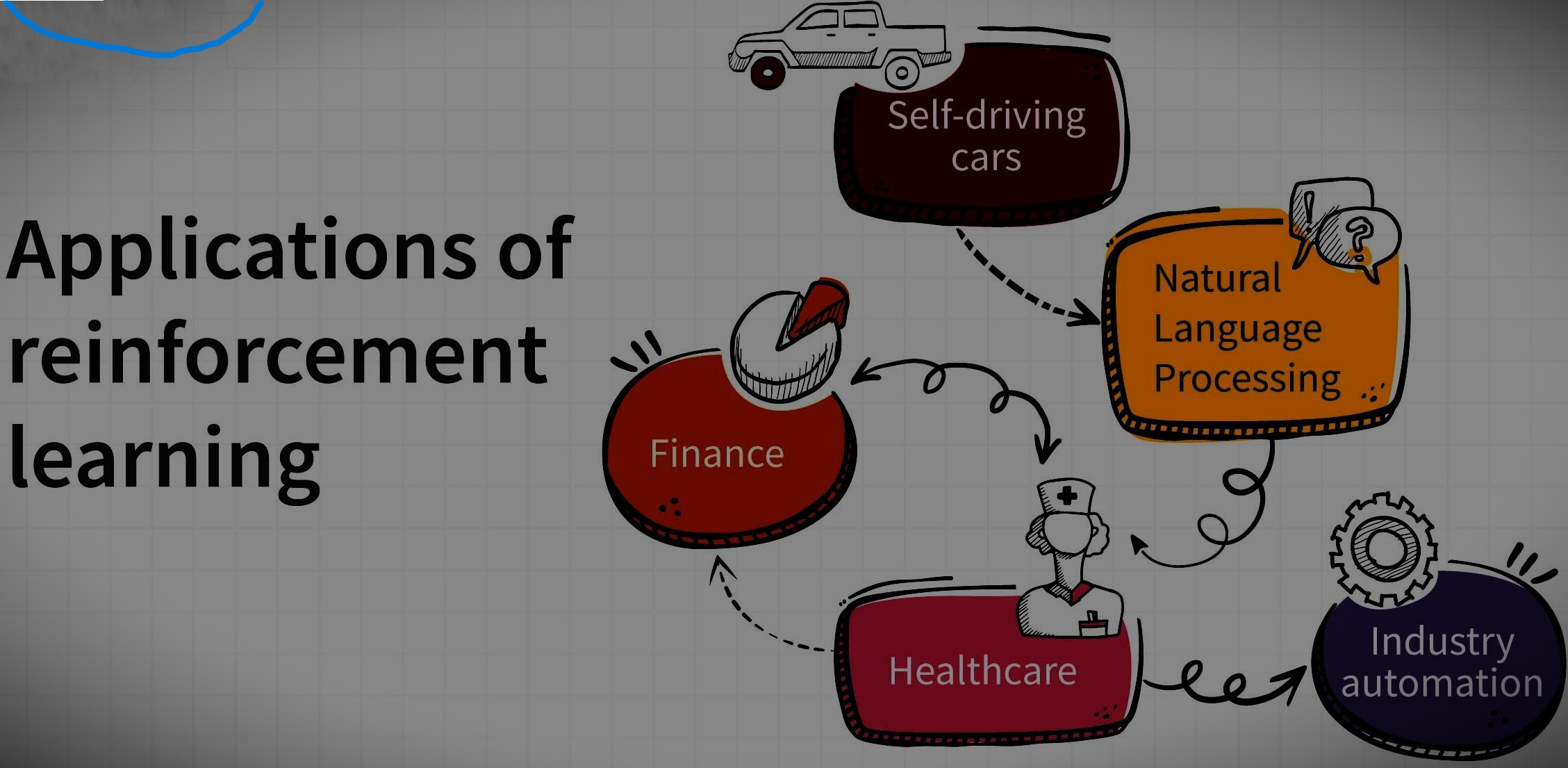

Uses of Reinforcement Learning in artificial intelligence

Reinforcement learning is designed to maximize the reward earned by an agent when completing a specific task. RL is beneficial for many real-life scenarios and applications, such as self-driving cars, robotics, healthcare, and AI bots.

Listed here are important applications of reinforcement learning in our daily lives that are shaping the field of AI.

1. Managing self-driving cars

For vehicles to operate autonomously in urban environments, they need adequate support from ML models that simulate the many scenarios and scenes the vehicle encounters. RL is useful when these models are trained in a dynamic environment, where many possible paths are explored and evaluated through the learning process.

Learning from experience makes RL a strong choice for self-driving cars that need to make good decisions quickly. Many variables, such as driving zone management, traffic handling, vehicle speed monitoring, and accident control, can be addressed with RL techniques.

A team of researchers at MIT has developed a simulation they have named “Deep Traffic” for autonomous units like drones and cars. This project is an open-source environment for developing algorithms that combine RL, deep learning, and computer vision.

2. Addressing the energy consumption problem

Rapid advances in AI development have enabled governments to address critical issues such as energy consumption. With the increasing number of IoT devices and commercial, industrial, and enterprise systems, servers are under increasing strain.

As reinforcement learning algorithms have become more popular, it has turned out that RL agents can learn to control physical parameters around servers without prior knowledge of the servers' state. The agents learn from data collected by multiple sensors that measure temperature, power, and other quantities; this data helps train deep neural networks that contribute to cooling the data center and regulating energy consumption. Deep Q-network (DQN) algorithms are often used in such cases.

3. Traffic signal control

Urbanization and the increasing demand for automobiles in big cities have raised concerns among authorities struggling to manage traffic congestion in urban environments. Reinforcement learning offers a promising solution: an RL model controls the traffic lights based on the traffic conditions within the area.

This means that the model considers traffic from multiple directions and learns, adapts, and adjusts the traffic lights in urban transportation networks.

4. Healthcare

RL plays an important role in the medical field, as dynamic treatment regimes (DTRs) have helped medical professionals manage the health of patients. A DTR uses a series of decisions to reach a final solution. This series of decisions may include the following steps:

- Check the patient’s survival status.

- Decide on the type of treatment.

- Find the appropriate dosage based on the patient’s condition.

- Set the administration time, and so on.

This series of decisions allows doctors to improve treatment strategies for complex diseases such as mental fatigue, diabetes, and cancer. Furthermore, DTRs help provide timely treatment and avoid the complications caused by delayed action.

5. Robotics

Robotics is the field of training robots to perform tasks, often by mimicking human behavior. But today’s robots lack the moral, social, and common-sense reasoning that humans use to accomplish their goals. In such cases, AI subfields like deep learning and RL can be combined (deep reinforcement learning) to achieve better results.

Deep RL is important for robots that assist in warehouse navigation, supplying required product parts, product packaging, product assembly, defect inspection, and so on. For example, deep RL models are trained on multimodal data, which is important for identifying missing parts, cracks, scratches, or total damage to machinery in a warehouse by scanning images containing billions of data points.

Additionally, deep RL helps with inventory management, as agents are trained to identify empty containers and fill them immediately.

6. Marketing

RL helps organizations streamline business strategies to maximize customer growth and achieve long-term goals. In the field of marketing, RL helps to provide personalized recommendations to users by predicting their preferences, reactions, and behavior towards specific products and services.

Bots trained with RL also consider variables such as the evolution of the customer mindset and dynamically learn the user’s changing needs based on user behavior. This allows companies to provide targeted, high-quality recommendations that improve profit margins.

7. Gaming

In gaming, reinforcement learning agents learn through experience and adapt to the game environment, executing a series of steps to achieve the desired outcome.

For example, AlphaGo, built by Google’s DeepMind, defeated European Go champion Fan Hui in October 2015. This was a major advance for AI models at the time. RL is used to build game-playing agents such as AlphaGo, which rely on deep neural networks, as well as to test games and find bugs within the game environment. Because RL executes many iterations without external intervention, it makes potential bugs easier to identify; gaming companies such as Ubisoft use RL to detect bugs.

Takeaway

Reinforcement learning automates the decision-making and learning processes. RL agents learn from their environment and experience without relying on direct supervision or constant human intervention.

Reinforcement learning is an important subset of AI and ML. It can also help develop autonomous robots, drones, and even simulators as it simulates human-like learning processes to understand their surroundings.

Advantages of reinforcement learning

There are so many benefits to reinforcement learning that many see it as the future of machine learning. But why? There are many situations in which data cannot be labeled effectively.

Because reinforcement learning learns from rewards, while supervised learning relies on large labeled datasets, reinforcement learning can be applied more broadly than supervised learning in such situations. The benefits of reinforcement learning are:

1. Datasets

Reinforcement learning does not require large labeled datasets. This is a huge advantage because, as the amount of data in the world grows, the cost of labeling it for all the necessary applications rises rapidly.

2. Innovation

This is revolutionary because, unlike reinforcement learning, supervised learning essentially imitates the people who provided the labeled data to its algorithm.

A supervised algorithm can learn to perform a task as well as or better than its teacher, but it cannot learn entirely new approaches to solving problems.

Reinforcement learning algorithms, on the other hand, can discover completely new solutions that humans would never have thought of.

3. Goal-oriented

Reinforcement learning is goal-oriented and can be used for sequences of actions, whereas supervised learning is primarily used to map inputs to outputs.

Reinforcement learning can be used for purposeful tasks such as robots playing football, self-driving cars reaching their destination, and algorithms that maximize return on investment for advertising dollars.

4. Adaptable

One of the benefits of reinforcement learning is its adaptability. Unlike supervised learning algorithms, which must be retrained on newly labeled data, reinforcement learning agents can keep learning as they interact with a new or changing environment, which reduces the need to retrain from scratch.

What are reinforcement learning applications?

1. Self-driving cars

Various papers have proposed deep reinforcement learning for autonomous driving. With self-driving cars, there are many different aspects to consider, such as speed limits in different locations, driving areas, collision avoidance, etc.

Autonomous driving tasks to which reinforcement learning can be applied include trajectory optimization, motion planning, dynamic paths, controller optimization, and highway scenario-based learning policies.

2. Industry automation

In industry, learning-based robots are used to perform various tasks. Apart from being more efficient than humans, these robots can also perform tasks that are dangerous for humans. In a data center, for example, cooling can be controlled by an AI system with little direct human intervention, although supervision by data center experts is still required.

The system works as follows:

- Take a snapshot of data from the data center every 5 minutes and feed it to the deep neural network.

- Next, predict how different combinations of actions will affect future energy consumption.

- Identify actions that reduce power consumption while maintaining certain safety standards.

- Send these actions to the data center for implementation.

- Verify the actions with the local control system.
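The steps above can be sketched as a simple control loop. Everything in this sketch is hypothetical: the candidate settings, the `predict_energy` and `predict_temp` functions (stand-ins for a trained neural network), the 25 °C safety limit, and the `local_control_accepts` check. It shows only the structure of predicting, filtering for safety, choosing, and verifying, not how any real data center system is implemented.

```python
from itertools import product

# Hypothetical candidate settings for two cooling controls
FAN_SPEEDS = [0.4, 0.6, 0.8]
CHILLER_SETPOINTS_C = [16.0, 18.0, 20.0]


def predict_energy(snapshot, fan, setpoint):
    """Placeholder for a trained network's predicted energy use (kW)."""
    return snapshot["it_load_kw"] * (0.2 + 0.3 * fan) - 2.0 * (setpoint - 16.0)


def predict_temp(snapshot, fan, setpoint):
    """Placeholder for a predicted server inlet temperature (deg C)."""
    return setpoint + 10.0 * (1.0 - fan)


def choose_action(snapshot, max_temp_c=25.0):
    # Score every combination, keep those predicted to be safe, pick the lowest energy
    candidates = [
        (predict_energy(snapshot, f, t), f, t)
        for f, t in product(FAN_SPEEDS, CHILLER_SETPOINTS_C)
        if predict_temp(snapshot, f, t) <= max_temp_c
    ]
    if not candidates:
        return None  # no safe option: keep the current settings
    _, fan, setpoint = min(candidates)
    return {"fan": fan, "setpoint_c": setpoint}


def local_control_accepts(action):
    """Placeholder for the data center's own verification of a proposed action."""
    return 0.0 <= action["fan"] <= 1.0 and 15.0 <= action["setpoint_c"] <= 22.0


snapshot = {"it_load_kw": 100.0}  # hypothetical sensor snapshot (taken every 5 minutes)
action = choose_action(snapshot)
if action is not None and local_control_accepts(action):
    print("apply", action)  # apply {'fan': 0.4, 'setpoint_c': 18.0}
```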

3. Finance

Supervised time series models can be used to predict not only stock prices but also future sales. However, these models do not decide what action to take at a particular stock price. This is where reinforcement learning (RL) comes in: RL agents can decide on such actions, namely whether to hold, buy, or sell. RL models are evaluated against market benchmarks to assess their performance.

4. Natural Language Processing

RL has several applications in NLP, including machine translation, question answering, and text summarization.

5. Healthcare

In health care, patients can receive treatment based on policies learned from RL systems. RL does not require any prior knowledge about the mathematical model of the biological system and can use prior experience to find optimal policies. This approach is easier to implement than other control-based systems in health care.
