5G and edge computing will open up a world of new revenue opportunities in manufacturing, gaming, entertainment, healthcare, retail and other sectors. How can communications service providers (CSPs) get ahead of their competitors? Find out everything you need to know below.

What is edge computing?

Edge computing, also known as mobile edge computing (MEC) or multi-access edge computing, is a distributed framework that brings an application’s processing and storage resources closer to where the data is produced or consumed.

Edge solutions place computing power closer to users, devices or data sources, providing benefits such as low latency, high bandwidth, on-device processing and data offloading. This improves application and service performance, security and reliability while reducing operating costs.

Edge computing infrastructure is hosted by a variety of service providers. The “edge” where execution resources are typically provided is within or at the boundary of the access network. However, it can also be installed on a company’s premises, such as in a factory building or at home, or as a wireless router in vehicles such as trains, airplanes, ambulances, and private cars.

Some edge use cases require applications to be deployed to multiple sites. In these cases, a distributed cloud can help by serving as an environment to run applications across multiple sites, with managed connections as a single solution.

Edge Computing Architecture

Edge computing is the processing and computing of client data closer to the data source than on a centralized server or cloud-based location. In its simplest form, edge computing brings computing resources, data storage, and enterprise applications closer to where people actually use the information.

Data is at the core of any functioning business. Over the past few years, unprecedented amounts of computing power and connected devices have accumulated massive amounts of data, placing enormous pressure on the already congested Internet. This large accumulation of data creates bandwidth and latency issues. Unlike traditional enterprise computing, where data is generated on the client side or on the user’s computer, edge computing offers a better option for moving complex data management closer to the original data source.

From reducing internet load and latency to improving response times, security and application performance, and enabling the deep insights and data analysis that lead to better customer experiences, edge computing is a logical solution for modern businesses.

An effective way to understand edge computing concepts is with this example and explanation from Michael Clegg, vice president and general manager of embedded IoT at Supermicro. Clegg said, “By processing incoming data at the edge, less information needs to be sent to and from the cloud; it’s like opening smaller branches in more areas as the pie gets cooled on the way to distant customers.”

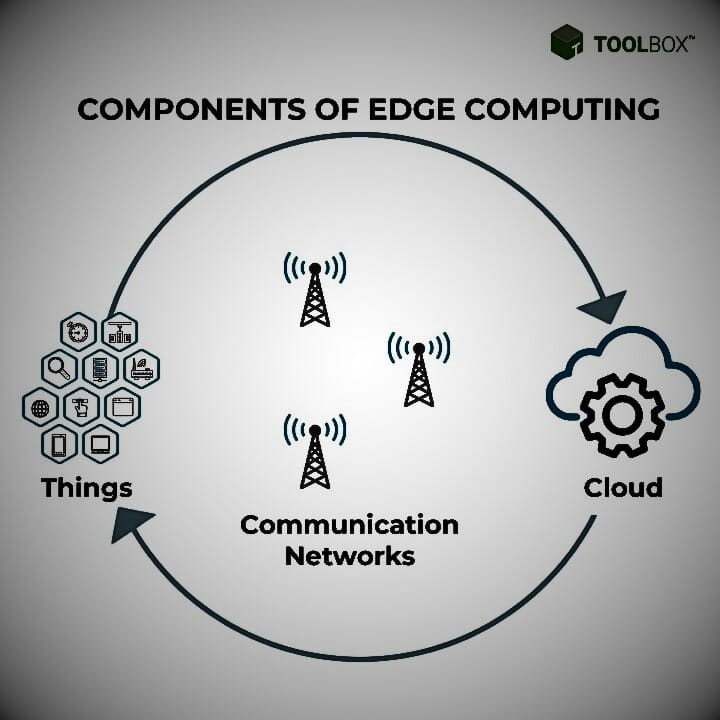

Basic Components of Edge Computing

With edge computing, location matters a lot. Access to detailed data from multiple locations enables businesses to meet future customer demands. This allows businesses to analyze critical data in real time without sending it thousands of miles away. Additionally, it is an important step forward for companies that want to build low-latency, high-performance applications.

Edge computing was gaining popularity even before IoT became widespread. Its roots can be traced to content delivery networks (CDNs), and it has since become a necessity for many businesses.

Edge computing works in coordination with three main components. Let’s take a look at the important role each of these plays in shaping your edge infrastructure.

1. Internet of things (IoT)

The use of IoT devices has increased significantly in the last few years. Additionally, the amount of bandwidth consumed is also increasing. The sheer volume of data generated by these devices overwhelms companies’ private clouds and data centers, making it difficult to manage and store all the data.

The endless possibilities of IoT are exciting many companies. IoT has been the driver of edge computing in many ways. Edge computing exists primarily in IoT environments, where data is stored in remote locations away from central data servers. When it comes to programming IoT devices efficiently, speed is what you really need. This is why IoT and edge computing are a perfect match.

Companies that deploy IoT for edge computing capabilities closer to the device gain the ability to act on new data in seconds. Companies that fail to implement edge networks will miss out on many benefits in terms of cost, efficiency, and increased connectivity.

2. Communication networks

The rise of 5G has opened the door to many exciting innovations and developments. However, the emergence of new wireless devices, including IoT, can put pressure on networks and make it difficult to manage massive flows of virtual data. Thankfully, two powerful technologies are improving our lives: 5G communications networks and edge computing. The combination of edge computing and 5G networks is driving the digital transformation of today’s modern businesses.

Enterprises can now harness the power of comprehensive data analytics by adopting large-scale distributed computing infrastructure for edge computing. But how do these two powerful forces work? Edge computing frameworks keep data closer to the source, while the faster speeds of 5G technology deliver data to its destination as quickly as possible.

Edge computing can unlock the full potential of 5G. It enables data localization and ultra-low latency, addresses security and privacy concerns, and reduces network load. Combined with 5G, the edge can deliver rich media user experiences and enable the vision of virtual reality/augmented reality (VR/AR), gamification, drone control, connected cars, and real-time collaboration.

3. Cloud computing

For a long time, centralized cloud computing has been the standard and undisputed leader in the IT industry. However, cloud and edge computing are often confused. Cloud computing, the predecessor of the edge, is a model in which large pools of storage and processing resources are hosted in central data centers. Edge computing, on the other hand, is a distributed model that is most likely to be used in applications and devices that require rapid response, real-time data processing, and critical insights.

Touted as the new big thing, edge computing is poised to become the next step in the evolution of cloud computing. Does this mean that the edge will replace the cloud? Well, that’s not likely to happen. The edge is better seen as an extension of the cloud. Gartner’s report said, “Edge computing addresses the limitations of centralized computing (such as latency, bandwidth, data privacy and autonomy) by moving processing closer to the sources of data generation, things and users.”

Together, they can provide productive solutions based on data collection and use for different organizational goals. Edge is a great addition to the cloud, and the combination of the two can provide real-time insight into various performance initiatives. IoT and web hosting workloads gain faster performance at the edge, but still require a reliable cloud backend for centralized storage.
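The division of labor between edge and cloud can be sketched as a simple placement rule. This is only an illustration: the `Workload` class, the `place` function and the assumed cloud round-trip time are hypothetical and not taken from any product or standard.

```python
from dataclasses import dataclass

# Assumed round-trip time to a central cloud region; real values vary by deployment.
CLOUD_RTT_MS = 80.0


@dataclass
class Workload:
    name: str
    max_latency_ms: float  # how quickly the workload needs a response


def place(workload: Workload) -> str:
    """Return 'edge' for workloads the cloud cannot answer in time, else 'cloud'."""
    if workload.max_latency_ms < CLOUD_RTT_MS:
        return "edge"
    return "cloud"


if __name__ == "__main__":
    for w in [Workload("collision-warning", 10), Workload("monthly-report", 60_000)]:
        print(f"{w.name}: {place(w)}")
```

A real scheduler would weigh more than latency (data volume, privacy rules, cost), but the same idea applies: time-sensitive work stays near the data source and the rest goes to the cloud backend.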

How does edge computing work?

Compared to traditional forms of computing, edge computing provides businesses and other organizations with a faster and more efficient way to process data using enterprise-grade applications. In the past, edge points generated large amounts of data that often went unused. Mobile computing and the Internet of Things (IoT) have enabled IT architectures to be decentralized, allowing enterprises to reduce bandwidth demands on cloud servers while reducing latency and adding an additional layer of security for sensitive data.

The sections that follow provide a detailed description of traditional data processing processes, the challenges faced by traditional data centers, and the core concepts of edge computing.

Traditional Data Processing Process:

- In a traditional setup, data is generated on the user’s computer or client application.

- Data is sent to a remote server over a channel such as the Internet, Intranet or LAN.

- Servers store and process data according to the traditional client/server computing model.

Traditional Data Center Challenges:

- Rapid growth in data volumes and the increasing number of Internet-connected devices are placing pressure on traditional data center infrastructure.

- Gartner research estimates that 75% of enterprise-generated data will be created outside of centralized data centers by 2025.

- This large amount of data puts pressure on the Internet, causing congestion and bottlenecks.

Edge Computing Concepts:

- Edge computing provides a solution to these challenges by reversing the flow of data.

- The concept is to move data centers closer to where the data is generated, rather than bringing data into a centralized data center.

- Data center storage and computing resources are deployed as close to the data sources as possible, ideally in the same location.

Comparison of Computing Models:

| Computing model | Where applications run |
|---|---|
| Early computing | Applications run only on one isolated computer |
| Personal computing | Applications run locally, either on the user’s device or in a data center |
| Cloud computing | Applications run in data centers and are processed via the cloud |
| Edge computing | Applications run close to the user, either on the user’s device or on the network edge |

What are the benefits of edge computing?

Edge computing is in many ways the next evolution of cloud computing as 5G networks emerge across the country and the world. Today, more businesses than ever have access to comprehensive data analytics without the IT infrastructure required in previous generations. Similarly, edge computing has many potential applications, including security and medical monitoring, self-driving cars, video conferencing, and enhancing customer experience.

Edge computing has emerged as one of the most effective solutions to the network problems associated with moving large amounts of data generated in today’s world. Here are some of the most important benefits of edge computing:

1. Reduces Latency

Latency refers to the time required to transfer data between two points on a network. A large physical distance between these two points, combined with network congestion, can cause delays. Because edge computing brings the two points closer together, latency is greatly reduced.
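To see why distance matters, here is a rough back-of-the-envelope sketch. It assumes signals travel through optical fiber at about 200,000 km/s (roughly two thirds of the speed of light in a vacuum) and ignores queuing, routing and processing delays. The function name and the example distances are illustrative assumptions, not measurements.

```python
# Rough propagation-delay estimate; ignores queuing, routing and processing time.
FIBER_SPEED_KM_PER_S = 200_000  # assumed signal speed in optical fiber (~2/3 of c)


def round_trip_ms(distance_km: float) -> float:
    """Return the round-trip propagation delay in milliseconds."""
    one_way_s = distance_km / FIBER_SPEED_KM_PER_S
    return 2 * one_way_s * 1000


if __name__ == "__main__":
    for label, km in [("distant cloud region", 2000), ("nearby edge site", 20)]:
        print(f"{label} ({km} km): {round_trip_ms(km):.2f} ms")
```

Under these assumptions, a server 2,000 km away adds about 20 ms of round-trip delay from distance alone, while an edge site 20 km away adds about 0.2 ms.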

2. Saves Bandwidth

Bandwidth refers to the rate at which data can be transferred over a network. All networks have limited bandwidth, which limits the amount of data that can be transferred and the number of devices that can share the connection. By deploying servers at the point where data is generated, edge computing lets more devices operate within the same bandwidth, because less raw data has to travel across the network.
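One common way an edge node saves bandwidth is by summarizing raw readings locally and uploading only the summary. The sketch below illustrates the idea with made-up temperature samples; the function names and the JSON payload format are assumptions chosen for the example.

```python
import json
import statistics


def summarize(readings: list[float]) -> dict:
    """Reduce a window of raw readings to a small summary at the edge."""
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": round(statistics.fmean(readings), 2),
    }


def payload_bytes(obj) -> int:
    """Size of the JSON-encoded payload in bytes."""
    return len(json.dumps(obj).encode("utf-8"))


if __name__ == "__main__":
    # Hypothetical window: 600 temperature samples collected over one minute.
    window = [20.0 + (i % 10) * 0.1 for i in range(600)]
    raw = payload_bytes(window)
    summary = payload_bytes(summarize(window))
    print(f"raw upload: {raw} bytes, summary upload: {summary} bytes")
```

Whether a summary is enough depends on the use case; some applications still need the raw data in the cloud, in which case it can be uploaded later in batches.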

3. Reduces Congestion

Although the Internet has evolved over the years, the large amount of data generated daily on billions of devices can cause high levels of congestion. Because edge computing processes and stores data locally, less traffic has to cross the Internet, and the local server can keep performing critical edge analysis even in the event of a network failure.
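Continuing to work through a network failure is often implemented with a store-and-forward buffer: results are kept locally and uploaded once the connection returns. The following is a minimal sketch of that pattern. The `StoreAndForward` class and its `send` callback are hypothetical names for this example, and a production system would normally persist the buffer to disk.

```python
from collections import deque
from typing import Callable


class StoreAndForward:
    """Buffer results locally and flush them when the uplink is available."""

    def __init__(self, send: Callable[[dict], bool], max_items: int = 1000):
        self._send = send  # returns True when the upload succeeded
        self._buffer = deque(maxlen=max_items)  # oldest items are dropped when full

    def record(self, item: dict) -> None:
        """Store the item locally first, then try to drain the buffer."""
        self._buffer.append(item)
        self.flush()

    def flush(self) -> int:
        """Send buffered items in order and stop at the first failure."""
        sent = 0
        while self._buffer:
            if not self._send(self._buffer[0]):
                break
            self._buffer.popleft()
            sent += 1
        return sent

    @property
    def pending(self) -> int:
        """Number of items still waiting to be uploaded."""
        return len(self._buffer)


if __name__ == "__main__":
    uplink = {"up": False}
    queue = StoreAndForward(lambda item: uplink["up"])
    queue.record({"reading": 21.5})
    print("pending while offline:", queue.pending)
    uplink["up"] = True
    queue.flush()
    print("pending after reconnect:", queue.pending)
```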

Drawbacks of Edge Computing

Although edge computing has many benefits, it is still a fairly new technology and far from foolproof. Here are some of the most significant drawbacks of edge computing:

1. Establishment Cost

Implementing edge infrastructure in your organization can be complex and expensive. It requires a clear scope and objective before it can be implemented, and also requires additional tools and resources to function.

2. Incomplete data

Edge computing can only handle a partial set of information that needs to be explicitly defined during implementation. Due to this, businesses may lose valuable data and information.

3. Security

Because edge computing is a distributed system, it can be difficult to ensure proper security. Processing data outside your network comes with risks. Adding new IoT devices can also increase opportunities for attackers to compromise your devices.

Best Examples and Use Cases

1) Smart Home Devices:

Implementation: Edge computing is effectively leveraged in smart home devices.

Scenario: In a smart home, multiple IoT devices collect data throughout the home.

Data Processing: Traditionally, the data is sent to a remote server for storage and processing.

Challenge: This centralized architecture can cause problems during network outages.

Edge Computing Solution: Edge computing brings data storage and processing closer to the smart home.

Benefits: Reduce backhaul costs and latency and ensure continuous operation even when the network is down.

2) Cloud Gaming Industry:

Applications: Edge computing is being adopted in the cloud gaming sector.

Objective: Cloud gaming companies aim to keep their servers as close to gamers as possible.

Benefits: This approach reduces lag, improves the overall gaming experience, and provides gamers with an immersive gameplay environment.

Top 10 Best Edge Computing Practices to Follow in 2024

Edge computing has fundamentally redefined the way businesses operate today. It allows businesses to move towards digital transformation. IDC’s October 2019 report predicted that by 2023, more than 50% of new infrastructure deployments would be in increasingly critical edge locations rather than enterprise data centers, up from an estimated less than 10% at the time of the report.

However, understanding when, where, why and how to deploy edge computing can be difficult. Here, we’ll help you learn how to accelerate your edge-enabled digital transformation efforts and drive tangible business value in terms of operational efficiency, ease of maintenance, opportunities for deeper insights, and more. Here are 10 best practices to follow.

1. Assign clear ownership

Before starting an edge computing project, it is important to determine whether each party involved is aligned with the end goal. Edge computing involves three groups. The first is information technology (IT), which is responsible for managing information processing. Next comes communication technology (CT), which is responsible for processing and transmitting information.

Finally, you need operational technology (OT), which is the technology responsible for managing and monitoring the hardware and software on your client endpoints. The challenge here is to foster collaboration and cooperation between these actors. In this case, it is important to break down silos, as one party may not be able to understand the requirements or fulfill the obligations of the other party.

2. Training of key personnel

To understand edge computing in detail, all three parties mentioned above must know how to implement the process. These three parties must not only be responsible for implementation together, but also collaborate to develop the long-term strategy, vision, budget plan, and overall course of action to support edge computing resources. Recruit skilled employees from inside and outside your organization to build the right team with clearly defined objectives and outcomes. These teams are the building blocks of your edge project, from setting up operations to maintaining efficiency and making sure everything runs smoothly.

3. Deploy the Edge as an extension of the cloud

Contrary to popular belief, edge and cloud are not competing against each other for the top spot. Instead, you can deploy the edge as a complement to the cloud. Along with the cloud, edge computing accelerates organizations’ digital transformation efforts. Implementing Edge alone is not ideal. Implementing Edge and Cloud together can effectively scale your business operations. The combination of edge computing and cloud computing can yield positive results, especially for large-scale digital transformation.

4. Understand the data analysis situation and project environment

The flow of data from various sources, such as IoT, sensors, applications, and devices, is increasing rapidly. That’s why you need to quickly analyze data to scale projects and improve the customer experience. This is especially true for facilities in remote or austere locations with poor connectivity and poor infrastructure. When choosing a platform, you should target one that offers simplified security and less downtime.

5. Work with trusted partners

Of course, it is paramount to partner with a vendor with a proven multi-cloud platform portfolio and a wide range of services aimed at increasing scalability, improving performance, and enhancing security in edge deployments. Another good practice is to ask the vendor important questions about security, performance, scale, engineering team costs, and actual ROI. It’s also perfectly fine to ask your product vendor for a quick demonstration of their security features and controls.

6. Address security concerns

Special attention should be paid to security. Companies need to integrate edge security into their overall cybersecurity strategy. Establishing corporate security practices alone is not enough, nor can you rely on patch management solutions every time an error is found. A smart strategy can help create a safe and clutter-free environment. When considering edge computing security, you need the same level of security and service visibility at every edge location as you have in your central data center. Start by adopting security best practices like multi-factor authentication, malware protection, endpoint security, and end-user training.

7. Think about zero trust

The risk of cyber attacks, including ransomware, is a serious concern for edge owners and operators, particularly due to the decentralized nature of their architecture. When looking for ways to reduce the risk of a breach, consider the zero trust model. Edge locations are a natural fit for the zero trust security model. In addition to protecting edge resources from various cyber attacks and threats, enterprises need to implement encryption of data in transit and at rest.
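One building block of zero trust is that every message is authenticated, no matter which network it arrives from. As a minimal sketch, the example below uses an HMAC from Python's standard library to check that a message really comes from a device holding a shared key. The key and message shown are hypothetical. Note that an HMAC provides integrity and authenticity, not confidentiality, so encryption in transit (typically TLS) would still be needed alongside it.

```python
import hashlib
import hmac


def sign(key: bytes, message: bytes) -> str:
    """Return a hex-encoded HMAC-SHA256 tag for the message."""
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def verify(key: bytes, message: bytes, signature: str) -> bool:
    """Check the tag using a constant-time comparison."""
    expected = sign(key, message)
    return hmac.compare_digest(expected, signature)


if __name__ == "__main__":
    # Hypothetical per-device key; real keys should be provisioned and stored securely.
    device_key = b"example-device-key"
    message = b'{"sensor": "door-1", "state": "open"}'
    tag = sign(device_key, message)
    print("genuine message accepted:", verify(device_key, message, tag))
    print("tampered message accepted:", verify(device_key, b"tampered", tag))
```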

8. Establish Architecture and Design

When making design-related decisions, it’s best to consider some existing use cases and take the time to articulate them. Not all businesses have the same needs, goals, and budgets. It is important to remember that the use cases mentioned affect the overall architecture and design of your edge computing environment. Another great option is to invest in technology that works from anywhere: on-premises, in the cloud, or at the edge. Containers and Kubernetes are examples of lightweight application technologies that facilitate application development from the cloud to the edge.

9. Use a cloud-native programming approach

Cloud-native approaches are often used in distributed computing environments to address problems caused by incompatible development platforms and security frameworks. This is best done by clustering and containerizing the workload around a set of microservices. Use APIs to support interoperability and provide new services that were not previously supported.

10. Consider service level agreements, compliance and support

Finally, it is important to check service level agreements (SLAs) and compliance beforehand. In today’s fast-paced business world, slowdowns and downtime can be devastating to your business. All collected data and information must be protected from falling into the hands of malicious parties. Therefore, it is important to consider everything from maintenance to flexibility, security, scalability, and stability. Additionally, it is important to ensure that your edge computing environment is robust enough to withstand technological changes and simple enough to upgrade over time.
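When comparing SLAs, it helps to translate an availability percentage into the downtime it actually permits. The short calculation below does that for a 365-day year; the function name and the sample percentages are illustrative, and real SLAs define their own measurement windows and exclusions.

```python
HOURS_PER_YEAR = 365 * 24  # 8760 hours, ignoring leap years


def allowed_downtime_hours(availability_percent: float) -> float:
    """Return the yearly downtime permitted by an availability target."""
    return HOURS_PER_YEAR * (1 - availability_percent / 100)


if __name__ == "__main__":
    for target in (99.0, 99.9, 99.99):
        hours = allowed_downtime_hours(target)
        print(f"{target}% availability allows about {hours:.2f} hours of downtime per year")
```

For example, a 99.9% target allows roughly 8.76 hours of downtime per year, while 99.99% allows under an hour.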

FAQs

1) How does edge computing work?

Edge computing works by processing data where it is needed, closer to the devices and people using it. This means that data on user devices, IoT gadgets, etc. is analyzed and decisions are taken immediately.

2) What is an example of edge computing?

An example of edge computing can be found in employee safety and security. Data from on-site cameras, security devices and sensors is processed to prevent unauthorized access to the site and monitor employee compliance with security policies.

3) What is edge computing vs. cloud computing?

Edge computing processes time-sensitive data, while cloud computing processes data that is not time-sensitive.

4) Where is edge computing used?

Edge computing is used in a wide variety of applications, including IoT devices, self-driving cars, industrial automation, and even worker safety on construction sites. It’s all about processing data closer to where it is needed to make faster and more efficient decisions.

