Generative AI is a type of artificial intelligence technology that can generate many different types of content, including text, images, audio, and synthetic data. The recent buzz about generative AI is driven by the simplicity of new user interfaces that allow you to create high-quality text, graphics, and video in seconds.

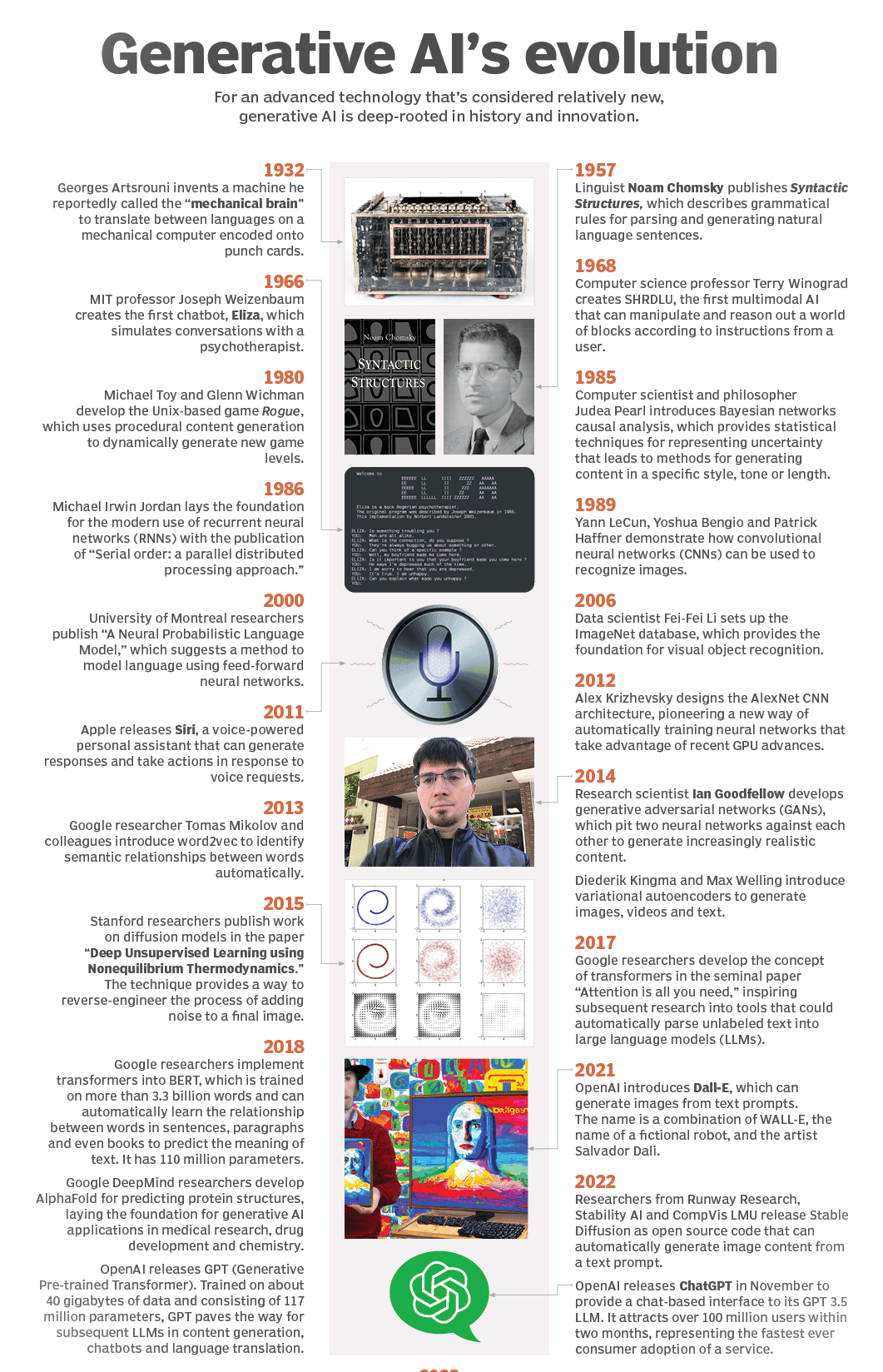

Note that this technology is not completely new. Generative AI first appeared in chatbots in the 1960s. However, it was not until 2014, with the introduction of generative adversarial networks (GANs), a type of machine learning algorithm, that generative AI could create convincingly authentic images, videos, and audio of real people.

On the one hand, this new capability has opened up opportunities such as better movie dubbing and richer educational content. On the other hand, it has raised concerns about deepfakes (digitally forged images and videos) and harmful cybersecurity attacks on companies, including fraudulent requests that realistically impersonate an employee’s boss.

Two recent developments, detailed below, have played a key role in bringing generative AI into the mainstream: transformers and the breakthrough language models they made possible. Transformers are a type of machine learning architecture that lets researchers train ever-larger models without having to label all of the data in advance. New models can therefore be trained on billions of pages of text, yielding answers with more depth. Transformers are also built around a mechanism called attention, which lets models track the connections between words across pages, chapters, and entire books rather than just within individual sentences. And not just words: transformers can use this ability to track connections to analyze code, proteins, chemicals, and DNA.
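To make the idea of attention more concrete, here is a minimal sketch of scaled dot-product attention, the core operation inside a transformer, written in plain Python. It is an illustration under simplifying assumptions, not a production implementation: the toy two-dimensional "word" vectors are made up, and real transformers first pass each token through learned query, key, and value projections and run many attention heads in parallel, all of which is omitted here.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors.

    For each query: score every key with a dot product scaled by sqrt(d),
    turn the scores into weights with softmax, and return the weighted
    sum of the value vectors.
    """
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Three toy "word" vectors. In self-attention, queries, keys, and values all
# come from the same sequence (here used directly, without learned projections).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, w = attention(tokens, tokens, tokens)
print(w[0])  # how strongly the first token attends to each of the three tokens
```

Each row of weights sums to 1 and says how strongly one token attends to every token in the sequence. Because the first and third toy vectors overlap, the first token splits most of its attention between itself and the third token rather than the second.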

Rapid advances in so-called large language models (LLMs), models with billions or even trillions of parameters, have opened a new era in which generative AI models can write engaging text, paint photorealistic images, and even create somewhat entertaining sitcoms on the fly. In addition, innovations in multimodal AI let teams generate content across multiple types of media, including text, graphics, and video. This is the basis for tools like Dall-E that automatically create images from a text description or generate text captions from images.

How does generative AI work?

Generative AI starts with a prompt, which can take the form of text, an image, a video, a design, musical notes, or any input that the AI system can process. Various AI algorithms then return new content in response to the prompt. That content can include essays, solutions to problems, or realistic fakes created from pictures or audio of a person.

Early versions of generative AI required submitting data through an API or some other complicated process. Developers had to familiarize themselves with specialized tools and write applications in languages such as Python.

Today, pioneers in generative AI are developing better user experiences that let you describe a request in plain language. After an initial response, you can also customize the results with feedback about the style, tone, and other elements you want the generated content to reflect.
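The prompt-in, content-out loop described above can be illustrated with a deliberately tiny generative model. The sketch below is a word-level bigram model in plain Python: it learns which word tends to follow which in a small training text, then extends a prompt one word at a time. It is a toy chosen for readability under obvious simplifying assumptions; modern LLMs use neural networks over subword tokens, but the basic pattern of repeatedly predicting the next piece of content from what came before is similar.

```python
import random
from collections import defaultdict


def train_bigrams(corpus):
    """Record, for every word, the words that followed it in the training text."""
    followers = defaultdict(list)
    words = corpus.split()
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return followers


def generate(followers, prompt, max_words=8, seed=0):
    """Extend the prompt one word at a time by sampling a learned follower."""
    rng = random.Random(seed)
    output = prompt.split()
    while len(output) < max_words:
        candidates = followers.get(output[-1])
        if not candidates:
            break  # no learned continuation for the last word
        output.append(rng.choice(candidates))
    return " ".join(output)


corpus = "the cat sat on the mat and the cat ran to the door"
model = train_bigrams(corpus)
print(generate(model, "the cat"))
```

Changing the prompt or the `seed` yields a different continuation, loosely mirroring how a user iterates on a request until the output fits.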

What are generative AI models?

Generative AI models combine various AI algorithms to represent and process content. For example, to generate text, various natural language processing techniques transform raw characters (letters, punctuation, and words) into sentences, parts of speech, entities, and actions, which are then represented as vectors using multiple encoding techniques. Similarly, images are transformed into various visual elements, also expressed as vectors. One caveat is that these techniques can also encode the bias, racism, deception, and exaggeration contained in the training data.
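As a rough illustration of turning text into vectors, the sketch below tokenizes sentences and encodes them as bag-of-words count vectors in plain Python. This is a deliberately simple, assumed scheme: production models typically use subword tokenizers and learned dense embeddings rather than raw counts, but the pipeline of raw characters → tokens → vector is the same in spirit.

```python
import re


def tokenize(text):
    """Lowercase the text and split it into word and punctuation tokens."""
    return re.findall(r"[a-z0-9']+|[^\sa-z0-9']", text.lower())


def build_vocab(documents):
    """Assign each distinct token a fixed integer index, in order of first appearance."""
    vocab = {}
    for doc in documents:
        for token in tokenize(doc):
            vocab.setdefault(token, len(vocab))
    return vocab


def to_count_vector(text, vocab):
    """Bag-of-words encoding: position i holds how often vocab token i occurs."""
    vec = [0] * len(vocab)
    for token in tokenize(text):
        if token in vocab:  # tokens never seen while building the vocabulary are dropped
            vec[vocab[token]] += 1
    return vec


docs = ["The model writes text.", "The model draws images!"]
vocab = build_vocab(docs)
print(to_count_vector("The model writes the text.", vocab))  # → [2, 1, 1, 1, 1, 0, 0, 0]
```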

Once developers settle on a way to represent the world, they apply a particular neural network to generate new content in response to a query or prompt. Techniques such as GANs and variational autoencoders (VAEs), neural networks with an encoder and a decoder, are suitable for generating realistic human faces, synthetic data for AI training, or even facsimiles of particular humans.
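The VAE idea can be sketched in a few lines. An encoder maps an input to the mean and log-variance of a Gaussian in a latent space; a latent vector is then sampled with the so-called reparameterization trick, and a decoder (not shown) turns that vector back into content. The sketch below covers only the sampling step and the KL-divergence term that keeps latents close to a standard normal distribution; the encoder outputs are made-up numbers, and the neural networks themselves are omitted.

```python
import math
import random


def sample_latent(mu, log_var, rng):
    """Reparameterization trick: z = mu + sigma * eps, with eps ~ N(0, 1).

    Writing the sample this way keeps z differentiable with respect to mu and
    log_var, which is what lets a VAE train its encoder by gradient descent.
    """
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu, log_var):
    """KL divergence between N(mu, sigma^2) and N(0, 1), summed over dimensions."""
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv for m, lv in zip(mu, log_var))


rng = random.Random(42)
# Hypothetical encoder output for one input: a two-dimensional latent Gaussian.
mu, log_var = [0.5, -1.0], [0.0, -0.7]
z = sample_latent(mu, log_var, rng)
print(z, kl_to_standard_normal(mu, log_var))
```

During training, the KL term is added to a reconstruction loss from the decoder; at generation time, sampling fresh latent vectors and decoding them produces new content.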

Recent advances in transformer models such as Google’s Bidirectional Encoder Representations from Transformers (BERT), OpenAI’s GPT, and Google AlphaFold have also produced neural networks that can not only encode language, images, and proteins but also generate new content.

How Neural Networks Are Changing Generative AI

Since the early days of AI, researchers have been creating AI and other tools to generate content programmatically. Early approaches, known as rule-based systems and later as “expert systems,” used explicitly created rules to generate responses or data sets.
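A rule-based system of the kind described above can be sketched with a handful of hand-written pattern/response pairs, loosely in the style of ELIZA, the 1960s chatbot. The rules below are hypothetical examples invented for illustration; the point is that every possible response was written explicitly by a person, and nothing is learned from data.

```python
import re

# Hand-written rules: (pattern, response template). The first matching rule wins.
RULES = [
    (re.compile(r"\bi feel (.+)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bi need (.+)", re.IGNORECASE), "What would it mean to you to get {0}?"),
    (re.compile(r"\b(hello|hi)\b", re.IGNORECASE), "Hello. What would you like to talk about?"),
]
FALLBACK = "Please tell me more."


def respond(message):
    """Return the response of the first rule whose pattern matches the message."""
    for pattern, template in RULES:
        match = pattern.search(message)
        if match:
            # Strip trailing punctuation from captured text before inserting it.
            return template.format(*(g.rstrip(".!?") for g in match.groups()))
    return FALLBACK


print(respond("I feel tired today."))  # → Why do you feel tired today?
```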

Neural networks, which form the basis of much of today’s AI and machine learning, turned the problem around. Designed to mimic how the human brain works, neural networks “learn” the rules by finding patterns in existing data sets. The first neural networks, developed in the 1950s and 1960s, were limited by a lack of computational power and small data sets. It was not until the advent of big data in the mid-2000s and improvements in computer hardware that neural networks became practical for generating content. The field accelerated when researchers found a way to run neural networks in parallel on the graphics processing units (GPUs) that the computer gaming industry used to render video games. New machine learning techniques developed in the past decade, including the aforementioned generative adversarial networks and transformers, have set the stage for the recent remarkable advances in AI-generated content.
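To contrast with hand-written rules, the sketch below trains a single artificial neuron (a perceptron) in plain Python. The logical AND rule is never written into the code; the weights are adjusted from four labeled examples until the neuron reproduces it. This is the simplest possible case, chosen for illustration, and far removed from the deep networks behind modern generative AI.

```python
def train_perceptron(examples, epochs=10, lr=1.0):
    """Learn weights and a bias from labeled examples with the perceptron rule.

    Nobody writes the rule down: each mistake nudges the weights toward the
    correct answer until the examples are classified correctly.
    """
    n = len(examples[0][0])
    weights, bias = [0.0] * n, 0.0
    for _ in range(epochs):
        for inputs, target in examples:
            activation = sum(w * x for w, x in zip(weights, inputs)) + bias
            prediction = 1 if activation > 0 else 0
            error = target - prediction  # 0 when correct, +1 or -1 when wrong
            weights = [w + lr * error * x for w, x in zip(weights, inputs)]
            bias += lr * error
    return weights, bias


def predict(weights, bias, inputs):
    """Fire (return 1) when the weighted sum of inputs plus bias is positive."""
    return 1 if sum(w * x for w, x in zip(weights, inputs)) + bias > 0 else 0


# The data implicitly encodes logical AND; the neuron has to discover it.
and_data = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
w, b = train_perceptron(and_data)
print([predict(w, b, x) for x, _ in and_data])  # → [0, 0, 0, 1]
```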

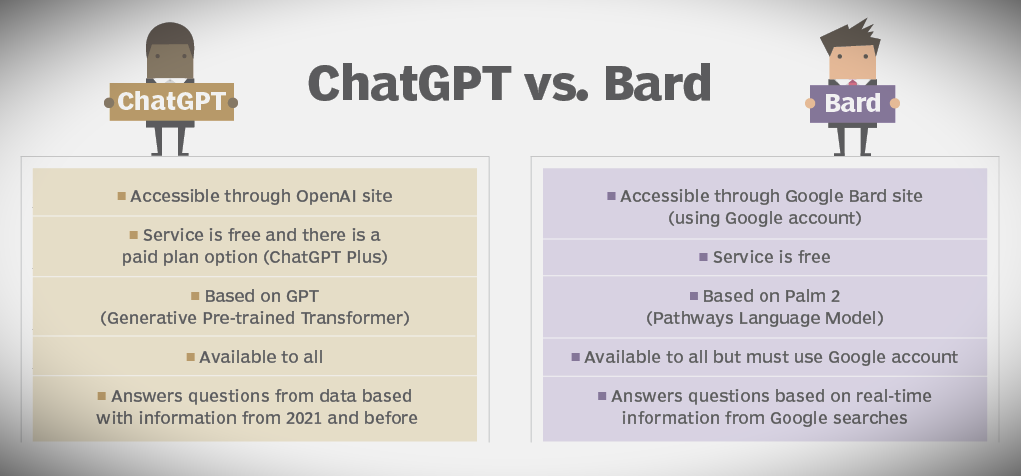

What are Dall-E, ChatGPT, and Bard?

ChatGPT, Dall-E, and Bard are popular generative AI interfaces.

Dall-E. Trained on a large data set of images and their associated text descriptions, Dall-E is an example of a multimodal AI application that identifies connections across multiple media, such as vision, text, and audio. In this case, it connects the meaning of words to visual elements. It was built using OpenAI’s GPT implementation in 2021. A second, more capable version, Dall-E 2, was released in 2022. It enables users to generate imagery in multiple styles driven by user prompts.

ChatGPT. The AI-powered chatbot that took the world by storm in November 2022 was built on OpenAI’s GPT-3.5 implementation. OpenAI provided a way to interact with the model and fine-tune its text responses through a chat interface with interactive feedback. Earlier versions of GPT were accessible only through an API. GPT-4 was released on March 14, 2023. ChatGPT incorporates the history of its conversation with a user into its results, simulating a real conversation. After the incredible popularity of the new GPT interface, Microsoft announced another significant investment in OpenAI and integrated a version of GPT into its Bing search engine.
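The way ChatGPT carries conversation history into its results can be approximated with a simple pattern: keep every turn and replay the whole transcript to the model on each request. The sketch below assumes a hypothetical `model` function that maps a prompt string to a reply; the `user:`/`assistant:` transcript format is also an assumption for illustration and is not OpenAI’s actual internal format.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class ChatSession:
    """Keeps every turn so each new request carries the whole conversation.

    `model` is a hypothetical stand-in for a text generator: any function
    that maps a prompt string to a reply string.
    """

    model: Callable[[str], str]
    history: List[Tuple[str, str]] = field(default_factory=list)

    def build_prompt(self, user_message: str) -> str:
        # Earlier turns are replayed verbatim ahead of the new message.
        lines = [f"{role}: {text}" for role, text in self.history]
        lines.append(f"user: {user_message}")
        lines.append("assistant:")
        return "\n".join(lines)

    def send(self, user_message: str) -> str:
        reply = self.model(self.build_prompt(user_message))
        self.history.append(("user", user_message))
        self.history.append(("assistant", reply))
        return reply


def echo_model(prompt: str) -> str:
    """Fake model that only reports how many lines of context it was given."""
    return f"I can see {len(prompt.splitlines())} lines of context."


chat = ChatSession(model=echo_model)
print(chat.send("Hi!"))       # → I can see 2 lines of context.
print(chat.send("And now?"))  # → I can see 4 lines of context.
```

Because each request includes all earlier turns, the model can refer back to them. It also means longer conversations produce longer prompts, which in practice are bounded by the model’s context window.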

Bard. Google was another early leader in pioneering transformer AI techniques for processing language, proteins, and other types of content. It open-sourced some of these models for researchers, but it did not release a public interface for them. Microsoft’s decision to implement GPT in Bing drove Google to rush to market Google Bard, a public-facing chatbot built on a lightweight version of its LaMDA family of large language models. Google’s stock price dropped significantly after Bard’s hasty debut, when the language model incorrectly said that the Webb telescope was the first to take pictures of a planet outside our solar system. Meanwhile, Microsoft’s ChatGPT implementation also stumbled in its early outings because of inaccurate results and erratic behavior. Google then unveiled a new version of Bard built on its most advanced LLM, PaLM 2, which allows Bard to be more efficient and visual in its responses to user queries.

What are the use cases for generative AI?

Generative AI can be applied to a wide variety of use cases to generate virtually any type of content. Thanks to cutting-edge breakthroughs like GPT, which can be tailored to suit different applications, this technology is becoming more accessible to all types of users. Generative AI use cases include:

- Implementing chatbots for customer service and technical support.

- Deploying deepfakes that mimic people or even specific individuals.

- Improving dubbing for movies and educational content in different languages.

- Writing email responses, dating profiles, resumes, and periodic reports.

- Creating photorealistic art in a particular style.

- Improving product demonstration videos.

- Suggesting new drug compounds to test.

- Designing physical products and buildings.

- Optimizing new chip designs.

- Writing music in a specific style or tone.

What are the benefits of generative AI?

Generative AI can be applied extensively across many areas of business. It can make it easier to interpret and understand existing content and to create new content automatically. Developers are exploring ways that generative AI can improve existing workflows, with an eye to adapting those workflows entirely to take advantage of the technology. Some potential benefits of implementing generative AI include:

- Automate the manual process of content creation.

- Reduce the effort required to respond to emails.

- Improve answers to specific technical questions.

- Create realistic representations of people.

- Convert complex information into a coherent narrative.

- Simplify the process of creating content in a specific style.

What are the limitations of generative AI?

Early implementations of generative AI vividly illustrate many of its limitations. Some of the challenges generative AI presents result from the specific approaches used to implement particular use cases. For example, a summary of a complex topic is easier to read than an explanation that includes various sources supporting key points. However, the readability of the summary comes at the expense of the user’s ability to vet where the information comes from.

Here are some limitations to consider when implementing or using generative AI apps:

- It is not always possible to identify the source of the content.

- Bias in the original source may be difficult to assess.

- Realistic content makes it difficult to identify misinformation.

- It may be difficult to understand how to adjust to new circumstances.

- The results may conceal prejudice, bigotry, and hatred.

What are the concerns surrounding generative AI?

The rise of generative AI also raises many concerns. These relate to the quality of results, the potential for misuse and abuse, and the potential to disrupt existing business models. Here are some specific types of problems posed by the current state of generative AI:

- It can provide inaccurate and misleading information.

- It is more difficult to trust information without knowing its source.

- It can promote new kinds of plagiarism that ignore the rights of content creators and artists of original content.

- It might disrupt existing business models built around search engine optimization and advertising.

- It makes it easier to generate fake news.

- It makes it easier to claim that real photographic evidence of wrongdoing is just an AI-generated fake.

- It could impersonate people for more effective social engineering cyberattacks.

What are some examples of generative AI tools?

Generative AI tools exist for a variety of modalities, including text, images, music, code, and audio. Some common AI content generators to consider include:

- Text generation tools include GPT, Jasper, AI-Writer, and Lex.

- Image generation tools include Dall-E 2, Midjourney, and Stable Diffusion.

- Music generation tools include Amper, Dadabots, and MuseNet.

- Code generation tools include CodeStarter, Codex, GitHub Copilot, and Tabnine.

- Voice synthesis tools include Descript, Listnr, and Podcast.ai.

- AI chip design tool companies include Synopsys, Cadence, Google, and Nvidia.

Use cases for generative AI by industry

Emerging generative AI technologies have sometimes been described as general-purpose technologies, akin to the steam engine, electricity, and computing, because they can profoundly affect many industries and use cases. It is important to keep in mind that, as with previous general-purpose technologies, it often took decades for people to find the best way to organize workflows to take advantage of the new approach, rather than simply speeding up portions of existing workflows. Here are some of the ways generative AI applications could affect different industries:

- Financial services can watch transactions in the context of an individual’s history to build better fraud detection systems.

- Law firms can use generative AI to design and interpret contracts, analyze evidence, and propose arguments.

- Manufacturers can use generative AI to combine data from cameras, X-rays, and other metrics to more accurately and economically identify defective parts and root causes.

- Film and media companies can use generative AI to more economically produce content and translate it into other languages with actors’ own voices.

- The healthcare industry can use generative AI to more efficiently identify promising drug candidates.

- Architecture companies can use generative AI to rapidly design and customize prototypes.

- Game companies can use generative AI to design game content and levels.
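As a rough illustration of judging transactions against an individual’s own history, the sketch below flags amounts using a per-customer z-score. To be clear, this is a simple statistical baseline swapped in for readability, not a generative AI model; the customer histories and the threshold of 3 standard deviations are made-up assumptions.

```python
from statistics import mean, stdev


def is_unusual(history, amount, threshold=3.0):
    """Flag an amount that sits far outside this customer's own past spending.

    Compares against the individual's history rather than a global limit, so
    the same amount can be normal for one customer and odd for another.
    """
    if len(history) < 2:
        return False  # not enough history to judge
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return amount != mu
    return abs(amount - mu) / sigma > threshold


alice = [42.0, 38.5, 51.0, 45.2, 40.0]
bob = [900.0, 1200.0, 1050.0, 980.0, 1100.0]
print(is_unusual(alice, 1000.0), is_unusual(bob, 1000.0))  # → True False
```

The same $1,000 purchase is flagged for one customer and not the other, which is the point of conditioning on each individual’s history.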


Generative AI vs. AI

Generative AI focuses on creating new and original content, chat responses, designs, synthetic data, and even deepfakes. It is particularly valuable in creative fields and for novel problem-solving because it can autonomously generate many types of new outputs. As noted above, generative AI relies on neural network techniques such as transformers, GANs, and VAEs. Other kinds of AI, by contrast, typically use techniques such as convolutional neural networks, recurrent neural networks, and reinforcement learning.

Generative AI often starts with a prompt that lets a user or data source submit a starting query or data set to guide content generation. This can be an iterative process to explore content variations. Traditional AI algorithms, on the other hand, often process new data and return a simple result according to a predefined set of rules. Both approaches have strengths and weaknesses depending on the problem being solved. Generative AI is well suited for tasks that involve NLP or call for the creation of new content, while traditional algorithms are more effective for tasks that involve rule-based processing and predetermined outcomes.

Advances in other neural network techniques and architectures have also expanded generative AI capabilities. These include VAEs, long short-term memory (LSTM) networks, transformers, diffusion models, and neural radiance fields (NeRFs).

Best practices for using generative AI

Best practices for using generative AI vary depending on the modality, workflow, and desired goals. That said, it is important to consider essential factors such as accuracy, transparency, and ease of use when working with generative AI. The following practices help achieve these goals:

- Clearly label all generative AI content for users and consumers.

- Verify the accuracy of generated content using primary sources where applicable.

- Consider how bias might get woven into generated AI results.

- Double-check the quality of AI-generated code and content using other tools.

- Learn the strengths and limitations of each generative AI tool.

- Understand and avoid common failure modes and their consequences.

What is the main goal of generative AI?

ChatGPT’s incredible depth and ease of use have fueled the widespread adoption of generative AI. However, the rapid adoption of generative AI applications has also exposed some of the difficulties of deploying this technology safely and responsibly. These early implementation issues have, in turn, inspired research into better tools for detecting AI-generated text, images, and video.

Indeed, the popularity of generative AI tools such as ChatGPT, Midjourney, Stable Diffusion, and Bard has also fueled an endless variety of training courses at all levels of expertise. Many are aimed at helping developers create AI applications. Others focus on business users looking to apply the new technology across the enterprise. At some point, industry and society will also build better tools for tracking the provenance of information to create more trustworthy AI.

Generative AI will continue to evolve, making advances in translation, drug discovery, anomaly detection, and the generation of new content, from text and video to fashion design and music. As good as these new one-off tools are, the biggest future impact of generative AI will come from integrating these capabilities directly into the tools we already use. Grammar checkers, for example, will keep getting better. Design tools will embed more useful recommendations directly into our workflows. Training tools will automatically identify best practices in one part of an organization to help train other employees more effectively. These are just some of the ways generative AI will change what we do in the near term. It is impossible to say what impact generative AI will have further in the future. But as we continue to harness these tools to automate and augment human tasks, we will inevitably have to reevaluate the nature and value of human expertise.

Many companies also customize generative AI on their own data to help improve branding and communication. Programming teams use generative AI to enforce company-specific best practices for writing and formatting more readable and consistent code. Vendors integrate generative AI capabilities into their other tools to streamline content generation workflows. This drives innovation in how these new capabilities can improve productivity.

Generative AI can also play a role in various aspects of data processing, transformation, labeling, and vetting as part of augmented analytics workflows. Semantic web applications can use generative AI to automatically map internal taxonomies that describe job skills to the different taxonomies used on skills training and recruitment sites. Similarly, business teams use these models to transform and label third-party data for more sophisticated risk assessment and opportunity analysis. In the future, generative AI models will be extended to support 3D modeling, product design, drug development, digital twins, supply chains, and business processes. This will make it easier to generate new product ideas, experiment with different organizational models, and explore various business ideas.
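The taxonomy-mapping idea can be sketched as a nearest-match search between two label lists. In the sketch below, word-overlap (Jaccard) similarity stands in for the generative model or vector embeddings a real system would more likely use, and the skill labels on both sides are hypothetical.

```python
import re


def tokens(label):
    """Lowercased set of words in a skill label."""
    return set(re.findall(r"[a-z0-9+#]+", label.lower()))


def jaccard(a, b):
    """Overlap between two token sets: |a ∩ b| / |a ∪ b|."""
    return len(a & b) / len(a | b) if a | b else 0.0


def map_taxonomy(internal, external):
    """Map each internal label to the most similar external label."""
    return {
        label: max(external, key=lambda ext: jaccard(tokens(label), tokens(ext)))
        for label in internal
    }


internal_skills = ["Python data analysis", "Cloud infrastructure (AWS)", "Customer support"]
external_skills = [
    "Data Analysis with Python",
    "AWS Cloud Engineering",
    "Customer Service and Support",
    "Graphic Design",
]
for internal, external in map_taxonomy(internal_skills, external_skills).items():
    print(f"{internal} -> {external}")
```

Swapping `jaccard` for a similarity score between embedding vectors would let the mapping match labels that share meaning but not wording, which simple word overlap cannot do.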

What are the modern generative AI technologies?

- AgentGPT: AgentGPT is a generative artificial intelligence tool that enables users to create autonomous AI agents that can be delegated a range of tasks.

- AI Art (Artificial Intelligence Art): AI art is any form of digital art created or enhanced with AI tools.

- AI prompt: Artificial intelligence (AI) prompts are the mode of interaction between a human and an LLM that lets the model generate the intended output. This interaction can take the form of a question, text, code snippets, or examples. AI prompt engineers specialize in crafting text-based prompts or cues that language models and generative AI tools can interpret and understand at scale.

- Amazon Bedrock: Amazon Bedrock, also known as AWS Bedrock, is a machine learning platform used to build generative artificial intelligence (AI) applications on the Amazon Web Services cloud computing platform.

- Auto-GPT: Auto-GPT is an experimental, open source autonomous AI agent based on the GPT-4 language model that autonomously chains together tasks to achieve a big-picture goal set by the user.

- Autonomous artificial intelligence: Autonomous artificial intelligence is a field of AI in which systems and devices are advanced enough to operate with limited human oversight and involvement.

- Google Gemini: Google Gemini is a family of multimodal artificial intelligence (AI) large language models with capabilities in understanding language, audio, code, and video.

- Google Search Generative Experience: Google Search Generative Experience (SGE) is a set of search and interface capabilities that integrates AI-generated results into Google search engine query responses.

- Google Search Labs (GSE): GSE is an initiative from Alphabet’s Google division that previews new features and experiments for Google Search before they become publicly available.

- Graph Neural Network (GNN): Graph neural networks (GNN) are a type of neural network architecture and a deep learning technique that helps users analyze graphs and make predictions based on the data described by the graph’s nodes and edges.

- Image-to-image translation: Image-to-image translation is a generative artificial intelligence (AI) technique that transforms a source image into a target image while preserving certain visual characteristics of the original image.

- Inception score: The Inception Score (IS) is a mathematical metric used to measure the quality of images created by generative AI models such as generative adversarial networks (GANs). The name comes from the Inception image classification network whose predictions are used to compute the score.

- ML knowledge graph: In the field of machine learning, a knowledge graph is a graphical representation that captures connections between different entities. It consists of nodes representing entities or concepts and edges representing relationships between those entities.

- LangChain: LangChain is an open source framework that lets software developers working with artificial intelligence (AI) and its machine learning subset combine large language models with other external components to develop LLM-powered applications.

- Masked Language Model (MLM): MLM is used to train language models in natural language processing tasks. In this approach, certain words and tokens are randomly hidden or masked within a given input, and the model is directed to predict these hidden elements using the context provided by the surrounding words.

- Q-learning: Q-learning is a reinforcement learning approach that enables a model to learn and improve over time by estimating, from the rewards it receives, which action is best to take in each situation.

- Reinforcement Learning from Human Feedback (RLHF): RLHF is a machine learning approach that combines human guidance with reinforcement learning techniques such as rewards and comparisons to train an AI agent.

- Retrieval-augmented generation: Retrieval-augmented generation (RAG) is an artificial intelligence (AI) framework that retrieves data from external knowledge sources to improve the quality of responses.

- Retrieval-augmented language model pre-training: A retrieval-augmented language model, also known as REALM or RALM, is an AI language model designed to retrieve text and then use it to perform query-based tasks.

- Semantic Network (Knowledge Graph): A semantic network is a knowledge structure that shows how concepts relate to each other and how they are interconnected. Semantic networks use AI programming to mine data, connect concepts, and draw attention to relationships.

- Variational Autoencoder (VAE): Variational Autoencoder is a generative AI algorithm that uses deep learning to generate new content, detect anomalies, and remove noise.

- Vector embedding: Vector embeddings are numerical representations that capture the relationships and meanings of words, phrases, and other data types.
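Several entries in this glossary (retrieval-augmented generation, vector embedding, AI prompt) fit together in a simple pipeline: find the passages most relevant to a question, then place them in the prompt sent to an LLM. The sketch below illustrates the RAG pattern under stated assumptions: retrieval uses plain word overlap instead of vector embeddings, the three-sentence knowledge base is drawn from facts stated earlier in this article, and the call to an actual LLM is left out.

```python
import re


def words(text):
    """Lowercased set of alphanumeric words in a piece of text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, documents, k=2):
    """Rank documents by how many query words they share and return the top k."""
    q = words(query)
    ranked = sorted(documents, key=lambda d: len(q & words(d)), reverse=True)
    return ranked[:k]


def build_rag_prompt(query, documents, k=2):
    """Prepend the retrieved passages so the model can ground its answer in them."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return f"Answer using only the context below.\nContext:\n{context}\nQuestion: {query}"


knowledge_base = [
    "GPT-4 was released on March 14, 2023.",
    "Dall-E 2 was released in 2022.",
    "Bard was later rebuilt on PaLM 2.",
]
print(build_rag_prompt("When was GPT-4 released?", knowledge_base, k=1))
```

In a fuller system, `words` and the overlap count would be replaced by vector embeddings and a similarity search, and the returned prompt would be passed to an LLM to produce the final answer.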
