OpenAI is preparing to release the next version of its generative AI model, GPT-5. The new large language model is expected to be more capable than GPT-4, which was introduced last year. OpenAI CEO Sam Altman hinted at several releases this year on a podcast with Lex Fridman. He did not go into details or name any models, saying nothing had been decided yet, but he confirmed that OpenAI "will be releasing some great new models this year."

GPT-5 is expected to be released around the middle of the year, possibly in the summer, Business Insider (BI) reports, citing people familiar with OpenAI's plans. The date has not been officially confirmed, however, and could change depending on various factors.

The report also says that OpenAI has been demonstrating GPT-5 to some enterprise customers, and that its enhancements could offer a significantly better experience than previous versions.

On training and safety, the report states that GPT-5 is still being trained. Training will be followed by extensive safety testing, including internal "red teaming": a rigorous process of probing the model to identify and fix potential issues before public release.

Enterprise sales are expected to be OpenAI’s main source of revenue and could benefit significantly from the release of GPT-5. The updated model is expected to further strengthen the value proposition for enterprise customers.

It remains to be seen how OpenAI will handle access to and use of its training data, much of which is protected by copyright. OpenAI, along with other technology companies, is at the center of the regulatory debate over using such data to train AI models.

What is GPT-5?

Generative Pre-trained Transformers (GPTs) are a family of large language models (LLMs) developed by OpenAI that have had a major impact on the fields of machine learning and AI.

GPT is essentially designed to understand and generate human-like text based on received input. These models are trained on huge datasets. The GPT family of models has helped popularize LLM-based applications and set new standards for what is possible in natural language processing, generation, and more. GPT-5 represents the next iteration of the GPT series.

When will GPT-5 be launched?

In a conversation between Sam Altman and Bill Gates in January 2024, Altman confirmed that work on GPT-5 had begun but did not give a release date.

Looking at what happened with GPT-4 can help us estimate when GPT-5 will launch. Although OpenAI released GPT-4 just a few months after ChatGPT, GPT-4's development cycle, including training, development, and testing, took more than two years.

Therefore, if GPT-5 follows a similar schedule, its launch could slip to late 2025. Even if that launch is still some way off, OpenAI will likely keep improving GPT-4 in the meantime and, as it did with GPT-3.5, may introduce an interim update such as GPT-4.5.

What features can we expect from GPT-5?

GPT-5 may still be a year or two away, so most predictions about its capabilities are based on current trends shaped by Google and open-source AI initiatives. These developments offer valuable insights into the direction the industry is heading.

However, some of the first clues come straight from OpenAI's leadership. In his conversation with Gates, Altman emphasized that OpenAI's efforts will focus on improving the model's reasoning capabilities and adding video processing.

Let's look at some of the key enhancements you can expect from GPT-5.

Parameter size

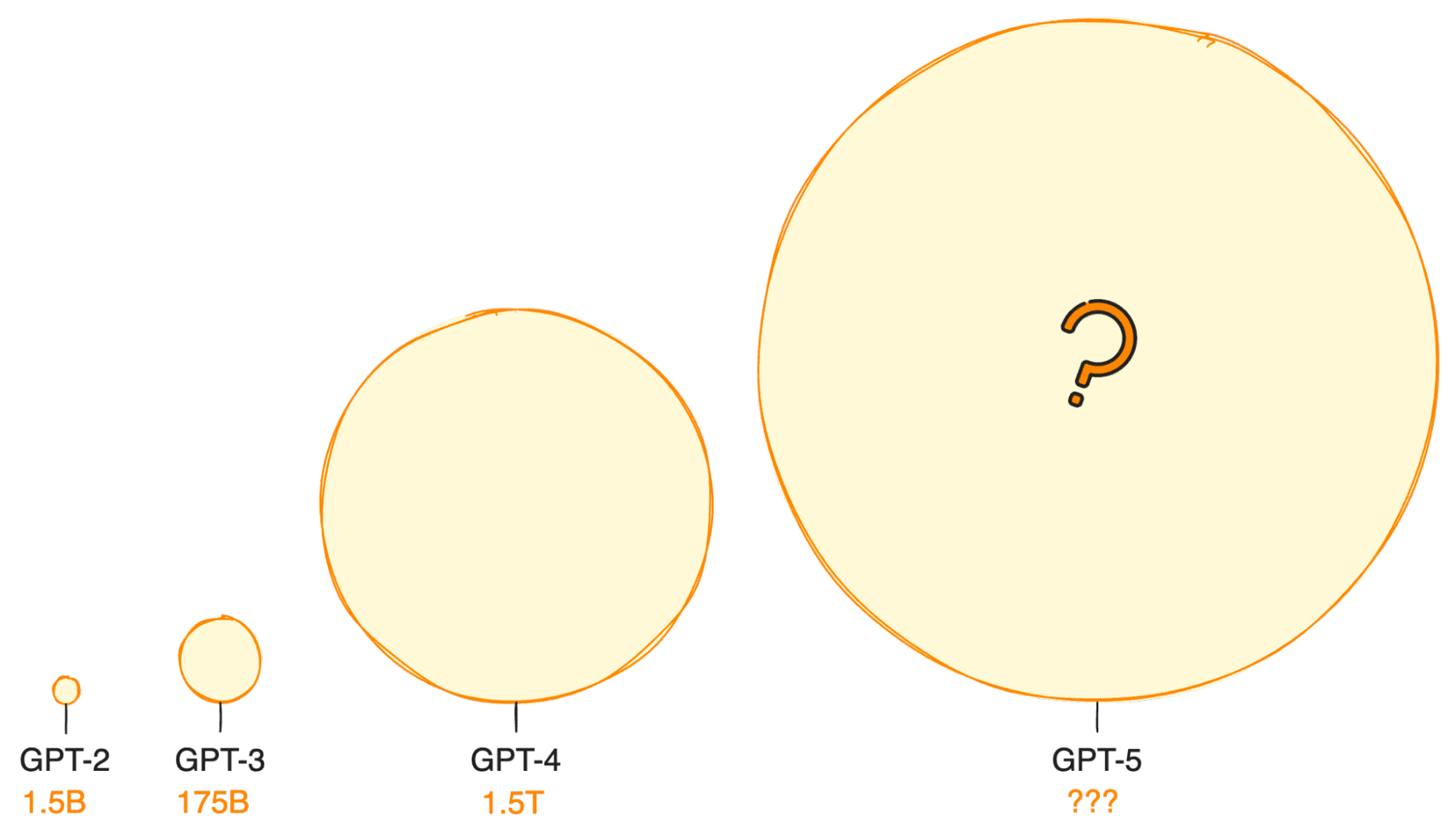

Although OpenAI has not disclosed GPT-4's exact parameter count, the trend toward larger and more capable models continues. Unofficial estimates put the figure at around 1.5 trillion parameters.

If this trajectory continues, GPT-5 could redefine the boundaries of current LLMs and deliver unprecedented scale.

Multimodal

Given that existing GPT-4 models already support audio and image capabilities, video processing is a natural next step for GPT-5. Google has already started experimenting with this feature in its Gemini models, so it may be only a matter of time before competition pushes OpenAI to follow.

GPT-5 could therefore build on GPT-4's current multimodal capabilities and add features such as video processing, changing the way we interact with AI and allowing more natural and versatile forms of input and output.

From chatbot to agent

The transition from chatbots to fully autonomous agents is another exciting frontier. Imagine being able to assign simple tasks to apps powered by GPT. If OpenAI continues to integrate third-party services, this may indeed become a reality. We are already seeing the introduction of custom GPTs, and this trend is likely to grow.

Such a capability could allow GPT-5 to connect to a variety of services and carry out tasks on your behalf without direct human supervision. For example, we could ask an autonomous agent to buy groceries based on our dietary preferences.
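To make the idea of an agent more concrete, here is a minimal sketch of the basic loop such a system might run for the grocery example. It picks a tool, calls it, and feeds the result into the next step. Everything here is hypothetical and assumed for illustration: the tool names `search_products` and `add_to_cart`, the tiny in-memory catalog, and `plan_steps`, which stands in for the language model that would actually decide which tool to call. It does not describe how OpenAI implements agents.

```python
from typing import Any, Callable

# Hypothetical in-memory catalog standing in for a real grocery service.
CATALOG: dict[str, list[str]] = {
    "vegetarian": ["tofu", "lentils", "spinach"],
    "vegan": ["tofu", "oat milk", "lentils"],
}


def search_products(diet: str) -> list[str]:
    """Hypothetical tool: look up products matching a dietary preference."""
    return CATALOG.get(diet, [])


def add_to_cart(items: list[str]) -> str:
    """Hypothetical tool: pretend to place the items in a shopping cart."""
    return f"added {len(items)} items"


# Registry of the tools the agent is allowed to call, keyed by name.
TOOLS: dict[str, Callable[[Any], Any]] = {
    "search_products": search_products,
    "add_to_cart": add_to_cart,
}


def plan_steps(goal: str) -> list[str]:
    """Stand-in for the model: a real agent would ask the LLM which tool to use next.
    This stub ignores the goal and always returns the same two-step plan."""
    return ["search_products", "add_to_cart"]


def run_agent(goal: str, diet: str) -> list[tuple[str, Any]]:
    """Run each planned tool in order, passing each result to the next tool."""
    result: Any = diet
    log: list[tuple[str, Any]] = []
    for name in plan_steps(goal):
        result = TOOLS[name](result)
        log.append((name, result))
    return log


if __name__ == "__main__":
    for step in run_agent("buy groceries", "vegan"):
        print(step)
```

In a real agent, `plan_steps` would be replaced by repeated calls to the model, and the tools by authenticated requests to external services, which is where supervision and safety checks would matter most.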

Better accuracy

With each iteration, GPT models have become more accurate and more reliable at understanding context and generating appropriate responses. Each new generation has also been trained on a larger and more diverse dataset.

According to OpenAI, GPT-4 is 40% more likely than GPT-3.5 to produce factual responses on its internal evaluations, and GPT-5 is expected to continue this trend, reducing errors and increasing interaction fidelity.

Enhanced context window

One limitation of current models is the size of the context window, meaning the amount of text the model can take into account when generating a response. GPT-5 may come with a larger context window, allowing it to understand and contextualize longer documents and conversations and to produce more relevant output.
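One common way applications work around a limited context window is to keep only the most recent messages that fit within a token budget. The minimal sketch below illustrates that idea. The function names and the budget are assumptions for illustration. Tokens are approximated here by whitespace-separated words, but real GPT models use subword tokenizers, so actual counts differ.

```python
def count_tokens(text: str) -> int:
    """Rough approximation: one token per whitespace-separated word.
    Real GPT tokenizers split text into subwords, so true counts are usually higher."""
    return len(text.split())


def fit_to_context(messages: list[str], max_tokens: int) -> list[str]:
    """Keep the most recent messages whose combined size fits in max_tokens.

    Walks the history from newest to oldest and stops at the first message
    that would exceed the budget, so older context is dropped first.
    """
    kept: list[str] = []
    used = 0
    for message in reversed(messages):
        cost = count_tokens(message)
        if used + cost > max_tokens:
            break
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


if __name__ == "__main__":
    history = ["hello there", "tell me about GPT-4", "and what about GPT-5?"]
    print(fit_to_context(history, max_tokens=8))
```

With a budget of 8, the oldest message no longer fits and is dropped. A larger context window simply raises `max_tokens`, so less of the conversation has to be discarded.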

Cost-effective use of the OpenAI API

As new models emerge, the cost of using older ones through the OpenAI API may also fall. The launch of GPT-5 could make GPT-4 and GPT-3.5 cheaper and more accessible.
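OpenAI API usage is billed per token, so the effect of a price cut is simple arithmetic. The minimal sketch below assumes separate prices for input and output tokens, quoted per 1,000 tokens. The `Pricing` class and all prices in it are hypothetical placeholders, not OpenAI's actual rates.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """Hypothetical price sheet in US dollars per 1,000 tokens (placeholder values only)."""
    input_per_1k: float
    output_per_1k: float


def request_cost(pricing: Pricing, input_tokens: int, output_tokens: int) -> float:
    """Cost of a single request: input and output tokens are billed at their own rates."""
    return (input_tokens / 1000) * pricing.input_per_1k + (output_tokens / 1000) * pricing.output_per_1k


def monthly_cost(pricing: Pricing, input_tokens: int, output_tokens: int, requests: int) -> float:
    """Cost of sending the same kind of request many times in a month."""
    return requests * request_cost(pricing, input_tokens, output_tokens)


if __name__ == "__main__":
    # Placeholder prices, chosen only to show how a price drop scales with volume.
    before = Pricing(input_per_1k=0.04, output_per_1k=0.08)
    after = Pricing(input_per_1k=0.01, output_per_1k=0.02)
    for label, pricing in (("before", before), ("after", after)):
        cost = monthly_cost(pricing, input_tokens=2000, output_tokens=500, requests=10_000)
        print(f"{label}: ${cost:,.2f} per month")
```

With these placeholder numbers, a 75% price cut reduces the monthly bill from $1,200.00 to $300.00. The same formula applies to whatever rates OpenAI actually publishes.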

This democratization of access could lead to a wave of innovation, allowing a wider range of developers and organizations to integrate advanced AI into their applications.

As GPT models become cheaper and more accessible, they may be used more widely for complex tasks such as coding and research. If you haven't tried OpenAI's API yet, we highly recommend following DataCamp's OpenAI API guide to give it a try.

How to use GPT-5

OpenAI has not announced a release date for GPT-5 yet, but it is safe to say that the model is in development. (OpenAI had been working on GPT-4 for at least two years before it was officially released.) It's also possible that OpenAI will release an intermediate GPT-4.5 before GPT-5, as it did with GPT-3.5.

OpenAI’s pricing is structured so that ChatGPT Plus customers can use the latest models at $20 per month, while free customers can use older versions. You can select the model you want to work with from the ChatGPT drop-down menu. What GPT-4 looks like today:

Conclusion

While we await specific details about GPT-5, this discussion is based on historical facts, general trends in AI, and several hints the OpenAI team appears to have shared. It is important to remember that these are educated guesses drawn from limited signals.

History suggests that in the medium term, we will likely see incremental updates like GPT-4.5 before GPT-5 arrives.

Regardless of the timeline, the evolution of the GPT series continues to captivate the imagination, promising a future where the possibilities of AI are limited only by our ability to imagine its applications.