What is artificial intelligence?

Definitions of artificial intelligence vary from article to article, but John McCarthy described it as the science and engineering of making intelligent machines, especially intelligent computer programs.

Artificial intelligence (AI) is the simulation of human intelligence processes by machines, especially computer systems. Some typical applications include natural language processing (NLP), speech recognition, and machine vision.

What is the application of artificial intelligence?

Many real-world applications of AI exist today. Here are some of the most common uses:

- Speech recognition: Also known as automatic speech recognition (ASR), computer speech recognition, or speech-to-text, this capability uses natural language processing (NLP) to convert human speech into written text. Many mobile devices build speech recognition into their systems for voice search, as Siri does.

- Computer vision: This technology enables computer systems to derive meaningful information from digital images, video, and other visual input. Computer vision is used for photo tagging on social media, and other examples include radiology imaging in medicine and self-driving cars.

- Recommendation Engine: AI algorithms use historical consumer behavior data to help discover data trends that can be used to develop more effective cross-selling strategies. It is used to recommend relevant add-ons to customers during the checkout process at an online retailer.

- Automated stock trading: AI-powered high-frequency trading platforms designed to optimize stock portfolios execute thousands or even millions of trades per day without human intervention.
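The recommendation engine item above can be made concrete with a minimal sketch. It assumes a hypothetical list of past orders and simply counts which items were bought together; the function names and data are illustrative only, and production recommenders typically use far richer models.

```python
from collections import Counter
from itertools import combinations


def build_co_purchase_counts(orders):
    """Count how often each pair of items appears in the same order."""
    counts = {}
    for order in orders:
        for a, b in combinations(sorted(set(order)), 2):
            counts.setdefault(a, Counter())[b] += 1
            counts.setdefault(b, Counter())[a] += 1
    return counts


def recommend_add_ons(cart, counts, top_n=2):
    """Suggest items most often bought together with what is in the cart."""
    scores = Counter()
    for item in cart:
        for other, n in counts.get(item, {}).items():
            if other not in cart:
                scores[other] += n
    return [item for item, _ in scores.most_common(top_n)]


# Hypothetical historical orders (illustrative data only).
orders = [
    ["laptop", "mouse", "laptop bag"],
    ["laptop", "mouse"],
    ["laptop", "usb hub"],
    ["phone", "phone case"],
    ["phone", "phone case", "charger"],
]
counts = build_co_purchase_counts(orders)
print(recommend_add_ons(["laptop"], counts, top_n=1))  # ['mouse']
```

At checkout, the same function could be called with the customer's current cart to pick the add-ons to display.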

Examples and uses of artificial intelligence technology

- Reinforcement learning. The data set is not labeled, but the AI system receives feedback after performing one or more actions.

- Machine vision: This technology gives machines the ability to see. Machine vision uses cameras, analog-to-digital conversion, and digital signal processing to capture and analyze visual information. Although often compared to human vision, machine vision is not tied to biology and can, for example, be programmed to see through walls. It is used in a wide variety of applications, from signature recognition to medical image analysis. Computer vision focuses on machine-based image processing and is often confused with machine vision.

- Natural language processing (NLP). This is the processing of human language by a computer program. One of the oldest and best-known examples of NLP is spam detection, which examines the subject line and body of an email to decide whether it is junk. Current approaches to NLP are based on machine learning. NLP tasks include text translation, sentiment analysis, and speech recognition.

- Robotics. This engineering field focuses on the design and manufacturing of robots. Robots are often used to perform tasks that are difficult for humans to perform or that are difficult to perform consistently. For example, robots are used on car manufacturing assembly lines and by NASA to move large objects in space. Researchers are also using machine learning to create robots that can interact in social environments.

- Self-driving cars. Autonomous vehicles use a combination of computer vision, image recognition, and deep learning to build the automated skills needed to steer a vehicle while staying in a given lane and avoiding unexpected obstacles such as pedestrians.

- Creation of text, images, and audio. Generative AI techniques, which create different types of media from text prompts, are being widely applied across enterprises to produce a seemingly unlimited range of content, from photorealistic art to email responses and screenplays.

Artificial intelligence is used in various fields. Some examples are given below:

- AI in health care. The biggest bets are on improving patient outcomes and reducing costs. Companies are applying machine learning to make better and faster medical diagnoses than humans. One of the best-known health care technologies is IBM Watson. It understands natural language and can respond to questions asked of it. The system mines patient data and other available data sources to form hypotheses, which it then presents with a confidence scoring scheme. Other AI applications include online virtual medical assistants and chatbots that help patients and health care customers find medical information, schedule appointments, understand billing processes, and complete other administrative processes. A variety of AI technologies are also being used to predict, fight, and understand pandemics such as COVID-19.

- AI in business. Machine learning algorithms are being integrated into analytics and customer relationship management (CRM) platforms to uncover insights on how to better serve customers. Chatbots are built into websites to provide immediate service to customers. The rapid advancement of generative AI technologies like ChatGPT is expected to have far-reaching effects, including eliminating jobs, changing product design, and disrupting business models.

- AI in education. AI can automate grading, freeing up teachers to spend more time on other tasks. It can assess students and adapt to their needs, helping them learn at their own pace. AI tutors can provide extra support to students and keep them on track. The technology could also change where and how students learn and perhaps even replace some teachers. As demonstrated by ChatGPT, Google Bard, and other large language models, generative AI can help teachers create course materials and other content and engage students in new ways. The advent of these tools also requires teachers to rethink student assignments and tests and to revise plagiarism policies.

- AI in finance. AI in personal finance applications like Intuit Mint and TurboTax is disrupting financial institutions. Such applications collect personal data and provide financial advice. Other programs, such as IBM Watson, have been applied to the home-buying process. Today, artificial intelligence software performs much of the trading on Wall Street.

- AI in law. The legal discovery process, which involves sifting through documents, is often overwhelming for humans. Using AI to automate the legal industry's labor-intensive processes saves time and improves client service. Law firms use machine learning to describe data and predict outcomes, computer vision to classify and extract information from documents, and NLP to interpret requests for information.

- AI in entertainment and media. Entertainment businesses use AI technology for targeted advertising, content recommendations and delivery, fraud detection, screenwriting, and film production. Automated journalism helps newsrooms streamline media workflows while reducing time, costs, and complexity. Newsrooms use AI to automate routine tasks like data entry and proofreading, to research topics, and to help with headlines. Questions remain about how journalism can reliably use ChatGPT and other generative AI to generate content.

- AI in software coding and IT processes. New generative AI tools can generate application code from natural language prompts, but these tools are still in their infancy and are unlikely to replace software engineers in the near future. AI is also being used to automate many IT processes, including data entry, fraud detection, customer service, predictive maintenance, and security.

- Security. Security vendors rank AI and machine learning high on the list of buzzwords they use to market their products, so buyers should approach such claims with caution. Nevertheless, AI techniques have been successfully applied to various aspects of cybersecurity, such as detecting anomalies, reducing false positives, and analyzing behavioral threats. Organizations use machine learning in security information and event management (SIEM) software and related areas to detect anomalies and identify suspicious activity that signals a threat. By analyzing data and using logic to identify similarities to known malicious code, AI can flag new attacks much sooner than human workers or previous iterations of the technology.

- AI in banking. Banks have successfully deployed chatbots to inform customers about services and offers and process transactions without human intervention. AI virtual assistants are used to improve and reduce the cost of banking regulatory compliance. Banking organizations are using AI to improve lending decisions, set loan limits, and identify investment opportunities.

- AI in manufacturing and transportation. Manufacturing has been at the forefront of incorporating robots into the workflow. In addition to its fundamental role in the operation of autonomous vehicles, AI technology is also being used in the transportation sector to manage traffic, predict flight delays, and improve the safety and efficiency of maritime transportation. In the supply chain, AI is replacing traditional methods of forecasting demand and anticipating disruption. This trend was accelerated by COVID-19, when many companies were caught off guard by the impact of the global pandemic on the supply and demand of goods.

As the hype around AI grows, vendors are scrambling to promote how their products and services use it. Often, what they call AI is just one component of the technology, such as machine learning. AI requires specialized hardware and software infrastructure to write and train machine learning algorithms. Although no single programming language is synonymous with AI, Python, R, Java, C++, and Julia have features that are popular among AI developers.

Generally, AI systems work by ingesting large amounts of labeled training data, analyzing the data for correlations and patterns, and using these patterns to make predictions about future states. In this way, a chatbot that is fed examples of text can learn to generate lifelike exchanges with people, and an image recognition tool can learn to identify and describe objects in images by reviewing millions of examples. New and rapidly improving generative AI techniques can create realistic text, images, music, and other media.
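The ingest, analyze, and predict loop described above can be sketched with a toy nearest-centroid classifier. This is only an illustration under stated assumptions: the two-number feature vectors and the "cat" and "dog" labels are invented, and real systems learn from vastly more data with more capable models.

```python
def train_centroids(examples):
    """Average the feature vectors of each label (the 'pattern' learned)."""
    sums, counts = {}, {}
    for features, label in examples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
            counts[label] = 0
        sums[label] = [s + x for s, x in zip(sums[label], features)]
        counts[label] += 1
    return {
        label: [s / counts[label] for s in total]
        for label, total in sums.items()
    }


def predict(centroids, features):
    """Return the label whose centroid is closest to the new example."""
    def squared_distance(centroid):
        return sum((a - b) ** 2 for a, b in zip(centroid, features))

    return min(centroids, key=lambda label: squared_distance(centroids[label]))


# Hypothetical labeled training data: (features, label).
training_data = [
    ([1.0, 1.2], "cat"), ([0.8, 1.0], "cat"), ([1.1, 0.9], "cat"),
    ([3.0, 3.2], "dog"), ([3.1, 2.9], "dog"), ([2.8, 3.0], "dog"),
]
centroids = train_centroids(training_data)
print(predict(centroids, [0.9, 1.1]))  # cat
print(predict(centroids, [3.2, 3.1]))  # dog
```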

Types of artificial intelligence

Arend Hintze, assistant professor of integrative biology and computer science and engineering at Michigan State University, said AI can be classified into four types, beginning with the task-specific intelligent systems in wide use today and progressing to sentient systems, which do not yet exist. The categories are as follows:

- Reactive machines. These AI systems have no memory and are task-specific. An example is Deep Blue, IBM’s chess program that defeated Garry Kasparov in the 1990s. Deep Blue can identify pieces on a chessboard and make predictions, but because it has no memory, it cannot use past experiences to inform future ones.

- Limited memory. These AI systems have memories, so they can use past experience to make future decisions. Some of the decision-making capabilities of self-driving cars are designed this way.

- Theory of Mind. The theory of mind is a psychological term. When applied to AI, it gives systems the social intelligence to understand emotions. This type of AI would be able to infer human intentions and predict behavior. This is a skill that AI systems need to become essential members of human teams.

- Self-awareness. In this category, AI systems have a sense of self, which gives them consciousness. A self-aware machine understands its own current state. This type of AI does not exist yet.

On the basis of the nature of artificial intelligence, it can be classified in two ways:

Weak AI and strong AI

👉Weak AI, also known as narrow AI or artificial narrow intelligence (ANI), is AI that is trained and focused on performing a specific task. Weak AI drives most of the AI around us today. This type of AI is far from weak, so “narrow” might be a more accurate description. It enables extremely powerful applications such as Apple’s Siri, Amazon’s Alexa, IBM Watson, and self-driving cars.

👉Strong AI includes artificial general intelligence (AGI) and artificial superintelligence (ASI). Artificial general intelligence (AGI), or general AI, is a theoretical form of AI in which machines have intelligence comparable to that of humans. It would have a self-aware consciousness with the ability to solve problems, learn, and plan for the future. Artificial superintelligence (ASI), also known as superintelligence, would exceed the intelligence and capabilities of the human brain. Although strong AI is still entirely theoretical, with no practical examples in use today, that does not mean AI researchers are not exploring its development. In the meantime, the best examples of ASI may come from science fiction, such as HAL, the superhuman, rogue computer assistant in 2001: A Space Odyssey.

How does artificial intelligence (AI) work?

Decades before McCarthy’s definition, the conversation about artificial intelligence began with Alan Turing’s seminal paper “Computing Machinery and Intelligence,” published in 1950. In this paper, Turing, often referred to as the “father of computer science,” asks the question, “Can machines think?” From there, he proposes a test now famously known as the “Turing Test,” in which a human interrogator attempts to distinguish between computer and human text responses. Although this test has received much scrutiny since its publication, it remains an important part of the history of AI as well as an ongoing concept within philosophy, as it draws on ideas about linguistics.

Stuart Russell and Peter Norvig then published Artificial Intelligence: A Modern Approach, which became one of the leading textbooks in AI research. In it, they highlight four possible goals or definitions of AI that differentiate computer systems on the basis of rationality and of thinking versus acting.

Human approach:

- Systems that think like humans

- Systems that act like humans

Ideal approach:

- Systems that think rationally

- Systems that act rationally

Alan Turing’s definition would fall into the category of “systems that act like humans.”

In its simplest form, artificial intelligence is a field that combines computer science with robust datasets to enable problem-solving. It also includes the subfields of machine learning and deep learning, which are often mentioned alongside artificial intelligence. These fields include AI algorithms that aim to create expert systems that make predictions and classifications based on input data.

Over the past few years, artificial intelligence has gone through several cycles of hype, but even for skeptics, OpenAI’s release of ChatGPT seems to be a turning point. The last time generative AI became this big, the breakthroughs were in computer vision, and now we’re seeing breakthroughs in natural language processing. And it’s not just language. Generative models can also learn grammars for software code, molecules, natural images, and many other types of data.

The applications of this technology are growing every day, and we are just beginning to explore its potential. But as the hype around the use of AI in business grows, the conversation around ethics becomes increasingly important.

👉Artificial intelligence programming focuses on cognitive skills such as:

- Learning. This aspect of AI programming focuses on acquiring data and creating rules for turning it into actionable information. The rules, called algorithms, give computing devices step-by-step instructions for completing a particular task.

- Reasoning. This aspect of AI programming focuses on selecting the appropriate algorithm to achieve the desired result.

- Self-correction: This aspect of AI programming is designed to continuously improve the algorithms and provide the most accurate results possible.

- Creativity. This aspect of AI uses neural networks, rule-based systems, statistical methods, and other AI techniques to generate new images, new text, new music, and new ideas.
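The learning and self-correction skills listed above can be illustrated with a perceptron, one of the simplest learning algorithms. The sketch below is only an illustration: it learns the logical OR function from four labeled examples, nudging its weights each time a prediction is wrong, and the learning rate and epoch count are arbitrary choices.

```python
def train_perceptron(samples, epochs=20, learning_rate=0.1):
    """Learn weights from (inputs, target) pairs by correcting mistakes."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            activation = sum(w * x for w, x in zip(weights, inputs)) + bias
            prediction = 1 if activation > 0 else 0
            error = target - prediction  # zero when the prediction is right
            # Self-correction: shift the weights toward the right answer.
            weights = [
                w + learning_rate * error * x for w, x in zip(weights, inputs)
            ]
            bias += learning_rate * error
    return weights, bias


def classify(weights, bias, inputs):
    """Apply the learned rule to new inputs."""
    activation = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if activation > 0 else 0


# Labeled examples of logical OR.
or_samples = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]
weights, bias = train_perceptron(or_samples)
print([classify(weights, bias, inputs) for inputs, _ in or_samples])  # [0, 1, 1, 1]
```

A perceptron only converges when the classes can be separated by a straight line, which is one reason modern systems stack many such units into deeper networks.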

Differences between AI, machine learning and deep learning

AI, machine learning, and deep learning are common terms in enterprise IT and are sometimes used interchangeably, especially by companies in their marketing materials. But there are distinctions. The term AI, coined in the 1950s, refers to the emulation of human intelligence by machines. It covers an ever-changing set of capabilities as new technologies are developed. Technologies that fall under the umbrella of AI include machine learning and deep learning.

👉For more about machine learning and deep learning

Machine learning allows software applications to become more accurate at predicting outcomes without being explicitly programmed to do so. Machine learning algorithms use historical data as input to predict new output values. This approach has become far more effective with the rise of large data sets to train on. Deep learning, a subset of machine learning, is based on our understanding of how the brain is structured. Deep learning’s use of artificial neural network structures underpins recent advances in AI, including self-driving cars and ChatGPT.
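As a minimal sketch of using historical data to predict a new output value, the example below fits a straight line with ordinary least squares. The monthly sales figures are invented for illustration, and a straight line is an assumption that real data often will not satisfy.

```python
def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical historical data: month number and units sold.
months = [1, 2, 3, 4, 5]
units_sold = [12, 14, 16, 18, 20]

slope, intercept = fit_line(months, units_sold)
forecast_month_6 = slope * 6 + intercept
print(round(forecast_month_6, 2))  # 22.0
```

Nothing in `fit_line` encodes how sales behave. The relationship is estimated entirely from the data, which is the sense in which the program is not explicitly programmed.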

Why is artificial intelligence so important?

AI is important because it has the potential to change the way we live, work, and play. Businesses have used it effectively to automate tasks performed by humans, such as customer service, lead generation, fraud detection, and quality control. In a number of areas, AI can perform tasks much better than humans. Particularly when it comes to repetitive, detail-oriented tasks, such as analyzing large numbers of legal documents to ensure that relevant fields are filled out properly, AI tools often complete jobs quickly and with relatively few errors. Because AI can process large data sets, it can also give businesses insights into their operations that they might otherwise have missed. The rapidly expanding range of generative AI tools will be important in areas from education and marketing to product design.

In fact, advances in AI technology have not only contributed to an explosion in efficiency but have also opened the door to entirely new business opportunities for some large companies. Before the current wave of AI, it was hard to imagine using computer software to connect passengers with taxis. But Uber became a Fortune 500 company by doing so.

AI is at the heart of today’s largest and most successful companies, including Alphabet, Apple, Microsoft, and Meta, which use AI technology to improve their operations and outperform their competitors. At Alphabet Inc.’s Google, for example, AI is central to the company’s search engine, to Waymo’s self-driving cars, and to Google Brain, which invented the transformer neural network architecture.

Advantages and disadvantages of artificial intelligence

Artificial neural networks and deep learning AI technologies are developing rapidly. The main reason for this is that AI can process large amounts of data much faster and make more accurate predictions than humans.

While human researchers are overwhelmed by the vast amounts of data created every day, AI applications using machine learning can take that data and instantly transform it into actionable information. At the time of this writing, the main drawback of AI is the high cost of processing the large amounts of data required for AI programming. As AI technology is incorporated into more products and services, organizations must also pay attention to the potential of AI to create biased and discriminatory systems, intentionally or unintentionally.

Advantages of artificial intelligence

Some of the benefits of artificial intelligence include:

- Good at detail-oriented work. AI has proven to be as good as or better than doctors at diagnosing some cancers, such as breast cancer and melanoma.

- Spend less time on data-intensive tasks. AI is widely used in data-intensive industries such as banking, securities, pharmaceuticals, and insurance to reduce the time taken to analyze large data sets. For example, financial services routinely use AI to process loan applications and detect fraud.

- Labor savings and increased productivity. An example here is the use of warehouse automation. This has increased during the pandemic and is expected to increase with the integration of AI and machine learning.

- Provides consistent results. The best AI translation tools provide a high level of consistency and give small businesses the ability to reach customers in their native language.

- Increase customer satisfaction through personalization. AI can personalize content, messages, advertisements, recommendations, and websites for individual customers.

Disadvantages of artificial intelligence

- It is expensive.

- It requires deep technical expertise.

- There is a limited supply of qualified workers to build AI tools.

- It reflects the biases of its training data at scale.

- It lacks the ability to generalize from one task to another.

- It eliminates human jobs, increasing unemployment.

Comparison of Deep Learning and Machine Learning

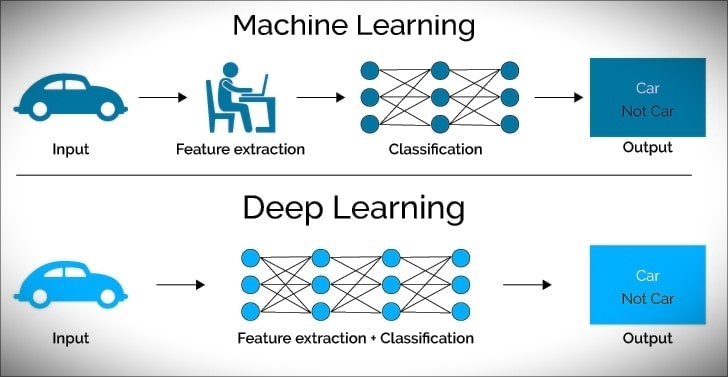

The terms deep learning and machine learning tend to be used interchangeably, so it is worth noting the nuances between the two. As mentioned earlier, deep learning and machine learning are both subfields of artificial intelligence, and deep learning is actually a subfield of machine learning.

Deep learning is built on neural networks. The “deep” in deep learning refers to depth in layers: a neural network that has three or more layers, counting the inputs and outputs, can be considered a deep learning algorithm. This is usually represented using a diagram.

The difference between deep learning and machine learning lies in how each algorithm learns. Deep learning automates much of the feature extraction part of the process, eliminating some of the manual intervention required and allowing the use of larger data sets. As Lex Fridman said in his MIT lecture, deep learning can be thought of as “scalable machine learning.” Classical, or “shallow,” machine learning relies more heavily on human intervention to learn. Human experts determine the hierarchy of features needed to understand the differences between data inputs, and training usually requires more structured data.

“Deep” machine learning can use labeled data sets, an approach also known as supervised learning, to inform its algorithm, but it does not necessarily require them. It can ingest unstructured data in its raw form (text, images, etc.) and automatically determine the hierarchy of features that distinguish different categories of data from one another. Unlike classical machine learning, it does not require human intervention to process data, which lets machine learning scale in more interesting ways.
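To make the idea of stacked layers concrete, here is a minimal sketch of a forward pass through a small network with an input layer, two hidden layers, and an output layer. The layer sizes are arbitrary assumptions and the weights are random and untrained, so the output is meaningless; the point is only to show how data flows through successive layers.

```python
import random


def relu(values):
    """Rectified linear activation applied element-wise."""
    return [max(0.0, v) for v in values]


def dense(inputs, weights, biases):
    """One fully connected layer: weighted sums of inputs plus biases."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


def init_layer(n_inputs, n_outputs, rng):
    """Create random (untrained) weights and zero biases for one layer."""
    weights = [
        [rng.uniform(-1.0, 1.0) for _ in range(n_inputs)]
        for _ in range(n_outputs)
    ]
    biases = [0.0] * n_outputs
    return weights, biases


def forward(inputs, layers):
    """Pass the inputs through every layer in order."""
    activations = inputs
    for index, (weights, biases) in enumerate(layers):
        activations = dense(activations, weights, biases)
        if index < len(layers) - 1:  # no activation on the output layer
            activations = relu(activations)
    return activations


rng = random.Random(0)
layer_sizes = [4, 8, 8, 3]  # hypothetical: 4 inputs, two hidden layers, 3 outputs
layers = [init_layer(a, b, rng) for a, b in zip(layer_sizes, layer_sizes[1:])]
output = forward([0.5, -0.2, 0.1, 0.9], layers)
print(len(output))  # 3
```

Training would adjust the weights so that the hidden layers come to represent useful features, which is the automated feature extraction described above.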

The rise of generative models

Generative AI refers to deep learning models that can take raw data (for example, the entire Wikipedia or a collection of Rembrandts) and “learn” to generate statistically probable outputs in response to prompts. Broadly speaking, generative models encode a simplified representation of their training data and draw from it to create new work that is similar, but not identical, to the original data.

Generative models have been used in statistics for many years to analyze numerical data. The rise of deep learning, however, made it possible to extend them to images, audio, and other complex data types. Among the first class of models to accomplish this crossover feat were variational autoencoders (VAEs), introduced in 2013. VAEs were the first deep learning models widely used to generate realistic images and sounds.
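The statistical idea behind generative models can be shown with something far simpler than a VAE. The sketch below is a classical bigram (Markov chain) text generator, not a deep learning model: it records which word follows which in a tiny invented corpus and then samples new sequences that are similar, but not necessarily identical, to the original text.

```python
import random
from collections import defaultdict


def train_bigram_model(text):
    """Record, for every word, the words that followed it in the text."""
    words = text.split()
    transitions = defaultdict(list)
    for current, following in zip(words, words[1:]):
        transitions[current].append(following)
    return transitions


def generate(transitions, start, length, rng):
    """Sample up to `length` words, each drawn from observed followers."""
    word = start
    output = [word]
    for _ in range(length - 1):
        candidates = transitions.get(word)
        if not candidates:  # no observed follower: stop early
            break
        word = rng.choice(candidates)
        output.append(word)
    return " ".join(output)


# Tiny illustrative corpus (an assumption, not real training data).
corpus = "the cat sat on the mat and the cat ran to the door"
model = train_bigram_model(corpus)
print(generate(model, "the", 8, random.Random(42)))
```

Because followers that occurred more often appear more often in each list, sampling from the list reproduces the statistics of the training text, which is the core of "statistically probable outputs".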

“VAE opens the door to deeper generative modeling by making it easier to create models,” said Akash Srivastava, a generative AI expert at the MIT-IBM Watson AI Lab. “A lot of what we think of as generative AI today started here.”

Early examples from models like GPT-3, BERT, and DALL-E 2 show what is possible. The future will bring models trained on a wide range of unlabeled data sets that can be used for a variety of tasks with minimal fine-tuning. Systems that perform specific tasks in a single domain are giving way to broad AI that learns more generally and works across domains and problems. Foundation models, trained on large, unlabeled data sets and fine-tuned for different applications, are driving this change.

When it comes to generative AI, foundation models are predicted to dramatically accelerate AI adoption in the enterprise. Reducing labeling requirements would lead to significant improvements, and the accuracy and efficiency of the AI-powered automation these models enable will allow far more companies to deploy AI in a wider range of mission-critical situations. For IBM, the hope is that the power of foundation models will eventually be available to all businesses in a frictionless hybrid cloud environment.

👉You should know more about artificial intelligence.

AI tools and services

AI tools and services are evolving rapidly. Current innovations in AI tools and services began with 2012’s AlexNet neural network, which ushered in a new era of high-performance AI built on GPUs and large data sets. The main change is that neural networks can now be trained in parallel on large amounts of data across multiple GPU cores in a more scalable manner.

In recent years, the symbiotic relationship between AI discoveries at Google, Microsoft, and OpenAI and the hardware innovations pioneered by Nvidia has made it possible to run ever-larger AI models on more connected GPUs, driving revolutionary improvements in performance and scalability.

The collaboration among these AI giants, not to mention dozens of other successful AI services, has been crucial to the recent success of ChatGPT. Below is an overview of key innovations in AI tools and services.

Transformers. Google, for example, led the way in finding a more efficient process for provisioning AI training across large clusters of commodity PCs with GPUs. This paved the way for the discovery of transformers, which automate many aspects of training AI on unlabeled data.

Hardware optimization. Equally important, hardware vendors like Nvidia are optimizing their microcode to run the most common algorithms across multiple GPU cores in parallel. Nvidia claims a million-fold improvement in AI performance through a combination of faster hardware, more efficient AI algorithms, fine-tuned GPU instructions, and better data center integration. Nvidia is also working with all cloud providers to make this capability more widely available as AI-as-a-Service through IaaS, SaaS, and PaaS models.

Generative pre-trained transformers. The AI stack has also evolved rapidly over the past few years. Previously, companies had to train their AI models from scratch. Vendors such as OpenAI, Nvidia, Microsoft, and Google increasingly offer generative pre-trained transformers (GPTs) that can be fine-tuned for specific tasks, significantly reducing cost, required expertise, and time. Some of the largest models are estimated to cost $5 million to $10 million per training run, but companies can fine-tune the resulting models for a few thousand dollars. This shortens time to market and reduces risk.

AI cloud services. One of the biggest obstacles preventing companies from effectively using AI in their businesses is the data engineering and data science work required to weave AI capabilities into existing apps or to develop new ones. All major cloud providers are rolling out their own branded AI-as-a-service offerings to streamline data preparation, model development, and application deployment. Prominent examples include AWS AI Services, Google Cloud AI, Microsoft Azure AI Platform, IBM AI Solutions, and Oracle Cloud Infrastructure AI Services.

State-of-the-art AI models as a service. Leading AI model developers also offer cutting-edge AI models on top of these cloud services. OpenAI has several large language models optimized for chat, NLP, image generation, and code generation that are provisioned through Azure. Nvidia has taken a more cloud-agnostic approach by selling optimized AI infrastructure and foundation models for text, images, and medical data that are available across all cloud providers. Hundreds of other players also offer models customized for different industries and use cases.
