Natural language processing (NLP) is the field of computing that enables programs to understand human language in written or spoken form. It is a branch of artificial intelligence. NLP is used in many fields, such as healthcare, search engines, the automotive industry, and many more.

What is natural language processing?

Natural language processing is a subset of artificial intelligence, used in computer science to enable computers to understand human speech and text.

It is used in everyday life in products such as digital voice assistants, email programs, and translation apps that help people understand other languages.

The evolution of natural language processing

NLP draws on a variety of disciplines, including mid-20th-century developments in computer science and computational linguistics. Its development includes major milestones such as:

1950s

The roots of natural language processing go back to the 1950s, when Alan Turing proposed the Turing Test to determine whether a computer can exhibit truly intelligent behavior. The test incorporates automated interpretation and natural language generation as measures of intelligence.

From 1950 to 1990

NLP was primarily rule-based, using hand-crafted rules developed by linguists to determine how computers should process language.

1990s

Advances in computing allowed NLP techniques to be developed more efficiently, and the top-down, linguistics-first approach to natural language processing gave way to a more statistical one. Faster computers could derive rules from language statistics without linguists having to write every rule by hand. Data-driven natural language processing has since become mainstream, and the field has shifted from a linguist-driven approach to an engineering-driven one that draws on broader scientific disciplines rather than going deep into linguistics.

From 2000 to 2020

The term natural language processing rapidly gained popularity. With advances in computing power, NLP found many real-world applications. Today, NLP approaches combine classical linguistics with statistical methods.

Natural language processing plays a vital role in technology and the way humans interact with it. It is used in many real-world applications in both business and consumer domains, such as chatbots, cybersecurity, search engines, and big data analytics. Although NLP is not without its challenges, it is expected to remain an important part of both industry and daily life.


Natural language processing technology

Natural language processing uses a wide range of techniques to analyze human language. Some common techniques are described here:

- Sentiment analysis: This NLP technique analyzes text to determine whether it expresses a positive or negative opinion. It is commonly used by businesses to understand customer feedback.

- Summarization: This technique condenses a longer text, such as an article, into a shorter version that is easier to read and understand.

- Keyword extraction: This technique identifies the most important words or phrases in a text. These keywords are mostly used for search engine optimization, social media monitoring, and other business purposes.
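As a rough illustration of sentiment analysis, the sketch below labels a text by counting words from a tiny positive and negative word list. The word lists and the `sentiment` function are hypothetical, made up for this example; real systems use far larger lexicons or trained models, and this simple counting cannot handle negation or sarcasm.

```python
import re

# Hypothetical, tiny sentiment lexicon for illustration only.
POSITIVE = {"good", "great", "excellent", "love", "happy", "fast"}
NEGATIVE = {"bad", "poor", "terrible", "hate", "slow", "broken"}

def sentiment(text: str) -> str:
    """Label text as positive, negative, or neutral by counting lexicon hits."""
    words = re.findall(r"[a-z']+", text.lower())
    # Each positive word adds 1, each negative word subtracts 1.
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

print(sentiment("Great service and fast delivery!"))  # positive
print(sentiment("The app is slow and the screen arrived broken."))  # negative
```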

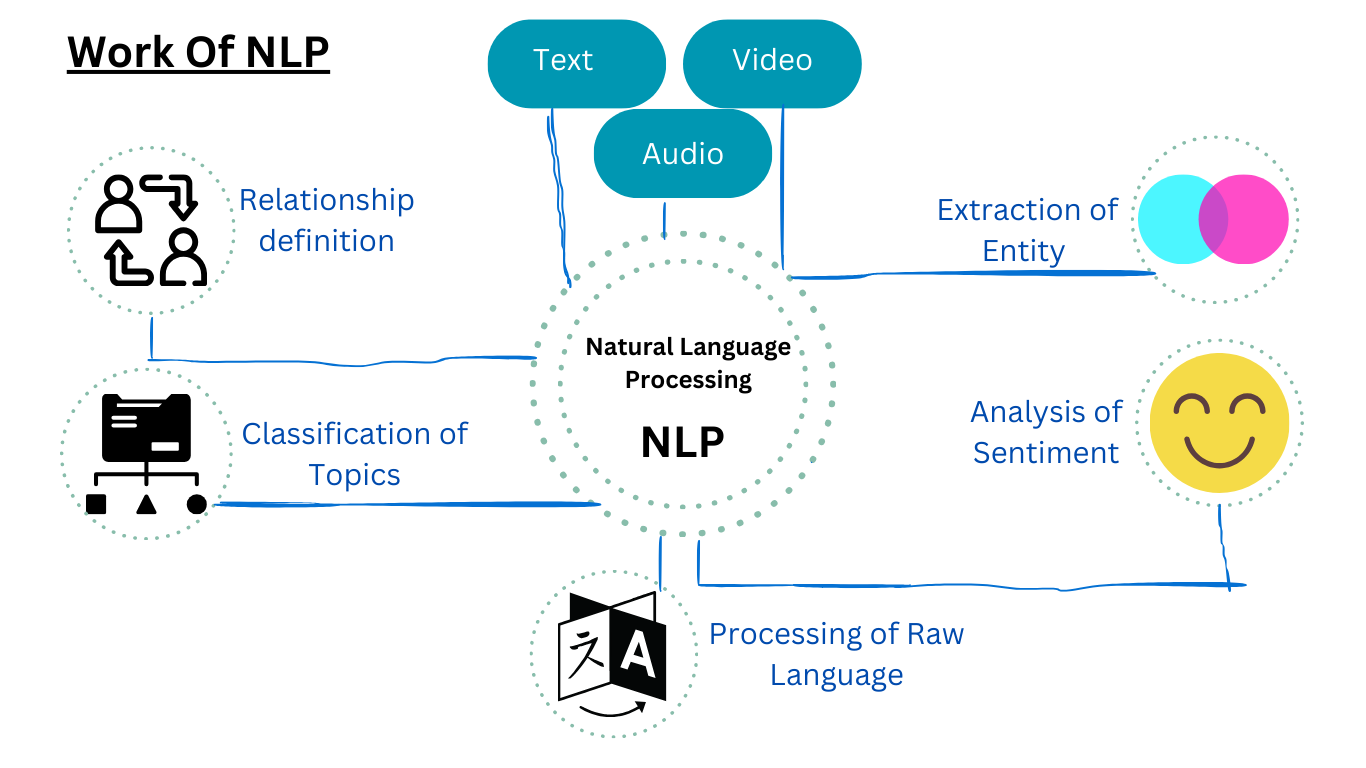

How does natural language processing work?

Natural language processing allows computers to understand natural language in a way that resembles how humans do. Whether the language is written or spoken, NLP, with the help of artificial intelligence, takes real-world input and processes it. Just as humans use their senses and brains, computers use microphones and programs to hear, read, and process input language. NLP processes the input and converts it into a representation that the computer can work with.

Natural language processing involves two main phases: data preprocessing and algorithm development.

Data preprocessing: This is the process of preparing and cleaning text data so that a machine can easily analyze it. Transforming the data into a usable format highlights the features that algorithms can work with. Preprocessing can be done in several ways:

- Tokenization: During tokenization, text is divided into smaller units called tokens, such as words or sentences.

- Removing punctuation and stop words: At this stage, punctuation and common words that carry little meaning (stop words) are removed, leaving the distinctive words that provide more information about the text.

- Lemmatization and stemming: Words are reduced to their base or root form, for example "running" to "run", so that different forms of a word are treated the same way.

- Part-of-speech tagging: At this stage, words are labeled according to their part of speech, such as noun, pronoun, verb, or adjective.
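The first three preprocessing steps can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions: the stop-word list is a hypothetical, abbreviated one, and `crude_stem` is a deliberately naive suffix stripper written for this example (it is not the Porter stemmer or a real lemmatizer). Part-of-speech tagging is not shown; in practice, libraries such as NLTK or spaCy provide proper tokenizers, stemmers, lemmatizers, and part-of-speech taggers.

```python
import re

# Hypothetical, abbreviated stop-word list for illustration.
STOP_WORDS = {"the", "a", "an", "is", "are", "and", "of", "to", "in", "on", "were"}

def tokenize(text):
    # Lowercase and split into word tokens; punctuation is dropped here.
    return re.findall(r"[a-z0-9']+", text.lower())

def remove_stop_words(tokens):
    return [t for t in tokens if t not in STOP_WORDS]

def crude_stem(token):
    # Naive suffix stripping; keeps at least three characters of the stem.
    for suffix in ("ing", "ed", "es", "s"):
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token

def preprocess(text):
    return [crude_stem(t) for t in remove_stop_words(tokenize(text))]

print(preprocess("The analysts were reading the customer reviews."))
# ['analyst', 'read', 'customer', 'review']
```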

Once the data is preprocessed, algorithms are developed to process it. There are many different types of natural language processing algorithms, but two main types are commonly used:

Rule-based systems

This system uses carefully designed language rules. This approach was used early in the development of natural language processing and is still used today.
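A rule-based system can be as simple as a list of hand-written patterns. The sketch below routes customer messages to an intent using regular expressions; the patterns and intent names are hypothetical examples, not taken from any real product. Rules like these are easy to read and predictable, but every new way of phrasing a request needs a new rule.

```python
import re

# Hypothetical hand-written rules: each pattern maps to an intent label.
RULES = [
    (re.compile(r"\b(refund|money back)\b", re.I), "billing"),
    (re.compile(r"\b(password|log ?in|sign ?in)\b", re.I), "account_access"),
    (re.compile(r"\b(deliver(y|ed)?|shipping|package)\b", re.I), "shipping"),
]

def classify_intent(utterance: str) -> str:
    # Rules are checked in order; the first matching rule wins.
    for pattern, intent in RULES:
        if pattern.search(utterance):
            return intent
    return "unknown"

print(classify_intent("I forgot my password"))  # account_access
print(classify_intent("Where is my package?"))  # shipping
```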

Machine-learning-based systems

Machine-learning algorithms use statistical methods. They learn to perform tasks from training data and adjust their methods as more data is processed. NLP algorithms that combine machine learning, deep learning, and neural networks refine their own rules through repeated processing and learning.
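To contrast with hand-written rules, here is a minimal sketch of a statistical approach: a multinomial Naive Bayes text classifier with add-one smoothing, written in plain Python. Naive Bayes is chosen here only as a simple, well-known example of learning from labeled data; the four training sentences are hypothetical toy data, and real systems train on far larger datasets and often use neural networks instead.

```python
import math
import re
from collections import Counter, defaultdict

def tokenize(text):
    return re.findall(r"[a-z']+", text.lower())

class NaiveBayes:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, texts, labels):
        self.word_counts = defaultdict(Counter)  # label -> word frequencies
        self.label_counts = Counter(labels)
        self.vocab = set()
        for text, label in zip(texts, labels):
            tokens = tokenize(text)
            self.word_counts[label].update(tokens)
            self.vocab.update(tokens)
        return self

    def predict(self, text):
        total_docs = sum(self.label_counts.values())
        best_label, best_score = None, -math.inf
        for label, doc_count in self.label_counts.items():
            # log P(label) + sum of log P(word | label)
            score = math.log(doc_count / total_docs)
            denom = sum(self.word_counts[label].values()) + len(self.vocab)
            for word in tokenize(text):
                if word in self.vocab:  # ignore words never seen in training
                    score += math.log((self.word_counts[label][word] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label

# Hypothetical toy training data.
train_texts = [
    "great product, works well",
    "love it, excellent quality",
    "terrible, broke after a day",
    "awful quality, very disappointed",
]
train_labels = ["positive", "positive", "negative", "negative"]

model = NaiveBayes().fit(train_texts, train_labels)
print(model.predict("excellent, works great"))  # positive
print(model.predict("disappointed, it broke"))  # negative
```

Unlike the rule-based sketch, nothing here is written by hand for specific phrases: adding more labeled examples changes the word statistics and therefore the predictions.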

Why is natural language processing important?

Businesses work with large amounts of unstructured, text-heavy data and need ways to process it efficiently. Much of the information created online and stored in databases is natural human language, and until recently, companies were not able to analyze this data effectively. This is where natural language processing comes into play.

The benefits of natural language processing can be seen by considering two statements: “Cloud computing insurance should be part of every service level agreement” and “With the right SLAs, you can be assured of a good night’s sleep in the cloud.” A program that relies on natural language processing recognizes that cloud computing is an entity, that cloud is an abbreviated form of cloud computing, and that SLA is an industry acronym for service level agreement.

These are ambiguous elements that often appear in human language, and machine learning algorithms have historically been poor at interpreting them. Deep learning and machine learning techniques have now improved so that algorithms can interpret them effectively. These improvements have expanded the breadth and depth of data that can be analyzed.
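The cloud computing example above can be approximated with a simple alias table that maps surface forms to a canonical entity. This is a minimal, dictionary-based sketch: the `ALIASES` table and `find_entities` function are hypothetical, and real systems learn such mappings with entity-linking models rather than a fixed list. Longer aliases are matched first so that "cloud computing" is not split into "cloud".

```python
import re

# Hypothetical alias table mapping surface forms to canonical entities.
ALIASES = {
    "cloud computing": "cloud computing",
    "cloud": "cloud computing",
    "service level agreement": "service level agreement",
    "sla": "service level agreement",
    "slas": "service level agreement",
}

# Try longer aliases first so "cloud computing" wins over "cloud".
_PATTERN = re.compile(
    r"\b(" + "|".join(sorted(map(re.escape, ALIASES), key=len, reverse=True)) + r")\b",
    re.IGNORECASE,
)

def find_entities(text):
    """Return the canonical entity for every alias mentioned in the text."""
    return [ALIASES[m.group(1).lower()] for m in _PATTERN.finditer(text)]

s1 = "Cloud computing insurance should be part of every service level agreement"
s2 = "With the right SLAs, you can be assured of a good night's sleep in the cloud."
print(find_entities(s1))  # ['cloud computing', 'service level agreement']
print(find_entities(s2))  # ['service level agreement', 'cloud computing']
```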

What is natural language processing used for?

Some of the main tasks performed by natural language processing algorithms are:

- Text classification: This involves assigning tags to text to categorize it. It is useful for sentiment analysis, which helps natural language processing algorithms determine the sentiment behind a text. For example, if brand A is mentioned in X texts, the algorithm can determine how many of those mentions are positive and how many are negative. It also helps with intent detection, predicting what the speaker or writer is likely to do based on the text they produce.

- Text extraction: This includes automatic text summarization and searching for important data. An example of this is keyword extraction, which extracts the most important words from text. It helps with search engine optimization. Doing this using natural language processing requires some programming and is not fully automated. However, there are many useful keyword extraction tools that automate much of the process. Users only need to set the parameters within the program. For example, a tool might extract the most frequently used words in text. Another example is named entity detection, which extracts names of people, places, and other entities from text.

- Machine translation: This is the process by which a computer translates text from one language, such as English, to another, such as French, without human intervention.

- Natural language generation: This involves using natural language processing algorithms to analyze unstructured data and automatically generate content based on that data. An example is a language model such as GPT-3, which can analyze unstructured text and generate authentic-sounding text based on it.
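The keyword-extraction tool described above, one that extracts the most frequently used words in a text, can be sketched with a word counter. This is a minimal sketch: the stop-word list, the `extract_keywords` function, and its `top_n` parameter are hypothetical choices for this example. Real tools usually weight terms more carefully (for example with TF-IDF) instead of using raw counts.

```python
import re
from collections import Counter

# Hypothetical stop-word list; real tools ship much larger ones.
STOP_WORDS = {"the", "a", "an", "and", "or", "of", "to", "in", "is", "are",
              "for", "on", "with", "that", "this", "it", "as", "by", "can"}

def extract_keywords(text, top_n=3):
    """Return the top_n most frequent non-stop-words (longer than 2 letters)."""
    words = re.findall(r"[a-z]+", text.lower())
    counts = Counter(w for w in words if w not in STOP_WORDS and len(w) > 2)
    return [word for word, _ in counts.most_common(top_n)]

article = (
    "Search engines rank pages by relevance. Keyword extraction helps search "
    "engines understand pages, and keyword tools are used for search engine "
    "optimization."
)
print(extract_keywords(article))
```

Here "search" appears three times and ranks first; the parameter `top_n` plays the role of the settings a user would adjust in a keyword-extraction tool.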

The above functions are used in various real-world applications, such as:

- Customer Feedback Analytics: AI analyzes customer reviews on social media.

- Customer Service Automation: Enable a voice assistant on the other end of your customer service phone line to understand what your customers are saying and use voice recognition to correctly route the call.

- Automatic translation: Use tools like Google Translate, Bing Translate, and Translate Me.

- Academic research and analysis: AI can analyze large amounts of academic content and research papers based on the text itself, not just its metadata.

- Analyzing and classifying medical records: AI uses insights to predict and, ideally, prevent diseases.

- Word processing: Plagiarism detection and proofreading, using tools such as Grammarly and Microsoft Word.

- Stock Predictions and Financial Trading Insights: Uses AI to analyze market history and 10-K documents to provide a comprehensive overview of a company’s financial performance.

- Talent recruitment for human resources departments; and

- Automation of routine litigation tasks, for example, artificially intelligent lawyers.

Much of the research being done on natural language processing revolves around search, especially enterprise search. Enterprise search lets users query data sets by asking questions in the same form they might pose to another person. The machine interprets the key elements of the question that correspond to specific features in the dataset and returns an answer.
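A very small version of this idea matches the meaningful words in a question against the words in each record and returns the best match. This is a minimal sketch under loud assumptions: the three documents, the stop-word list, and the `search` function are hypothetical, and simple word overlap stands in for the ranking methods (such as TF-IDF, BM25, or learned embeddings) that real enterprise search systems use.

```python
import re

# Hypothetical in-memory dataset an enterprise search tool might index.
DOCUMENTS = {
    "hr-policy": "Employees accrue vacation days each month and can carry over unused days.",
    "it-guide": "Reset your password from the login page or contact the IT help desk.",
    "expenses": "Submit travel expense reports within thirty days of the trip.",
}

# Hypothetical stop words: question words and fillers that carry little meaning.
STOP_WORDS = {"how", "do", "i", "my", "the", "a", "to", "of", "is", "what",
              "can", "your", "or", "from", "and", "each"}

def terms(text):
    return {w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOP_WORDS}

def search(question):
    """Return the id of the document sharing the most terms with the question."""
    q = terms(question)
    scores = {doc_id: len(q & terms(body)) for doc_id, body in DOCUMENTS.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else None  # None when nothing matches

print(search("How do I reset my password?"))  # it-guide
print(search("How many vacation days can I carry over?"))  # hr-policy
```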

NLP allows you to interpret and analyze unstructured, free text. Free text files, such as patient medical records, store large amounts of information. Before the advent of deep learning-based NLP models, this information was inaccessible to computer-assisted analysis and could not be analyzed in a systematic manner. NLP allows analysts to sift through large amounts of free text to find relevant information.

Sentiment analysis is another major use case for NLP. Data scientists use sentiment analysis to evaluate social media comments to see how a company’s brand is performing, or to review notes from customer service teams to identify areas where people want the business to perform better.

What are the benefits of natural language processing?

When used for language translation, natural language processing can translate text into another language quickly. It is usable by businesses and consumers alike.

Common benefits of NLP include:

- Analyze structured and unstructured data, including voice, text, and social media content.

- Improve customer satisfaction and experience by identifying insights using sentiment analysis.

- Reduce costs by employing NLP-enabled AI to perform specific tasks, such as chatting with customers via chatbots or analyzing large amounts of text data.

- Gain a deeper understanding of your target market and brand by performing NLP analysis on relevant data such as social media posts, focus group surveys, and reviews.

Limitations of natural language processing

Natural language processing is used in a wide range of areas, but it has some limitations. Many NLP tools struggle with sarcastic language, subtle sentiment, slang, grammatical errors, and other kinds of irregular language. As a result, NLP tends to work best in limited, well-defined situations.

Challenges of natural language processing

There are many challenges in natural language processing, most of which stem from the fact that natural language is constantly evolving and always contains some degree of ambiguity. They include:

- Precision: Computers have traditionally required humans to “speak” to them in precise, unambiguous, and highly structured programming languages, or through a limited number of clearly spoken voice commands. Human speech, however, is not always precise. It is often ambiguous, and its structure can depend on many complex variables, such as slang, regional dialects, and social context.

- Tone of voice and inflection: Natural language processing has not yet been perfected. For example, semantic analysis can still be a challenge. Another difficulty is that programs usually struggle to understand abstract language use. For example, natural language processing cannot easily detect sarcasm. These tasks usually require understanding both the words being used and their context. As another example, the meaning of a sentence can change depending on which words or syllables the speaker stresses. NLP algorithms may miss subtle but important changes in intonation when performing speech recognition. Tone and inflection also vary between accents, which can make them difficult for algorithms to interpret.

- Evolving use of language: Natural language processing is also challenged by the fact that languages, and the way people use them, are constantly changing. Although languages have rules, those rules are not set in stone and can change over time. Hard computational rules that work today may become obsolete as the characteristics of real-world language change.

Examples of natural language processing

Natural language processing may sound like science fiction, but people already use and interact with NLP tools and services every day.

For example, chatbots use NLP to communicate with consumers and provide solutions directly, pointing them to suitable products and related resources. Although chatbots can’t answer every consumer question, they can resolve common issues and help troubleshoot problems.

Another everyday example of NLP is text autocorrect. If you type a message to someone and make spelling mistakes, autocorrect uses spell checking to suggest the correct spelling of individual words and, in some cases, corrections to whole sentences.
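One classic way to implement the spell-checking part of autocorrect is to replace an unknown word with the dictionary word at the smallest edit (Levenshtein) distance. The sketch below is a minimal illustration under stated assumptions: the seven-word vocabulary, the `autocorrect` function, and its `max_distance` threshold are hypothetical. Real autocorrect systems use large dictionaries, word frequencies, keyboard layout, and surrounding context to choose between candidates.

```python
# Hypothetical small vocabulary for illustration.
VOCABULARY = ["hello", "help", "world", "message", "spelling", "sentence", "correct"]

def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance: minimum insertions, deletions, and substitutions."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,               # delete ca
                curr[j - 1] + 1,           # insert cb
                prev[j - 1] + (ca != cb),  # substitute (free if equal)
            ))
        prev = curr
    return prev[-1]

def autocorrect(word: str, max_distance: int = 2) -> str:
    """Replace word with the closest vocabulary entry, if one is close enough."""
    if word in VOCABULARY:
        return word
    best = min(VOCABULARY, key=lambda v: edit_distance(word, v))
    return best if edit_distance(word, best) <= max_distance else word

print(" ".join(autocorrect(w) for w in "speling corect mesage".split()))
# spelling correct message
```

The `max_distance` threshold keeps words that are far from anything in the vocabulary unchanged rather than forcing a poor correction.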

Frequently asked questions

What are natural language processing models?

Natural language processing models are computer programs that can translate, summarize, analyze sentiment, and carry out other activities using natural language input, such as speech or text. The majority of NLP models are built using deep learning or machine learning methods that use a lot of linguistic data to learn.

What are the types of NLP models?

There are two primary categories of NLP models: statistical and rule-based. Rule-based models produce and analyze natural language data by using dictionaries and predetermined rules. To learn from language data and provide predictions, statistical models employ data-driven methodologies and probabilistic techniques.

What are the challenges of NLP models?

Natural language is diverse and complicated, which presents numerous obstacles for NLP models. Ambiguity, variability, context-dependence, metaphorical language, noise, domain-specificity, and a deficiency of labeled data are a few of these difficulties.

What are the applications of NLP models?

Search engines, chatbots, voice assistants, social media analysis, text mining, information extraction, natural language generation, machine translation, speech recognition, text summarization, question answering, sentiment analysis, and more are just a few of the many fields and industries in which NLP models find extensive use.
